For several versions now, CMake by default refers to macOS’ Clang as AppleClang instead of just Clang, which would fail STREQUAL. Fixed by changing it to MATCHES.

Ok, so, i'm still trying to get to the state when it will be a trivial change to report all the separate iterations.

The old code (LHS of the diff) was rather convoluted i'd say.

I have tried to refactor it a bit into *small* logical chunks, with proper comments.

As far as i can tell, i preserved the intent of the code, what it was doing before.

The road forward still isn't clear, but i'm quite sure it's not with the old code :)

The State constructor should not be part of the public API. Adding a

utility method to BenchmarkInstance allows us to avoid leaking the

RunInThread method into the public API.

My knowledge of python is not great, so this is kinda horrible.

Two things:

1. If there were repetitions, for the RHS (i.e. the new value) we were always using the first repetition,

which naturally results in incorrect change reports for the second and following repetitions.

And what is even worse, that completely broke U test. :(

2. A better support for different repetition count for U test was missing.

It's important if we are to be able to report 'iteration as repetition',

since it is rather likely that the iteration count will mismatch.

Now, the rough idea on how this is implemented now. I think this is the right solution.

1. Get all benchmark names (in order) from the lhs benchmark.

2. While preserving the order, keep the unique names

3. Get all benchmark names (in order) from the rhs benchmark.

4. While preserving the order, keep the unique names

5. Intersect `2.` and `4.`, get the list of unique benchmark names that exist on both sides.

6. Now, we want to group (partition) all the benchmarks with the same name.

```

BM_FOO:

[lhs]: BM_FOO/repetition0 BM_FOO/repetition1

[rhs]: BM_FOO/repetition0 BM_FOO/repetition1 BM_FOO/repetition2

...

```

We also drop mismatches in `time_unit` here.

_(whose bright idea was it to store arbitrarily scaled timers in json **?!** )_

7. Iterate for each partition

7.1. Conditionally, diff the overlapping repetitions (the count of repetitions may be different.)

7.2. Conditionally, do the U test:

7.2.1. Get **all** the values of `"real_time"` field from the lhs benchmark

7.2.2. Get **all** the values of `"cpu_time"` field from the lhs benchmark

7.2.3. Get **all** the values of `"real_time"` field from the rhs benchmark

7.2.4. Get **all** the values of `"cpu_time"` field from the rhs benchmark

NOTE: the repetition count may be different, but we want *all* the values!

7.2.5. Do the rest of the u test stuff

7.2.6. Print u test

8. ???

9. **PROFIT**!

Fixes#677

When building for ARM, there is a fallback codepath that uses

gettimeofday, which requires sys/time.h.

The Windows SDK doesn't have this header, but MinGW does have it.

Thus, this fixes building for Windows on ARM with MinGW

headers/libraries, while Windows on ARM with the Windows SDK still

is broken.

The windows SDK headers don't have self-consistent casing anyway,

and many projects consistently use lowercase for them, in order

to fix crosscompilation with mingw headers.

As discussed with @dominichamon and @dbabokin, sugar is nice.

Well, maybe not for the health, but it's sweet.

Alright, enough puns.

A special care needs to be applied not to break csv reporter. UGH.

We end up shedding some code over this.

We no longer specially pretty-print them, they are printed just like the rest of custom counters.

Fixes#627.

This is related to @BaaMeow's work in https://github.com/google/benchmark/pull/616 but is not based on it.

Two new fields are tracked, and dumped into JSON:

* If the run is an aggregate, the aggregate's name is stored.

It can be RMS, BigO, mean, median, stddev, or any custom stat name.

* The aggregate-name-less run name is additionally stored.

I.e. not some name of the benchmark function, but the actual

name, but without the 'aggregate name' suffix.

This way one can group/filter all the runs,

and filter by the particular aggregate type.

I *might* need this for further tooling improvement.

Or maybe not.

But this is certainly worthwhile for custom tooling.

`MSVC` is true for clang-cl, but `"${CMAKE_CXX_COMPILER_ID}" STREQUAL

"MSVC"` is false, so we would enable -Wall, which means -Weverything

with clang-cl, and we get tons of undesired warnings.

Use the simpler condition to fix things.

Patch by: Reid Kleckner @rnk

I have absolutely no way to test this, but this looks obviously-good.

This was reported by Tim Northover @TNorthover in

http://lists.llvm.org/pipermail/llvm-commits/Week-of-Mon-20180903/584223.html

> I think this breaks some 32-bit configurations (well, mine at least).

> I was using Clang (from Xcode 10 beta) on macOS and got a bunch of

> errors referencing sysinfo.cc:292 and onwards:

> /Users/tim/llvm/llvm-project/llvm/utils/benchmark/src/sysinfo.cc:292:47:

> error: non-constant-expression cannot be narrowed from type

> 'std::__1::array<unsigned long long, 4>::value_type' (aka 'unsigned

> long long') to 'size_t' (aka 'unsigned long') in initializer list

> [-Wc++11-narrowing]

> } Cases[] = {{"hw.l1dcachesize", "Data", 1, CacheCounts[1]},

> ^~~~~~~~~~~~~~

>

> The same happens when self-hosting ToT. Unfortunately I couldn't

> reproduce the issue on Debian (Clang 6.0.1) even with libc++; I'm not

> sure what the difference is.

They are basically proto-version of custom user counters.

It does not seem that they do anything that custom user counters

don't do. And having two similar entities is not good for generalization.

Migration plan:

* ```

SetItemsProcessed(<val>)

=>

state.counters.insert({

{"<Name>", benchmark::Counter(<val>, benchmark::Counter::kIsRate)},

...

});

```

* ```

SetBytesProcessed(<val>)

=>

state.counters.insert({

{"<Name>", benchmark::Counter(<val>, benchmark::Counter::kIsRate,

benchmark::Counter::OneK::kIs1024)},

...

});

```

* ```

<Name>_processed()

=>

state.counters["<Name>"]

```

One thing the custom user counters miss is better support

for units of measurement.

Refs. https://github.com/google/benchmark/issues/627

* Counter(): add 'one thousand' param.

Needed for https://github.com/google/benchmark/pull/654

Custom user counters are quite custom. It is not guaranteed

that the user *always* expects for these to have 1k == 1000.

If the counter represents bytes/memory/etc, 1k should be 1024.

Some bikeshedding points:

1. Is this sufficient, or do we really want to go full on

into custom types with names?

I think just the '1000' is sufficient for now.

2. Should there be a helper benchmark::Counter::Counter{1000,1024}()

static 'constructor' functions, since these two, by far,

will be the most used?

3. In the future, we should be somehow encoding this info into JSON.

* Counter(): use std::pair<> to represent 'one thousand'

* Counter(): just use a new enum with two values 1000 vs 1024.

Simpler is better. If someone comes up with a real reason

to need something more advanced, it can be added later on.

* Counter: just store the 1000 or 1024 in the One_K values directly

* Counter: s/One_K/OneK/

There are two destinations:

* display (console, terminal) and

* file.

And each of the destinations can be poplulated with one of the reporters:

* console - human-friendly table-like display

* json

* csv (deprecated)

So using the name console_reporter is confusing.

Is it talking about the console reporter in the sense of

table-like reporter, or in the sense of display destination?

This is *only* exposed in the JSON. Not in CSV, which is deprecated.

This *only* supposed to track these two states.

An additional field could later track which aggregate this is,

specifically (statistic name, rms, bigo, ...)

The motivation is that we already have ReportAggregatesOnly,

but it affects the entire reports, both the display,

and the reporters (json files), which isn't ideal.

It would be very useful to have a 'display aggregates only' option,

both in the library's console reporter, and the python tooling,

This will be especially needed for the 'store separate iterations'.

This only specifically represents handling of reporting of aggregates.

Not of anything else. Making it more specific makes the name less generic.

This is an issue because i want to add "iteration report mode",

so the naming would be conflicting.

found while working on reproducible builds for openSUSE

To reproduce there

osc checkout openSUSE:Factory/benchmark && cd $_

osc build -j1 --vm-type=kvm

* Remove redundant default which causes failures

* Fix old GCC warnings caused by poor analysis

* Use __builtin_unreachable

* Use BENCHMARK_UNREACHABLE()

* Pull __has_builtin to benchmark.h too

* Also move compiler identification macro to main header

* Move custom compiler identification macro back

High system load can skew benchmark results. By including system load averages

in the library's output, we help users identify a potential issue in the

quality of their measurements, and thus assist them in producing better (more

reproducible) results.

I got the idea for this from Brendan Gregg's checklist for benchmark accuracy

(http://www.brendangregg.com/blog/2018-06-30/benchmarking-checklist.html).

* Set -Wno-deprecated-declarations for Intel

Intel compiler silently ignores -Wno-deprecated-declarations

so warning no. 1786 must be explicitly suppressed.

* Make std::int64_t → double casts explicit

While std::int64_t → double is a perfectly conformant

implicit conversion, Intel compiler warns about it.

Make them explicit via static_cast<double>.

* Make std::int64_t → int casts explicit

Intel compiler warns about emplacing an std::int64_t

into an int container. Just make the conversion explicit

via static_cast<int>.

* Cleanup Intel -Wno-deprecated-declarations workaround logic

Inspired by these [two](a1ebe07bea) [bugs](0891555be5) in my code due to the lack of those i have found fixed in my code:

* `kIsIterationInvariant` - `* state.iterations()`

The value is constant for every iteration, and needs to be **multiplied** by the iteration count.

* `kAvgIterations` - `/ state.iterations()`

The is global over all the iterations, and needs to be **divided** by the iteration count.

They play nice with `kIsRate`:

* `kIsIterationInvariantRate`

* `kAvgIterationsRate`.

I'm not sure how meaningful they are when combined with `kAvgThreads`.

I guess the `kIsThreadInvariant` can be added, too, for symmetry with `kAvgThreads`.

As previously discussed, let's flip the switch ^^.

This exposes the problem that it will now be run

for everyone, even if one did not read the help

about the recommended repetition count.

This is not good. So i think we can do the smart thing:

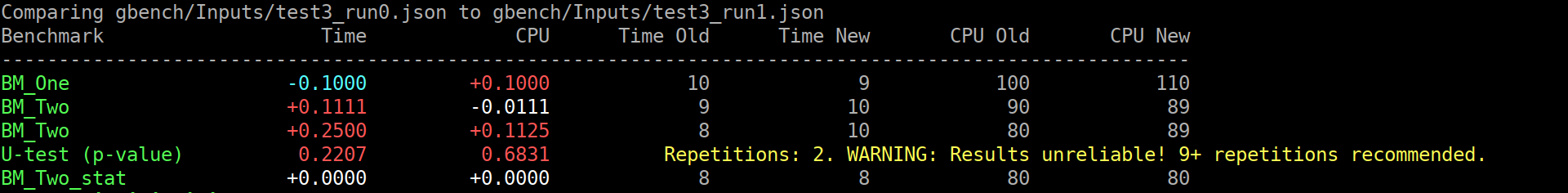

```

$ ./compare.py benchmarks gbench/Inputs/test3_run{0,1}.json

Comparing gbench/Inputs/test3_run0.json to gbench/Inputs/test3_run1.json

Benchmark Time CPU Time Old Time New CPU Old CPU New

--------------------------------------------------------------------------------------------------------

BM_One -0.1000 +0.1000 10 9 100 110

BM_Two +0.1111 -0.0111 9 10 90 89

BM_Two +0.2500 +0.1125 8 10 80 89

BM_Two_pvalue 0.2207 0.6831 U Test, Repetitions: 2. WARNING: Results unreliable! 9+ repetitions recommended.

BM_Two_stat +0.0000 +0.0000 8 8 80 80

```

(old screenshot)

Or, in the good case (noise omitted):

```

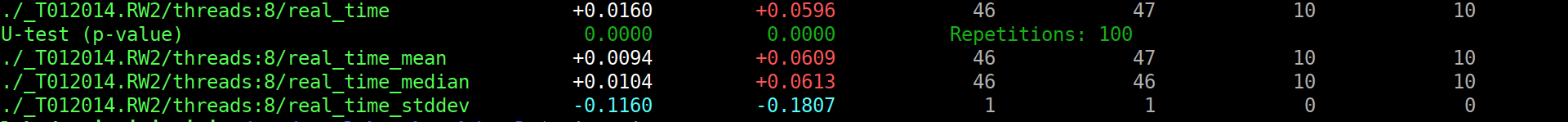

s$ ./compare.py benchmarks /tmp/run{0,1}.json

Comparing /tmp/run0.json to /tmp/run1.json

Benchmark Time CPU Time Old Time New CPU Old CPU New

---------------------------------------------------------------------------------------------------------------------------------

<99 more rows like this>

./_T012014.RW2/threads:8/real_time +0.0160 +0.0596 46 47 10 10

./_T012014.RW2/threads:8/real_time_pvalue 0.0000 0.0000 U Test, Repetitions: 100

./_T012014.RW2/threads:8/real_time_mean +0.0094 +0.0609 46 47 10 10

./_T012014.RW2/threads:8/real_time_median +0.0104 +0.0613 46 46 10 10

./_T012014.RW2/threads:8/real_time_stddev -0.1160 -0.1807 1 1 0 0

```

(old screenshot)

* Fix compilation on Android with GNU STL

GNU STL in Android NDK lacks string conversion functions from C++11, including std::stoul, std::stoi, and std::stod.

This patch reimplements these functions in benchmark:: namespace using C-style equivalents from C++03.

* Avoid use of log2 which doesn't exist in Android GNU STL

GNU STL in Android NDK lacks log2 function from C99/C++11.

This patch replaces their use in the code with double log(double) function.

* format all documents according to contributor guidelines and specifications

use clang-format on/off to stop formatting when it makes excessively poor decisions

* format all tests as well, and mark blocks which change too much

As @dominichamon and I have discussed, the current reporter interface

is poor at best. And something should be done to fix it.

I strongly suspect such a fix will require an entire reimagining

of the API, and therefore breaking backwards compatibility fully.

For that reason we should start deprecating and removing parts

that we don't intend to replace. One of these parts, I argue,

is the CSVReporter. I propose that the new reporter interface

should choose a single output format (JSON) and traffic entirely

in that. If somebody really wanted to replace the functionality

of the CSVReporter they would do so as an external tool which

transforms the JSON.

For these reasons I propose deprecating the CSVReporter.

The first problem you have to solve yourself. The second one can be aided.

The benchmark library can compute some statistics over the repetitions,

which helps with grasping the results somewhat.

But that is only for the one set of results. It does not really help to compare

the two benchmark results, which is the interesting bit. Thankfully, there are

these bundled `tools/compare.py` and `tools/compare_bench.py` scripts.

They can provide a diff between two benchmarking results. Yay!

Except not really, it's just a diff, while it is very informative and better than

nothing, it does not really help answer The Question - am i just looking at the noise?

It's like not having these per-benchmark statistics...

Roughly, we can formulate the question as:

> Are these two benchmarks the same?

> Did my change actually change anything, or is the difference below the noise level?

Well, this really sounds like a [null hypothesis](https://en.wikipedia.org/wiki/Null_hypothesis), does it not?

So maybe we can use statistics here, and solve all our problems?

lol, no, it won't solve all the problems. But maybe it will act as a tool,

to better understand the output, just like the usual statistics on the repetitions...

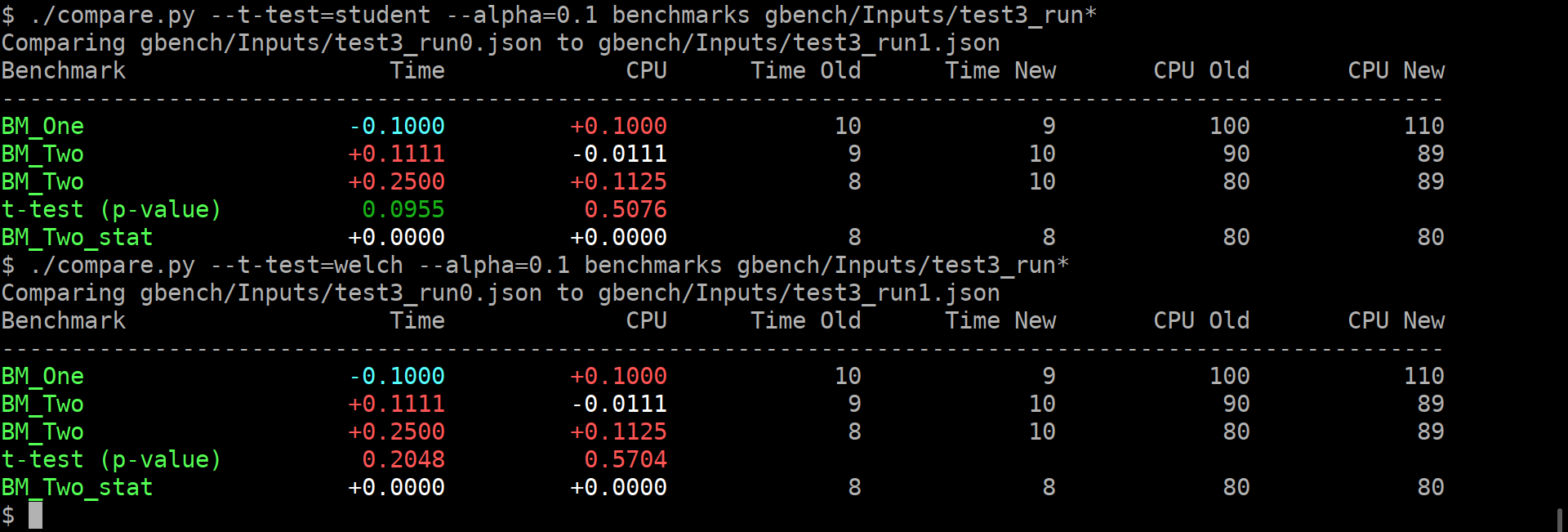

I'm making an assumption here that most of the people care about the change

of average value, not the standard deviation. Thus i believe we can use T-Test,

be it either [Student's t-test](https://en.wikipedia.org/wiki/Student%27s_t-test), or [Welch's t-test](https://en.wikipedia.org/wiki/Welch%27s_t-test).

**EDIT**: however, after @dominichamon review, it was decided that it is better

to use more robust [Mann–Whitney U test](https://en.wikipedia.org/wiki/Mann–Whitney_U_test)

I'm using [scipy.stats.mannwhitneyu](https://docs.scipy.org/doc/scipy/reference/generated/scipy.stats.mannwhitneyu.html#scipy.stats.mannwhitneyu).

There are two new user-facing knobs:

```

$ ./compare.py --help

usage: compare.py [-h] [-u] [--alpha UTEST_ALPHA]

{benchmarks,filters,benchmarksfiltered} ...

versatile benchmark output compare tool

<...>

optional arguments:

-h, --help show this help message and exit

-u, --utest Do a two-tailed Mann-Whitney U test with the null

hypothesis that it is equally likely that a randomly

selected value from one sample will be less than or

greater than a randomly selected value from a second

sample. WARNING: requires **LARGE** (9 or more)

number of repetitions to be meaningful!

--alpha UTEST_ALPHA significance level alpha. if the calculated p-value is

below this value, then the result is said to be

statistically significant and the null hypothesis is

rejected. (default: 0.0500)

```

Example output:

As you can guess, the alpha does affect anything but the coloring of the computed p-values.

If it is green, then the change in the average values is statistically-significant.

I'm detecting the repetitions by matching name. This way, no changes to the json are _needed_.

Caveats:

* This won't work if the json is not in the same order as outputted by the benchmark,

or if the parsing does not retain the ordering.

* This won't work if after the grouped repetitions there isn't at least one row with

different name (e.g. statistic). Since there isn't a knob to disable printing of statistics

(only the other way around), i'm not too worried about this.

* **The results will be wrong if the repetition count is different between the two benchmarks being compared.**

* Even though i have added (hopefully full) test coverage, the code of these python tools is staring

to look a bit jumbled.

* So far i have added this only to the `tools/compare.py`.

Should i add it to `tools/compare_bench.py` too?

Or should we deduplicate them (by removing the latter one)?