mirror of https://github.com/google/benchmark.git

[Tooling] Enable U Test by default, add tooltip about repetition count. (#617)

As previously discussed, let's flip the switch ^^.

This exposes the problem that it will now be run for everyone, even if one did not read the help about the recommended repetition count.

This is not good. So I think we can do the smart thing:

```

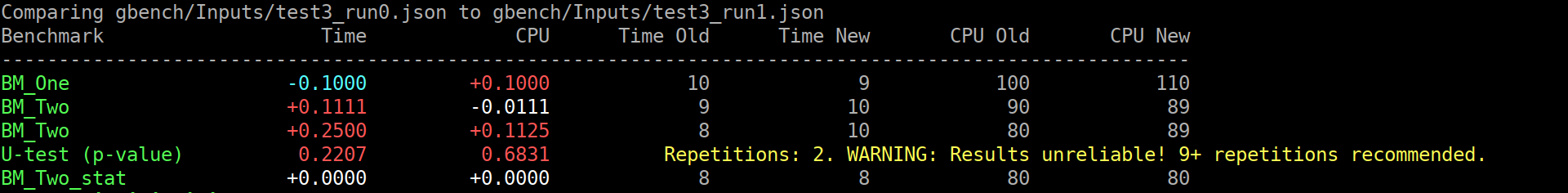

$ ./compare.py benchmarks gbench/Inputs/test3_run{0,1}.json

Comparing gbench/Inputs/test3_run0.json to gbench/Inputs/test3_run1.json

Benchmark Time CPU Time Old Time New CPU Old CPU New

--------------------------------------------------------------------------------------------------------

BM_One -0.1000 +0.1000 10 9 100 110

BM_Two +0.1111 -0.0111 9 10 90 89

BM_Two +0.2500 +0.1125 8 10 80 89

BM_Two_pvalue 0.2207 0.6831 U Test, Repetitions: 2. WARNING: Results unreliable! 9+ repetitions recommended.

BM_Two_stat +0.0000 +0.0000 8 8 80 80

```

(old screenshot)

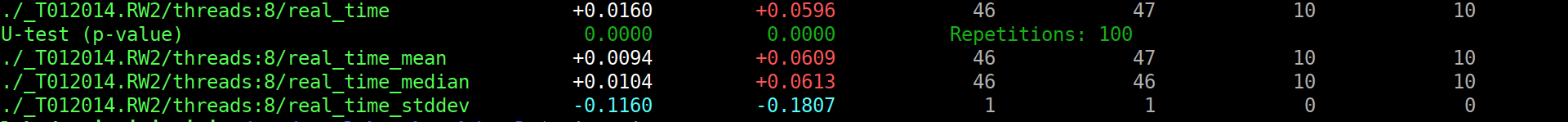

Or, in the good case (noise omitted):

```
$ ./compare.py benchmarks /tmp/run{0,1}.json
Comparing /tmp/run0.json to /tmp/run1.json
Benchmark Time CPU Time Old Time New CPU Old CPU New
---------------------------------------------------------------------------------------------------------------------------------
<99 more rows like this>
./_T012014.RW2/threads:8/real_time +0.0160 +0.0596 46 47 10 10
./_T012014.RW2/threads:8/real_time_pvalue 0.0000 0.0000 U Test, Repetitions: 100
./_T012014.RW2/threads:8/real_time_mean +0.0094 +0.0609 46 47 10 10
./_T012014.RW2/threads:8/real_time_median +0.0104 +0.0613 46 46 10 10
./_T012014.RW2/threads:8/real_time_stddev -0.1160 -0.1807 1 1 0 0
```
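The policy visible in the two outputs above is: no U test below two repetitions, a U test with a warning below nine, and a plain U test from nine on. A minimal sketch of that policy follows. The two constant names and values are taken from the diff below; `describe_utest` is a hypothetical helper, the `--no-utest` switch is ignored here, and in the actual tool the repetition count is `len(timings_cpu[0])`.

```python
# Constants as introduced by this commit.
UTEST_MIN_REPETITIONS = 2       # below this, no p-value row is printed
UTEST_OPTIMAL_REPETITIONS = 9   # below this, the row carries a warning


def describe_utest(repetitions):
    """Hypothetical helper: return the text printed after the p-values,
    or None when the U test is skipped for lack of repetitions."""
    if repetitions < UTEST_MIN_REPETITIONS:
        return None
    dsc = "U Test, Repetitions: {}".format(repetitions)
    if repetitions < UTEST_OPTIMAL_REPETITIONS:
        dsc += ". WARNING: Results unreliable! {}+ repetitions recommended.".format(
            UTEST_OPTIMAL_REPETITIONS)
    return dsc


if __name__ == "__main__":
    for n in (1, 2, 100):
        print(n, describe_utest(n))
```

For 2 and 100 repetitions this yields the same description text as the `BM_Two_pvalue` and `real_time_pvalue` rows shown above.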


parent 151ead6242

commit 7d03f2df49

@@ -38,10 +38,11 @@ def create_parser():
 
     utest = parser.add_argument_group()
     utest.add_argument(
-        '-u',
-        '--utest',
-        action="store_true",
-        help="Do a two-tailed Mann-Whitney U test with the null hypothesis that it is equally likely that a randomly selected value from one sample will be less than or greater than a randomly selected value from a second sample.\nWARNING: requires **LARGE** (no less than 9) number of repetitions to be meaningful!")
+        '--no-utest',
+        dest='utest',
+        default=True,
+        action="store_false",
+        help="The tool can do a two-tailed Mann-Whitney U test with the null hypothesis that it is equally likely that a randomly selected value from one sample will be less than or greater than a randomly selected value from a second sample.\nWARNING: requires **LARGE** (no less than {}) number of repetitions to be meaningful!\nThe test is being done by default, if at least {} repetitions were done.\nThis option can disable the U Test.".format(report.UTEST_OPTIMAL_REPETITIONS, report.UTEST_MIN_REPETITIONS))
     alpha_default = 0.05
     utest.add_argument(
         "--alpha",
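The new flag follows a common `argparse` idiom for an option that is on by default: `--no-utest` stores `False` into the same `utest` destination, whose default is `True`. The self-contained sketch below covers only that part. The parser description and shortened help strings are placeholders, the `utest_alpha` destination is inferred from the tests further down, and `type=float` / `default=0.05` for `--alpha` are assumptions, since the hunk ends before those arguments.

```python
import argparse


def create_parser():
    """Simplified stand-in for the compare.py parser (U-test options only)."""
    parser = argparse.ArgumentParser(description="compare sketch")
    utest = parser.add_argument_group()
    # On by default; the flag can only switch it off.
    utest.add_argument(
        '--no-utest',
        dest='utest',
        default=True,
        action="store_false",
        help="Disable the Mann-Whitney U test (enabled by default).")
    # Assumed shape of the significance-level option.
    utest.add_argument(
        "--alpha",
        dest='utest_alpha',
        default=0.05,
        type=float,
        help="Significance level alpha for the U test.")
    return parser


if __name__ == "__main__":
    p = create_parser()
    print(p.parse_args([]))
    print(p.parse_args(['--no-utest', '--alpha=0.314']))
```

Because both options share the `utest` destination logic, `--alpha` alone no longer needs `--utest` to take effect, which is what the updated `test_benchmarks_basic_with_utest_alpha` checks.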

@@ -245,26 +246,16 @@ class TestParser(unittest.TestCase):
     def test_benchmarks_basic(self):
         parsed = self.parser.parse_args(
             ['benchmarks', self.testInput0, self.testInput1])
+        self.assertTrue(parsed.utest)
+        self.assertEqual(parsed.mode, 'benchmarks')
+        self.assertEqual(parsed.test_baseline[0].name, self.testInput0)
+        self.assertEqual(parsed.test_contender[0].name, self.testInput1)
+        self.assertFalse(parsed.benchmark_options)
+
+    def test_benchmarks_basic_without_utest(self):
+        parsed = self.parser.parse_args(
+            ['--no-utest', 'benchmarks', self.testInput0, self.testInput1])
         self.assertFalse(parsed.utest)
-        self.assertEqual(parsed.mode, 'benchmarks')
-        self.assertEqual(parsed.test_baseline[0].name, self.testInput0)
-        self.assertEqual(parsed.test_contender[0].name, self.testInput1)
-        self.assertFalse(parsed.benchmark_options)
-
-    def test_benchmarks_basic_with_utest(self):
-        parsed = self.parser.parse_args(
-            ['-u', 'benchmarks', self.testInput0, self.testInput1])
-        self.assertTrue(parsed.utest)
-        self.assertEqual(parsed.utest_alpha, 0.05)
-        self.assertEqual(parsed.mode, 'benchmarks')
-        self.assertEqual(parsed.test_baseline[0].name, self.testInput0)
-        self.assertEqual(parsed.test_contender[0].name, self.testInput1)
-        self.assertFalse(parsed.benchmark_options)
-
-    def test_benchmarks_basic_with_utest(self):
-        parsed = self.parser.parse_args(
-            ['--utest', 'benchmarks', self.testInput0, self.testInput1])
-        self.assertTrue(parsed.utest)
         self.assertEqual(parsed.utest_alpha, 0.05)
         self.assertEqual(parsed.mode, 'benchmarks')
         self.assertEqual(parsed.test_baseline[0].name, self.testInput0)

@@ -273,7 +264,7 @@ class TestParser(unittest.TestCase):
 
     def test_benchmarks_basic_with_utest_alpha(self):
         parsed = self.parser.parse_args(
-            ['--utest', '--alpha=0.314', 'benchmarks', self.testInput0, self.testInput1])
+            ['--alpha=0.314', 'benchmarks', self.testInput0, self.testInput1])
         self.assertTrue(parsed.utest)
         self.assertEqual(parsed.utest_alpha, 0.314)
         self.assertEqual(parsed.mode, 'benchmarks')

@@ -281,10 +272,20 @@ class TestParser(unittest.TestCase):
         self.assertEqual(parsed.test_contender[0].name, self.testInput1)
         self.assertFalse(parsed.benchmark_options)
 
+    def test_benchmarks_basic_without_utest_with_utest_alpha(self):
+        parsed = self.parser.parse_args(
+            ['--no-utest', '--alpha=0.314', 'benchmarks', self.testInput0, self.testInput1])
+        self.assertFalse(parsed.utest)
+        self.assertEqual(parsed.utest_alpha, 0.314)
+        self.assertEqual(parsed.mode, 'benchmarks')
+        self.assertEqual(parsed.test_baseline[0].name, self.testInput0)
+        self.assertEqual(parsed.test_contender[0].name, self.testInput1)
+        self.assertFalse(parsed.benchmark_options)
+
     def test_benchmarks_with_remainder(self):
         parsed = self.parser.parse_args(
             ['benchmarks', self.testInput0, self.testInput1, 'd'])
-        self.assertFalse(parsed.utest)
+        self.assertTrue(parsed.utest)
         self.assertEqual(parsed.mode, 'benchmarks')
         self.assertEqual(parsed.test_baseline[0].name, self.testInput0)
         self.assertEqual(parsed.test_contender[0].name, self.testInput1)

@@ -293,7 +294,7 @@ class TestParser(unittest.TestCase):
     def test_benchmarks_with_remainder_after_doubleminus(self):
         parsed = self.parser.parse_args(
             ['benchmarks', self.testInput0, self.testInput1, '--', 'e'])
-        self.assertFalse(parsed.utest)
+        self.assertTrue(parsed.utest)
         self.assertEqual(parsed.mode, 'benchmarks')
         self.assertEqual(parsed.test_baseline[0].name, self.testInput0)
         self.assertEqual(parsed.test_contender[0].name, self.testInput1)

@@ -302,7 +303,7 @@ class TestParser(unittest.TestCase):
     def test_filters_basic(self):
         parsed = self.parser.parse_args(
             ['filters', self.testInput0, 'c', 'd'])
-        self.assertFalse(parsed.utest)
+        self.assertTrue(parsed.utest)
         self.assertEqual(parsed.mode, 'filters')
         self.assertEqual(parsed.test[0].name, self.testInput0)
         self.assertEqual(parsed.filter_baseline[0], 'c')

@@ -312,7 +313,7 @@ class TestParser(unittest.TestCase):
     def test_filters_with_remainder(self):
         parsed = self.parser.parse_args(
             ['filters', self.testInput0, 'c', 'd', 'e'])
-        self.assertFalse(parsed.utest)
+        self.assertTrue(parsed.utest)
         self.assertEqual(parsed.mode, 'filters')
         self.assertEqual(parsed.test[0].name, self.testInput0)
         self.assertEqual(parsed.filter_baseline[0], 'c')

@@ -322,7 +323,7 @@ class TestParser(unittest.TestCase):
     def test_filters_with_remainder_after_doubleminus(self):
         parsed = self.parser.parse_args(
             ['filters', self.testInput0, 'c', 'd', '--', 'f'])
-        self.assertFalse(parsed.utest)
+        self.assertTrue(parsed.utest)
         self.assertEqual(parsed.mode, 'filters')
         self.assertEqual(parsed.test[0].name, self.testInput0)
         self.assertEqual(parsed.filter_baseline[0], 'c')

@@ -332,7 +333,7 @@ class TestParser(unittest.TestCase):
     def test_benchmarksfiltered_basic(self):
         parsed = self.parser.parse_args(
             ['benchmarksfiltered', self.testInput0, 'c', self.testInput1, 'e'])
-        self.assertFalse(parsed.utest)
+        self.assertTrue(parsed.utest)
         self.assertEqual(parsed.mode, 'benchmarksfiltered')
         self.assertEqual(parsed.test_baseline[0].name, self.testInput0)
         self.assertEqual(parsed.filter_baseline[0], 'c')

@@ -343,7 +344,7 @@ class TestParser(unittest.TestCase):
     def test_benchmarksfiltered_with_remainder(self):
         parsed = self.parser.parse_args(
             ['benchmarksfiltered', self.testInput0, 'c', self.testInput1, 'e', 'f'])
-        self.assertFalse(parsed.utest)
+        self.assertTrue(parsed.utest)
         self.assertEqual(parsed.mode, 'benchmarksfiltered')
         self.assertEqual(parsed.test_baseline[0].name, self.testInput0)
         self.assertEqual(parsed.filter_baseline[0], 'c')

@@ -354,7 +355,7 @@ class TestParser(unittest.TestCase):
     def test_benchmarksfiltered_with_remainder_after_doubleminus(self):
         parsed = self.parser.parse_args(
             ['benchmarksfiltered', self.testInput0, 'c', self.testInput1, 'e', '--', 'g'])
-        self.assertFalse(parsed.utest)
+        self.assertTrue(parsed.utest)
         self.assertEqual(parsed.mode, 'benchmarksfiltered')
         self.assertEqual(parsed.test_baseline[0].name, self.testInput0)
         self.assertEqual(parsed.filter_baseline[0], 'c')

@@ -29,7 +29,7 @@
       "time_unit": "ns"
     },
     {
-      "name": "BM_Two_stat",
+      "name": "short",
       "iterations": 1000,
       "real_time": 8,
       "cpu_time": 80,

@@ -29,7 +29,7 @@
       "time_unit": "ns"
     },
     {
-      "name": "BM_Two_stat",
+      "name": "short",
       "iterations": 1000,
       "real_time": 8,
       "cpu_time": 80,

@@ -34,6 +34,9 @@ BC_ENDC = BenchmarkColor('ENDC', '\033[0m')
 BC_BOLD = BenchmarkColor('BOLD', '\033[1m')
 BC_UNDERLINE = BenchmarkColor('UNDERLINE', '\033[4m')
 
+UTEST_MIN_REPETITIONS = 2
+UTEST_OPTIMAL_REPETITIONS = 9  # Lowest reasonable number, More is better.
+
 
 def color_format(use_color, fmt_str, *args, **kwargs):
     """
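One way to see why very small repetition counts are weak is to look at the Mann-Whitney U statistic itself. The sketch below is illustrative only and is not what the tool computes (the hunks below call `mannwhitneyu` with `alternative='two-sided'`). It counts U directly, and, assuming no ties, computes the smallest two-sided p-value an exact test could ever report with `n` repetitions per side. Both function names are hypothetical.

```python
from math import comb


def mann_whitney_u(x, y):
    """U statistic for sample x: pairs where x is larger, ties count 1/2."""
    u = 0.0
    for a in x:
        for b in y:
            if a > b:
                u += 1.0
            elif a == b:
                u += 0.5
    return u


def min_exact_two_sided_p(n):
    """Smallest exact two-sided p-value with n samples per side, no ties.

    Under the null hypothesis all comb(2n, n) rank splits are equally likely,
    and only the two most extreme splits (one sample entirely below, or
    entirely above, the other) reach the minimum.
    """
    return 2 / comb(2 * n, n)


if __name__ == "__main__":
    # BM_Two real times from the example output: old 9, 8; new 10, 10.
    print(mann_whitney_u([9, 8], [10, 10]))
    for n in (2, 3, 4, 9):
        print(n, min_exact_two_sided_p(n))
```

With two repetitions per side the floor is 1/3 and with three it is 0.1, so an exact test could not reach the default alpha of 0.05; from four on it can. This only bounds what is reachable at all and does not by itself explain the choice of 9.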

@@ -109,11 +112,11 @@ def generate_difference_report(
                 return b
         return None
 
-    utest_col_name = "U-test (p-value)"
+    utest_col_name = "_pvalue"
     first_col_width = max(
         first_col_width,
-        len('Benchmark'),
-        len(utest_col_name))
+        len('Benchmark'))
+    first_col_width += len(utest_col_name)
     first_line = "{:<{}s}Time CPU Time Old Time New CPU Old CPU New".format(
         'Benchmark', 12 + first_col_width)
     output_strs = [first_line, '-' * len(first_line)]
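The p-value row is now labelled `<benchmark name>_pvalue` instead of carrying a fixed `U-test (p-value)` label, so the first column has to fit the longest name plus the suffix rather than the longer of the two. A minimal sketch of that width rule follows. It assumes `first_col_width` starts out as the length of the longest benchmark name (its computation is outside the hunk), and `first_column_width` is a hypothetical helper taking a plain list of names in place of `json1['benchmarks']`.

```python
UTEST_COL_NAME = "_pvalue"  # suffix appended to the benchmark name


def first_column_width(names):
    """Width of the first report column for the given benchmark names."""
    # Assumed starting point: length of the longest name.
    width = max((len(name) for name in names), default=0)
    width = max(width, len('Benchmark'))
    # Leave room for the "<name>_pvalue" rows printed after each group.
    width += len(UTEST_COL_NAME)
    return width


if __name__ == "__main__":
    names = ["BM_One", "BM_Two", "short"]
    width = first_column_width(names)
    print(width)
    print("[{:<{}s}]".format("BM_Two" + UTEST_COL_NAME, width))
```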

@@ -126,16 +129,15 @@ def generate_difference_report(
            if 'real_time' in bn and 'cpu_time' in bn)
     for bn in gen:
         fmt_str = "{}{:<{}s}{endc}{}{:+16.4f}{endc}{}{:+16.4f}{endc}{:14.0f}{:14.0f}{endc}{:14.0f}{:14.0f}"
-        special_str = "{}{:<{}s}{endc}{}{:16.4f}{endc}{}{:16.4f}"
+        special_str = "{}{:<{}s}{endc}{}{:16.4f}{endc}{}{:16.4f}{endc}{} {}"
 
         if last_name is None:
             last_name = bn['name']
         if last_name != bn['name']:
-            MIN_REPETITIONS = 2
-            if ((len(timings_time[0]) >= MIN_REPETITIONS) and
-                (len(timings_time[1]) >= MIN_REPETITIONS) and
-                (len(timings_cpu[0]) >= MIN_REPETITIONS) and
-                (len(timings_cpu[1]) >= MIN_REPETITIONS)):
+            if ((len(timings_time[0]) >= UTEST_MIN_REPETITIONS) and
+                (len(timings_time[1]) >= UTEST_MIN_REPETITIONS) and
+                (len(timings_cpu[0]) >= UTEST_MIN_REPETITIONS) and
+                (len(timings_cpu[1]) >= UTEST_MIN_REPETITIONS)):
                 if utest:
                     def get_utest_color(pval):
                         if pval >= utest_alpha:
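The gate now reads its threshold from the module-level `UTEST_MIN_REPETITIONS` instead of a local `MIN_REPETITIONS`. Condensed into a helper (the name `enough_repetitions` is hypothetical, not a function in the tool), the condition is that all four sample lists reach the minimum:

```python
UTEST_MIN_REPETITIONS = 2  # value introduced by this commit


def enough_repetitions(timings_time, timings_cpu, min_reps=UTEST_MIN_REPETITIONS):
    """True if old/new real-time and old/new CPU-time samples all have at
    least min_reps entries, i.e. the U test would be attempted."""
    samples = (timings_time[0], timings_time[1], timings_cpu[0], timings_cpu[1])
    return all(len(s) >= min_reps for s in samples)


if __name__ == "__main__":
    # BM_Two in the example output: two repetitions per side.
    print(enough_repetitions([[9, 8], [10, 10]], [[90, 80], [89, 89]]))
    # BM_One appears only once in the output, so no p-value row for it.
    print(enough_repetitions([[10], [9]], [[100], [110]]))
```

Using `all()` over a tuple keeps the four checks in one place, so raising the threshold later only touches the constant.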

@@ -146,15 +148,24 @@ def generate_difference_report(
                         timings_time[0], timings_time[1], alternative='two-sided').pvalue
                     cpu_pvalue = mannwhitneyu(
                         timings_cpu[0], timings_cpu[1], alternative='two-sided').pvalue
+                    dsc = "U Test, Repetitions: {}".format(len(timings_cpu[0]))
+                    dsc_color = BC_OKGREEN
+                    if len(timings_cpu[0]) < UTEST_OPTIMAL_REPETITIONS:
+                        dsc_color = BC_WARNING
+                        dsc += ". WARNING: Results unreliable! {}+ repetitions recommended.".format(
+                            UTEST_OPTIMAL_REPETITIONS)
                     output_strs += [color_format(use_color,
                                                  special_str,
                                                  BC_HEADER,
-                                                 utest_col_name,
+                                                 "{}{}".format(last_name,
+                                                               utest_col_name),
                                                  first_col_width,
                                                  get_utest_color(time_pvalue),
                                                  time_pvalue,
                                                  get_utest_color(cpu_pvalue),
                                                  cpu_pvalue,
+                                                 dsc_color,
+                                                 dsc,
                                                  endc=BC_ENDC)]
             last_name = bn['name']
             timings_time = [[], []]
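To see how the new `special_str` produces the `BM_Two_pvalue` line of the example output, the format string can be exercised on its own with colours switched off. In this sketch the empty strings stand in for the colour codes and `BC_ENDC` (an assumption about what `color_format` emits when `use_color=False`), `pvalue_row` is a hypothetical helper, and the width 18 is only an example value.

```python
# Format string copied from the diff above.
SPECIAL_STR = "{}{:<{}s}{endc}{}{:16.4f}{endc}{}{:16.4f}{endc}{} {}"


def pvalue_row(last_name, first_col_width, time_pvalue, cpu_pvalue, dsc):
    """Build the '<name>_pvalue' row without colour codes."""
    return SPECIAL_STR.format(
        "",                                   # header colour (BC_HEADER)
        "{}{}".format(last_name, "_pvalue"),  # row label
        first_col_width,                      # label is padded to this width
        "", time_pvalue,                      # colour + real-time p-value
        "", cpu_pvalue,                       # colour + CPU-time p-value
        "", dsc,                              # colour + description text
        endc="")


if __name__ == "__main__":
    dsc = ("U Test, Repetitions: 2. WARNING: Results unreliable! "
           "9+ repetitions recommended.")
    print(pvalue_row("BM_Two", 18, 0.2207, 0.6831, dsc))
```

Splitting the resulting line on whitespace gives the same token list as the `BM_Two_pvalue` entry in the expected lines of `TestReportDifferenceWithUTest`.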

@@ -229,9 +240,12 @@ class TestReportDifference(unittest.TestCase):
             ['BM_1PercentSlower', '+0.0100', '+0.0100', '100', '101', '100', '101'],
             ['BM_10PercentFaster', '-0.1000', '-0.1000', '100', '90', '100', '90'],
             ['BM_10PercentSlower', '+0.1000', '+0.1000', '100', '110', '100', '110'],
-            ['BM_100xSlower', '+99.0000', '+99.0000', '100', '10000', '100', '10000'],
-            ['BM_100xFaster', '-0.9900', '-0.9900', '10000', '100', '10000', '100'],
-            ['BM_10PercentCPUToTime', '+0.1000', '-0.1000', '100', '110', '100', '90'],
+            ['BM_100xSlower', '+99.0000', '+99.0000',
+                '100', '10000', '100', '10000'],
+            ['BM_100xFaster', '-0.9900', '-0.9900',
+                '10000', '100', '10000', '100'],
+            ['BM_10PercentCPUToTime', '+0.1000',
+                '-0.1000', '100', '110', '100', '90'],
             ['BM_ThirdFaster', '-0.3333', '-0.3334', '100', '67', '100', '67'],
             ['BM_BadTimeUnit', '-0.9000', '+0.2000', '0', '0', '0', '1'],
         ]

@@ -239,6 +253,7 @@ class TestReportDifference(unittest.TestCase):
         output_lines_with_header = generate_difference_report(
             json1, json2, use_color=False)
         output_lines = output_lines_with_header[2:]
+        print("\n")
         print("\n".join(output_lines_with_header))
         self.assertEqual(len(output_lines), len(expect_lines))
         for i in range(0, len(output_lines)):

@@ -302,13 +317,26 @@ class TestReportDifferenceWithUTest(unittest.TestCase):
             ['BM_One', '-0.1000', '+0.1000', '10', '9', '100', '110'],
             ['BM_Two', '+0.1111', '-0.0111', '9', '10', '90', '89'],
             ['BM_Two', '+0.2500', '+0.1125', '8', '10', '80', '89'],
-            ['U-test', '(p-value)', '0.2207', '0.6831'],
-            ['BM_Two_stat', '+0.0000', '+0.0000', '8', '8', '80', '80'],
+            ['BM_Two_pvalue',
+             '0.2207',
+             '0.6831',
+             'U',
+             'Test,',
+             'Repetitions:',
+             '2.',
+             'WARNING:',
+             'Results',
+             'unreliable!',
+             '9+',
+             'repetitions',
+             'recommended.'],
+            ['short', '+0.0000', '+0.0000', '8', '8', '80', '80'],
         ]
         json1, json2 = self.load_results()
         output_lines_with_header = generate_difference_report(
             json1, json2, True, 0.05, use_color=False)
         output_lines = output_lines_with_header[2:]
+        print("\n")
         print("\n".join(output_lines_with_header))
         self.assertEqual(len(output_lines), len(expect_lines))
         for i in range(0, len(output_lines)):
