Autopilot: Return leader info via delegate (#11247)

* Autopilot: Return leader info via delegate
* Pull in the new raft-autopilot lib dependencies
* update deps
* Add CL


parent

3da7cdffc7

commit

406abc19dc

|

|

@ -0,0 +1,3 @@

|

|||

```release-note:bug

|

||||

storage/raft: Support cluster address change for nodes in a cluster managed by autopilot

|

||||

```

|

||||

17

go.mod

|

|

@ -22,7 +22,7 @@ require (

|

|||

github.com/aliyun/alibaba-cloud-sdk-go v0.0.0-20190620160927-9418d7b0cd0f

|

||||

github.com/aliyun/aliyun-oss-go-sdk v0.0.0-20190307165228-86c17b95fcd5

|

||||

github.com/apple/foundationdb/bindings/go v0.0.0-20190411004307-cd5c9d91fad2

|

||||

github.com/armon/go-metrics v0.3.4

|

||||

github.com/armon/go-metrics v0.3.7

|

||||

github.com/armon/go-proxyproto v0.0.0-20210323213023-7e956b284f0a

|

||||

github.com/armon/go-radix v1.0.0

|

||||

github.com/asaskevich/govalidator v0.0.0-20180720115003-f9ffefc3facf

|

||||

|

|

@ -40,7 +40,7 @@ require (

|

|||

github.com/dsnet/compress v0.0.1 // indirect

|

||||

github.com/duosecurity/duo_api_golang v0.0.0-20190308151101-6c680f768e74

|

||||

github.com/elazarl/go-bindata-assetfs v1.0.1-0.20200509193318-234c15e7648f

|

||||

github.com/fatih/color v1.9.0

|

||||

github.com/fatih/color v1.10.0

|

||||

github.com/fatih/structs v1.1.0

|

||||

github.com/fullsailor/pkcs7 v0.0.0-20190404230743-d7302db945fa

|

||||

github.com/ghodss/yaml v1.0.1-0.20190212211648-25d852aebe32

|

||||

|

|

@ -60,10 +60,10 @@ require (

|

|||

github.com/hashicorp/go-cleanhttp v0.5.2

|

||||

github.com/hashicorp/go-discover v0.0.0-20201029210230-738cb3105cd0

|

||||

github.com/hashicorp/go-gcp-common v0.6.0

|

||||

github.com/hashicorp/go-hclog v0.15.0

|

||||

github.com/hashicorp/go-hclog v0.16.0

|

||||

github.com/hashicorp/go-kms-wrapping v0.5.16

|

||||

github.com/hashicorp/go-memdb v1.0.2

|

||||

github.com/hashicorp/go-msgpack v0.5.5 // indirect

|

||||

github.com/hashicorp/go-msgpack v1.1.5 // indirect

|

||||

github.com/hashicorp/go-multierror v1.1.0

|

||||

github.com/hashicorp/go-raftchunking v0.6.3-0.20191002164813-7e9e8525653a

|

||||

github.com/hashicorp/go-retryablehttp v0.6.7

|

||||

|

|

@ -74,8 +74,8 @@ require (

|

|||

github.com/hashicorp/golang-lru v0.5.4

|

||||

github.com/hashicorp/hcl v1.0.1-vault

|

||||

github.com/hashicorp/nomad/api v0.0.0-20191220223628-edc62acd919d

|

||||

github.com/hashicorp/raft v1.2.0

|

||||

github.com/hashicorp/raft-autopilot v0.1.2

|

||||

github.com/hashicorp/raft v1.3.0

|

||||

github.com/hashicorp/raft-autopilot v0.1.3

|

||||

github.com/hashicorp/raft-boltdb/v2 v2.0.0-20210421194847-a7e34179d62c

|

||||

github.com/hashicorp/raft-snapshot v1.0.3

|

||||

github.com/hashicorp/serf v0.9.5 // indirect

|

||||

|

|

@ -113,7 +113,7 @@ require (

|

|||

github.com/kr/pretty v0.2.1

|

||||

github.com/kr/text v0.2.0

|

||||

github.com/lib/pq v1.8.0

|

||||

github.com/mattn/go-colorable v0.1.7

|

||||

github.com/mattn/go-colorable v0.1.8

|

||||

github.com/mholt/archiver v3.1.1+incompatible

|

||||

github.com/michaelklishin/rabbit-hole v0.0.0-20191008194146-93d9988f0cd5

|

||||

github.com/miekg/dns v1.1.40 // indirect

|

||||

|

|

@ -161,7 +161,8 @@ require (

|

|||

golang.org/x/crypto v0.0.0-20210220033148-5ea612d1eb83

|

||||

golang.org/x/net v0.0.0-20201110031124-69a78807bb2b

|

||||

golang.org/x/oauth2 v0.0.0-20200107190931-bf48bf16ab8d

|

||||

golang.org/x/sys v0.0.0-20210124154548-22da62e12c0c

|

||||

golang.org/x/sync v0.0.0-20210220032951-036812b2e83c // indirect

|

||||

golang.org/x/sys v0.0.0-20210426230700-d19ff857e887

|

||||

golang.org/x/text v0.3.5 // indirect

|

||||

golang.org/x/tools v0.0.0-20210101214203-2dba1e4ea05c

|

||||

google.golang.org/api v0.29.0

|

||||

|

|

|

|||

23

go.sum

|

|

@ -167,6 +167,8 @@ github.com/armon/go-metrics v0.3.0/go.mod h1:zXjbSimjXTd7vOpY8B0/2LpvNvDoXBuplAD

|

|||

github.com/armon/go-metrics v0.3.3/go.mod h1:4O98XIr/9W0sxpJ8UaYkvjk10Iff7SnFrb4QAOwNTFc=

|

||||

github.com/armon/go-metrics v0.3.4 h1:Xqf+7f2Vhl9tsqDYmXhnXInUdcrtgpRNpIA15/uldSc=

|

||||

github.com/armon/go-metrics v0.3.4/go.mod h1:4O98XIr/9W0sxpJ8UaYkvjk10Iff7SnFrb4QAOwNTFc=

|

||||

github.com/armon/go-metrics v0.3.7 h1:c/oCtWzYpboy6+6f6LjXRlyW7NwA2SWf+a9KMlHq/bM=

|

||||

github.com/armon/go-metrics v0.3.7/go.mod h1:4O98XIr/9W0sxpJ8UaYkvjk10Iff7SnFrb4QAOwNTFc=

|

||||

github.com/armon/go-proxyproto v0.0.0-20210323213023-7e956b284f0a h1:AP/vsCIvJZ129pdm9Ek7bH7yutN3hByqsMoNrWAxRQc=

|

||||

github.com/armon/go-proxyproto v0.0.0-20210323213023-7e956b284f0a/go.mod h1:QmP9hvJ91BbJmGVGSbutW19IC0Q9phDCLGaomwTJbgU=

|

||||

github.com/armon/go-radix v0.0.0-20180808171621-7fddfc383310/go.mod h1:ufUuZ+zHj4x4TnLV4JWEpy2hxWSpsRywHrMgIH9cCH8=

|

||||

|

|

@ -336,6 +338,8 @@ github.com/evanphx/json-patch v4.2.0+incompatible/go.mod h1:50XU6AFN0ol/bzJsmQLi

|

|||

github.com/fatih/color v1.7.0/go.mod h1:Zm6kSWBoL9eyXnKyktHP6abPY2pDugNf5KwzbycvMj4=

|

||||

github.com/fatih/color v1.9.0 h1:8xPHl4/q1VyqGIPif1F+1V3Y3lSmrq01EabUW3CoW5s=

|

||||

github.com/fatih/color v1.9.0/go.mod h1:eQcE1qtQxscV5RaZvpXrrb8Drkc3/DdQ+uUYCNjL+zU=

|

||||

github.com/fatih/color v1.10.0 h1:s36xzo75JdqLaaWoiEHk767eHiwo0598uUxyfiPkDsg=

|

||||

github.com/fatih/color v1.10.0/go.mod h1:ELkj/draVOlAH/xkhN6mQ50Qd0MPOk5AAr3maGEBuJM=

|

||||

github.com/fatih/structs v1.1.0 h1:Q7juDM0QtcnhCpeyLGQKyg4TOIghuNXrkL32pHAUMxo=

|

||||

github.com/fatih/structs v1.1.0/go.mod h1:9NiDSp5zOcgEDl+j00MP/WkGVPOlPRLejGD8Ga6PJ7M=

|

||||

github.com/form3tech-oss/jwt-go v3.2.2+incompatible h1:TcekIExNqud5crz4xD2pavyTgWiPvpYe4Xau31I0PRk=

|

||||

|

|

@ -584,6 +588,8 @@ github.com/hashicorp/go-hclog v0.12.0/go.mod h1:whpDNt7SSdeAju8AWKIWsul05p54N/39

|

|||

github.com/hashicorp/go-hclog v0.14.1/go.mod h1:whpDNt7SSdeAju8AWKIWsul05p54N/39EeqMAyrmvFQ=

|

||||

github.com/hashicorp/go-hclog v0.15.0 h1:qMuK0wxsoW4D0ddCCYwPSTm4KQv1X1ke3WmPWZ0Mvsk=

|

||||

github.com/hashicorp/go-hclog v0.15.0/go.mod h1:whpDNt7SSdeAju8AWKIWsul05p54N/39EeqMAyrmvFQ=

|

||||

github.com/hashicorp/go-hclog v0.16.0 h1:uCeOEwSWGMwhJUdpUjk+1cVKIEfGu2/1nFXukimi2MU=

|

||||

github.com/hashicorp/go-hclog v0.16.0/go.mod h1:whpDNt7SSdeAju8AWKIWsul05p54N/39EeqMAyrmvFQ=

|

||||

github.com/hashicorp/go-immutable-radix v1.0.0/go.mod h1:0y9vanUI8NX6FsYoO3zeMjhV/C5i9g4Q3DwcSNZ4P60=

|

||||

github.com/hashicorp/go-immutable-radix v1.1.0/go.mod h1:0y9vanUI8NX6FsYoO3zeMjhV/C5i9g4Q3DwcSNZ4P60=

|

||||

github.com/hashicorp/go-immutable-radix v1.3.0 h1:8exGP7ego3OmkfksihtSouGMZ+hQrhxx+FVELeXpVPE=

|

||||

|

|

@ -597,6 +603,8 @@ github.com/hashicorp/go-memdb v1.0.2/go.mod h1:I6dKdmYhZqU0RJSheVEWgTNWdVQH5QvTg

|

|||

github.com/hashicorp/go-msgpack v0.5.3/go.mod h1:ahLV/dePpqEmjfWmKiqvPkv/twdG7iPBM1vqhUKIvfM=

|

||||

github.com/hashicorp/go-msgpack v0.5.5 h1:i9R9JSrqIz0QVLz3sz+i3YJdT7TTSLcfLLzJi9aZTuI=

|

||||

github.com/hashicorp/go-msgpack v0.5.5/go.mod h1:ahLV/dePpqEmjfWmKiqvPkv/twdG7iPBM1vqhUKIvfM=

|

||||

github.com/hashicorp/go-msgpack v1.1.5 h1:9byZdVjKTe5mce63pRVNP1L7UAmdHOTEMGehn6KvJWs=

|

||||

github.com/hashicorp/go-msgpack v1.1.5/go.mod h1:gWVc3sv/wbDmR3rQsj1CAktEZzoz1YNK9NfGLXJ69/4=

|

||||

github.com/hashicorp/go-multierror v1.0.0/go.mod h1:dHtQlpGsu+cZNNAkkCN/P3hoUDHhCYQXV3UM06sGGrk=

|

||||

github.com/hashicorp/go-multierror v1.1.0 h1:B9UzwGQJehnUY1yNrnwREHc3fGbC2xefo8g4TbElacI=

|

||||

github.com/hashicorp/go-multierror v1.1.0/go.mod h1:spPvp8C1qA32ftKqdAHm4hHTbPw+vmowP0z+KUhOZdA=

|

||||

|

|

@ -654,12 +662,14 @@ github.com/hashicorp/raft v1.1.0/go.mod h1:4Ak7FSPnuvmb0GV6vgIAJ4vYT4bek9bb6Q+7H

|

|||

github.com/hashicorp/raft v1.1.2-0.20191002163536-9c6bd3e3eb17/go.mod h1:vPAJM8Asw6u8LxC3eJCUZmRP/E4QmUGE1R7g7k8sG/8=

|

||||

github.com/hashicorp/raft v1.2.0 h1:mHzHIrF0S91d3A7RPBvuqkgB4d/7oFJZyvf1Q4m7GA0=

|

||||

github.com/hashicorp/raft v1.2.0/go.mod h1:vPAJM8Asw6u8LxC3eJCUZmRP/E4QmUGE1R7g7k8sG/8=

|

||||

github.com/hashicorp/raft v1.3.0 h1:Wox4J4R7J2FOJLtTa6hdk0VJfiNUSP32pYoYR738bkE=

|

||||

github.com/hashicorp/raft v1.3.0/go.mod h1:4Ak7FSPnuvmb0GV6vgIAJ4vYT4bek9bb6Q+7HVbyzqM=

|

||||

github.com/hashicorp/raft-autopilot v0.1.2 h1:yeqdUjWLjVJkBM+mcVxqwxi+w+aHsb9cEON2dz69OCs=

|

||||

github.com/hashicorp/raft-autopilot v0.1.2/go.mod h1:Af4jZBwaNOI+tXfIqIdbcAnh/UyyqIMj/pOISIfhArw=

|

||||

github.com/hashicorp/raft-autopilot v0.1.3 h1:Y+5jWKTFABJhCrpVwGpGjti2LzwQSzivoqd2wM6JWGw=

|

||||

github.com/hashicorp/raft-autopilot v0.1.3/go.mod h1:Af4jZBwaNOI+tXfIqIdbcAnh/UyyqIMj/pOISIfhArw=

|

||||

github.com/hashicorp/raft-boltdb v0.0.0-20171010151810-6e5ba93211ea h1:xykPFhrBAS2J0VBzVa5e80b5ZtYuNQtgXjN40qBZlD4=

|

||||

github.com/hashicorp/raft-boltdb v0.0.0-20171010151810-6e5ba93211ea/go.mod h1:pNv7Wc3ycL6F5oOWn+tPGo2gWD4a5X+yp/ntwdKLjRk=

|

||||

github.com/hashicorp/raft-boltdb/v2 v2.0.0-20210409134258-03c10cc3d4ea h1:pXD01QLdHmn4Ij82g1vksWbZXwSH6il7Svrm/rdUk18=

|

||||

github.com/hashicorp/raft-boltdb/v2 v2.0.0-20210409134258-03c10cc3d4ea/go.mod h1:kiPs9g148eLShc2TYagUAyKDnD+dH9U+CQKsXzlY9xo=

|

||||

github.com/hashicorp/raft-boltdb/v2 v2.0.0-20210421194847-a7e34179d62c h1:oiKun9QlrOz5yQxMZJ3tf1kWtFYuKSJzxzEDxDPevj4=

|

||||

github.com/hashicorp/raft-boltdb/v2 v2.0.0-20210421194847-a7e34179d62c/go.mod h1:kiPs9g148eLShc2TYagUAyKDnD+dH9U+CQKsXzlY9xo=

|

||||

github.com/hashicorp/raft-snapshot v1.0.3 h1:lTgBBGMFcuKBTwHqWZ4r0TLzNsqo/OByCga/kM6F0uM=

|

||||

|

|

@ -836,6 +846,8 @@ github.com/mattn/go-colorable v0.1.4/go.mod h1:U0ppj6V5qS13XJ6of8GYAs25YV2eR4EVc

|

|||

github.com/mattn/go-colorable v0.1.6/go.mod h1:u6P/XSegPjTcexA+o6vUJrdnUu04hMope9wVRipJSqc=

|

||||

github.com/mattn/go-colorable v0.1.7 h1:bQGKb3vps/j0E9GfJQ03JyhRuxsvdAanXlT9BTw3mdw=

|

||||

github.com/mattn/go-colorable v0.1.7/go.mod h1:u6P/XSegPjTcexA+o6vUJrdnUu04hMope9wVRipJSqc=

|

||||

github.com/mattn/go-colorable v0.1.8 h1:c1ghPdyEDarC70ftn0y+A/Ee++9zz8ljHG1b13eJ0s8=

|

||||

github.com/mattn/go-colorable v0.1.8/go.mod h1:u6P/XSegPjTcexA+o6vUJrdnUu04hMope9wVRipJSqc=

|

||||

github.com/mattn/go-ieproxy v0.0.1 h1:qiyop7gCflfhwCzGyeT0gro3sF9AIg9HU98JORTkqfI=

|

||||

github.com/mattn/go-ieproxy v0.0.1/go.mod h1:pYabZ6IHcRpFh7vIaLfK7rdcWgFEb3SFJ6/gNWuh88E=

|

||||

github.com/mattn/go-isatty v0.0.3/go.mod h1:M+lRXTBqGeGNdLjl/ufCoiOlB5xdOkqRJdNxMWT7Zi4=

|

||||

|

|

@ -1359,6 +1371,8 @@ golang.org/x/sync v0.0.0-20190911185100-cd5d95a43a6e/go.mod h1:RxMgew5VJxzue5/jJ

|

|||

golang.org/x/sync v0.0.0-20200317015054-43a5402ce75a/go.mod h1:RxMgew5VJxzue5/jJTE5uejpjVlOe/izrB70Jof72aM=

|

||||

golang.org/x/sync v0.0.0-20201020160332-67f06af15bc9 h1:SQFwaSi55rU7vdNs9Yr0Z324VNlrF+0wMqRXT4St8ck=

|

||||

golang.org/x/sync v0.0.0-20201020160332-67f06af15bc9/go.mod h1:RxMgew5VJxzue5/jJTE5uejpjVlOe/izrB70Jof72aM=

|

||||

golang.org/x/sync v0.0.0-20210220032951-036812b2e83c h1:5KslGYwFpkhGh+Q16bwMP3cOontH8FOep7tGV86Y7SQ=

|

||||

golang.org/x/sync v0.0.0-20210220032951-036812b2e83c/go.mod h1:RxMgew5VJxzue5/jJTE5uejpjVlOe/izrB70Jof72aM=

|

||||

golang.org/x/sys v0.0.0-20170830134202-bb24a47a89ea/go.mod h1:STP8DvDyc/dI5b8T5hshtkjS+E42TnysNCUPdjciGhY=

|

||||

golang.org/x/sys v0.0.0-20180823144017-11551d06cbcc/go.mod h1:STP8DvDyc/dI5b8T5hshtkjS+E42TnysNCUPdjciGhY=

|

||||

golang.org/x/sys v0.0.0-20180830151530-49385e6e1522/go.mod h1:STP8DvDyc/dI5b8T5hshtkjS+E42TnysNCUPdjciGhY=

|

||||

|

|

@ -1425,6 +1439,10 @@ golang.org/x/sys v0.0.0-20200930185726-fdedc70b468f/go.mod h1:h1NjWce9XRLGQEsW7w

|

|||

golang.org/x/sys v0.0.0-20201201145000-ef89a241ccb3/go.mod h1:h1NjWce9XRLGQEsW7wpKNCjG9DtNlClVuFLEZdDNbEs=

|

||||

golang.org/x/sys v0.0.0-20210124154548-22da62e12c0c h1:VwygUrnw9jn88c4u8GD3rZQbqrP/tgas88tPUbBxQrk=

|

||||

golang.org/x/sys v0.0.0-20210124154548-22da62e12c0c/go.mod h1:h1NjWce9XRLGQEsW7wpKNCjG9DtNlClVuFLEZdDNbEs=

|

||||

golang.org/x/sys v0.0.0-20210423082822-04245dca01da h1:b3NXsE2LusjYGGjL5bxEVZZORm/YEFFrWFjR8eFrw/c=

|

||||

golang.org/x/sys v0.0.0-20210423082822-04245dca01da/go.mod h1:h1NjWce9XRLGQEsW7wpKNCjG9DtNlClVuFLEZdDNbEs=

|

||||

golang.org/x/sys v0.0.0-20210426230700-d19ff857e887 h1:dXfMednGJh/SUUFjTLsWJz3P+TQt9qnR11GgeI3vWKs=

|

||||

golang.org/x/sys v0.0.0-20210426230700-d19ff857e887/go.mod h1:h1NjWce9XRLGQEsW7wpKNCjG9DtNlClVuFLEZdDNbEs=

|

||||

golang.org/x/term v0.0.0-20201117132131-f5c789dd3221 h1:/ZHdbVpdR/jk3g30/d4yUL0JU9kksj8+F/bnQUVLGDM=

|

||||

golang.org/x/term v0.0.0-20201117132131-f5c789dd3221/go.mod h1:Nr5EML6q2oocZ2LXRh80K7BxOlk5/8JxuGnuhpl+muw=

|

||||

golang.org/x/text v0.0.0-20160726164857-2910a502d2bf/go.mod h1:NqM8EUOU14njkJ3fqMW+pc6Ldnwhi/IjpwHt7yyuwOQ=

|

||||

|

|

@ -1458,6 +1476,7 @@ golang.org/x/tools v0.0.0-20190328211700-ab21143f2384/go.mod h1:LCzVGOaR6xXOjkQ3

|

|||

golang.org/x/tools v0.0.0-20190329151228-23e29df326fe/go.mod h1:LCzVGOaR6xXOjkQ3onu1FJEFr0SW1gC7cKk1uF8kGRs=

|

||||

golang.org/x/tools v0.0.0-20190416151739-9c9e1878f421/go.mod h1:LCzVGOaR6xXOjkQ3onu1FJEFr0SW1gC7cKk1uF8kGRs=

|

||||

golang.org/x/tools v0.0.0-20190420181800-aa740d480789/go.mod h1:LCzVGOaR6xXOjkQ3onu1FJEFr0SW1gC7cKk1uF8kGRs=

|

||||

golang.org/x/tools v0.0.0-20190424220101-1e8e1cfdf96b/go.mod h1:RgjU9mgBXZiqYHBnxXauZ1Gv1EHHAz9KjViQ78xBX0Q=

|

||||

golang.org/x/tools v0.0.0-20190425150028-36563e24a262/go.mod h1:RgjU9mgBXZiqYHBnxXauZ1Gv1EHHAz9KjViQ78xBX0Q=

|

||||

golang.org/x/tools v0.0.0-20190506145303-2d16b83fe98c/go.mod h1:RgjU9mgBXZiqYHBnxXauZ1Gv1EHHAz9KjViQ78xBX0Q=

|

||||

golang.org/x/tools v0.0.0-20190524140312-2c0ae7006135/go.mod h1:RgjU9mgBXZiqYHBnxXauZ1Gv1EHHAz9KjViQ78xBX0Q=

|

||||

|

|

|

|||

|

|

@ -381,6 +381,7 @@ func (d *Delegate) KnownServers() map[raft.ServerID]*autopilot.Server {

|

|||

RaftVersion: raft.ProtocolVersionMax,

|

||||

NodeStatus: autopilot.NodeAlive,

|

||||

Ext: d.autopilotServerExt("voter"),

|

||||

IsLeader: true,

|

||||

}

|

||||

|

||||

return ret

|

||||

|

|

|

|||

|

|

@ -228,12 +228,12 @@ func (m *Metrics) allowMetric(key []string, labels []Label) (bool, []Label) {

|

|||

func (m *Metrics) collectStats() {

|

||||

for {

|

||||

time.Sleep(m.ProfileInterval)

|

||||

m.emitRuntimeStats()

|

||||

m.EmitRuntimeStats()

|

||||

}

|

||||

}

|

||||

|

||||

// Emits various runtime statsitics

|

||||

func (m *Metrics) emitRuntimeStats() {

|

||||

func (m *Metrics) EmitRuntimeStats() {

|

||||

// Export number of Goroutines

|

||||

numRoutines := runtime.NumGoroutine()

|

||||

m.SetGauge([]string{"runtime", "num_goroutines"}, float32(numRoutines))

|

||||

|

|

|

|||

|

|

@ -28,6 +28,26 @@ type PrometheusOpts struct {

|

|||

// Expiration is the duration a metric is valid for, after which it will be

|

||||

// untracked. If the value is zero, a metric is never expired.

|

||||

Expiration time.Duration

|

||||

Registerer prometheus.Registerer

|

||||

|

||||

// Gauges, Summaries, and Counters allow us to pre-declare metrics by giving

|

||||

// their Name, Help, and ConstLabels to the PrometheusSink when it is created.

|

||||

// Metrics declared in this way will be initialized at zero and will not be

|

||||

// deleted or altered when their expiry is reached.

|

||||

//

|

||||

// Ex: PrometheusOpts{

|

||||

// Expiration: 10 * time.Second,

|

||||

// Gauges: []GaugeDefinition{

|

||||

// {

|

||||

// Name: []string{ "application", "component", "measurement"},

|

||||

// Help: "application_component_measurement provides an example of how to declare static metrics",

|

||||

// ConstLabels: []metrics.Label{ { Name: "my_label", Value: "does_not_change" }, },

|

||||

// },

|

||||

// },

|

||||

// }

|

||||

GaugeDefinitions []GaugeDefinition

|

||||

SummaryDefinitions []SummaryDefinition

|

||||

CounterDefinitions []CounterDefinition

|

||||

}

|

||||

|

||||

type PrometheusSink struct {

|

||||

|

|

@ -36,21 +56,47 @@ type PrometheusSink struct {

|

|||

summaries sync.Map

|

||||

counters sync.Map

|

||||

expiration time.Duration

|

||||

help map[string]string

|

||||

}

|

||||

|

||||

type PrometheusGauge struct {

|

||||

// GaugeDefinition can be provided to PrometheusOpts to declare a constant gauge that is not deleted on expiry.

|

||||

type GaugeDefinition struct {

|

||||

Name []string

|

||||

ConstLabels []metrics.Label

|

||||

Help string

|

||||

}

|

||||

|

||||

type gauge struct {

|

||||

prometheus.Gauge

|

||||

updatedAt time.Time

|

||||

// canDelete is set if the metric is created during runtime so we know it's ephemeral and can delete it on expiry.

|

||||

canDelete bool

|

||||

}

|

||||

|

||||

type PrometheusSummary struct {

|

||||

// SummaryDefinition can be provided to PrometheusOpts to declare a constant summary that is not deleted on expiry.

|

||||

type SummaryDefinition struct {

|

||||

Name []string

|

||||

ConstLabels []metrics.Label

|

||||

Help string

|

||||

}

|

||||

|

||||

type summary struct {

|

||||

prometheus.Summary

|

||||

updatedAt time.Time

|

||||

canDelete bool

|

||||

}

|

||||

|

||||

type PrometheusCounter struct {

|

||||

// CounterDefinition can be provided to PrometheusOpts to declare a constant counter that is not deleted on expiry.

|

||||

type CounterDefinition struct {

|

||||

Name []string

|

||||

ConstLabels []metrics.Label

|

||||

Help string

|

||||

}

|

||||

|

||||

type counter struct {

|

||||

prometheus.Counter

|

||||

updatedAt time.Time

|

||||

canDelete bool

|

||||

}

|

||||

|

||||

// NewPrometheusSink creates a new PrometheusSink using the default options.

|

||||

|

|

@ -65,9 +111,19 @@ func NewPrometheusSinkFrom(opts PrometheusOpts) (*PrometheusSink, error) {

|

|||

summaries: sync.Map{},

|

||||

counters: sync.Map{},

|

||||

expiration: opts.Expiration,

|

||||

help: make(map[string]string),

|

||||

}

|

||||

|

||||

return sink, prometheus.Register(sink)

|

||||

initGauges(&sink.gauges, opts.GaugeDefinitions, sink.help)

|

||||

initSummaries(&sink.summaries, opts.SummaryDefinitions, sink.help)

|

||||

initCounters(&sink.counters, opts.CounterDefinitions, sink.help)

|

||||

|

||||

reg := opts.Registerer

|

||||

if reg == nil {

|

||||

reg = prometheus.DefaultRegisterer

|

||||

}

|

||||

|

||||

return sink, reg.Register(sink)

|

||||

}

|

||||

|

||||

// Describe is needed to meet the Collector interface.

|

||||

|

|

@ -81,46 +137,107 @@ func (p *PrometheusSink) Describe(c chan<- *prometheus.Desc) {

|

|||

// logic to clean up ephemeral metrics if their value haven't been set for a

|

||||

// duration exceeding our allowed expiration time.

|

||||

func (p *PrometheusSink) Collect(c chan<- prometheus.Metric) {

|

||||

p.collectAtTime(c, time.Now())

|

||||

}

|

||||

|

||||

// collectAtTime allows internal testing of the expiry based logic here without

|

||||

// mocking clocks or making tests timing sensitive.

|

||||

func (p *PrometheusSink) collectAtTime(c chan<- prometheus.Metric, t time.Time) {

|

||||

expire := p.expiration != 0

|

||||

now := time.Now()

|

||||

p.gauges.Range(func(k, v interface{}) bool {

|

||||

if v != nil {

|

||||

lastUpdate := v.(*PrometheusGauge).updatedAt

|

||||

if expire && lastUpdate.Add(p.expiration).Before(now) {

|

||||

if v == nil {

|

||||

return true

|

||||

}

|

||||

g := v.(*gauge)

|

||||

lastUpdate := g.updatedAt

|

||||

if expire && lastUpdate.Add(p.expiration).Before(t) {

|

||||

if g.canDelete {

|

||||

p.gauges.Delete(k)

|

||||

} else {

|

||||

v.(*PrometheusGauge).Collect(c)

|

||||

return true

|

||||

}

|

||||

}

|

||||

g.Collect(c)

|

||||

return true

|

||||

})

|

||||

p.summaries.Range(func(k, v interface{}) bool {

|

||||

if v != nil {

|

||||

lastUpdate := v.(*PrometheusSummary).updatedAt

|

||||

if expire && lastUpdate.Add(p.expiration).Before(now) {

|

||||

if v == nil {

|

||||

return true

|

||||

}

|

||||

s := v.(*summary)

|

||||

lastUpdate := s.updatedAt

|

||||

if expire && lastUpdate.Add(p.expiration).Before(t) {

|

||||

if s.canDelete {

|

||||

p.summaries.Delete(k)

|

||||

} else {

|

||||

v.(*PrometheusSummary).Collect(c)

|

||||

return true

|

||||

}

|

||||

}

|

||||

s.Collect(c)

|

||||

return true

|

||||

})

|

||||

p.counters.Range(func(k, v interface{}) bool {

|

||||

if v != nil {

|

||||

lastUpdate := v.(*PrometheusCounter).updatedAt

|

||||

if expire && lastUpdate.Add(p.expiration).Before(now) {

|

||||

if v == nil {

|

||||

return true

|

||||

}

|

||||

count := v.(*counter)

|

||||

lastUpdate := count.updatedAt

|

||||

if expire && lastUpdate.Add(p.expiration).Before(t) {

|

||||

if count.canDelete {

|

||||

p.counters.Delete(k)

|

||||

} else {

|

||||

v.(*PrometheusCounter).Collect(c)

|

||||

return true

|

||||

}

|

||||

}

|

||||

count.Collect(c)

|

||||

return true

|

||||

})

|

||||

}

|

||||

|

||||

func initGauges(m *sync.Map, gauges []GaugeDefinition, help map[string]string) {

|

||||

for _, g := range gauges {

|

||||

key, hash := flattenKey(g.Name, g.ConstLabels)

|

||||

help[fmt.Sprintf("gauge.%s", key)] = g.Help

|

||||

pG := prometheus.NewGauge(prometheus.GaugeOpts{

|

||||

Name: key,

|

||||

Help: g.Help,

|

||||

ConstLabels: prometheusLabels(g.ConstLabels),

|

||||

})

|

||||

m.Store(hash, &gauge{Gauge: pG})

|

||||

}

|

||||

return

|

||||

}

|

||||

|

||||

func initSummaries(m *sync.Map, summaries []SummaryDefinition, help map[string]string) {

|

||||

for _, s := range summaries {

|

||||

key, hash := flattenKey(s.Name, s.ConstLabels)

|

||||

help[fmt.Sprintf("summary.%s", key)] = s.Help

|

||||

pS := prometheus.NewSummary(prometheus.SummaryOpts{

|

||||

Name: key,

|

||||

Help: s.Help,

|

||||

MaxAge: 10 * time.Second,

|

||||

ConstLabels: prometheusLabels(s.ConstLabels),

|

||||

Objectives: map[float64]float64{0.5: 0.05, 0.9: 0.01, 0.99: 0.001},

|

||||

})

|

||||

m.Store(hash, &summary{Summary: pS})

|

||||

}

|

||||

return

|

||||

}

|

||||

|

||||

func initCounters(m *sync.Map, counters []CounterDefinition, help map[string]string) {

|

||||

for _, c := range counters {

|

||||

key, hash := flattenKey(c.Name, c.ConstLabels)

|

||||

help[fmt.Sprintf("counter.%s", key)] = c.Help

|

||||

pC := prometheus.NewCounter(prometheus.CounterOpts{

|

||||

Name: key,

|

||||

Help: c.Help,

|

||||

ConstLabels: prometheusLabels(c.ConstLabels),

|

||||

})

|

||||

m.Store(hash, &counter{Counter: pC})

|

||||

}

|

||||

return

|

||||

}

|

||||

|

||||

var forbiddenChars = regexp.MustCompile("[ .=\\-/]")

|

||||

|

||||

func (p *PrometheusSink) flattenKey(parts []string, labels []metrics.Label) (string, string) {

|

||||

func flattenKey(parts []string, labels []metrics.Label) (string, string) {

|

||||

key := strings.Join(parts, "_")

|

||||

key = forbiddenChars.ReplaceAllString(key, "_")

|

||||

|

||||

|

|

@ -145,7 +262,7 @@ func (p *PrometheusSink) SetGauge(parts []string, val float32) {

|

|||

}

|

||||

|

||||

func (p *PrometheusSink) SetGaugeWithLabels(parts []string, val float32, labels []metrics.Label) {

|

||||

key, hash := p.flattenKey(parts, labels)

|

||||

key, hash := flattenKey(parts, labels)

|

||||

pg, ok := p.gauges.Load(hash)

|

||||

|

||||

// The sync.Map underlying gauges stores pointers to our structs. If we need to make updates,

|

||||

|

|

@ -155,19 +272,28 @@ func (p *PrometheusSink) SetGaugeWithLabels(parts []string, val float32, labels

|

|||

// so there's no issues there. It's possible for racy updates to occur to the updatedAt

|

||||

// value, but since we're always setting it to time.Now(), it doesn't really matter.

|

||||

if ok {

|

||||

localGauge := *pg.(*PrometheusGauge)

|

||||

localGauge := *pg.(*gauge)

|

||||

localGauge.Set(float64(val))

|

||||

localGauge.updatedAt = time.Now()

|

||||

p.gauges.Store(hash, &localGauge)

|

||||

|

||||

// The gauge does not exist, create the gauge and allow it to be deleted

|

||||

} else {

|

||||

help := key

|

||||

existingHelp, ok := p.help[fmt.Sprintf("gauge.%s", key)]

|

||||

if ok {

|

||||

help = existingHelp

|

||||

}

|

||||

g := prometheus.NewGauge(prometheus.GaugeOpts{

|

||||

Name: key,

|

||||

Help: key,

|

||||

Help: help,

|

||||

ConstLabels: prometheusLabels(labels),

|

||||

})

|

||||

g.Set(float64(val))

|

||||

pg = &PrometheusGauge{

|

||||

g, time.Now(),

|

||||

pg = &gauge{

|

||||

Gauge: g,

|

||||

updatedAt: time.Now(),

|

||||

canDelete: true,

|

||||

}

|

||||

p.gauges.Store(hash, pg)

|

||||

}

|

||||

|

|

@ -178,25 +304,35 @@ func (p *PrometheusSink) AddSample(parts []string, val float32) {

|

|||

}

|

||||

|

||||

func (p *PrometheusSink) AddSampleWithLabels(parts []string, val float32, labels []metrics.Label) {

|

||||

key, hash := p.flattenKey(parts, labels)

|

||||

key, hash := flattenKey(parts, labels)

|

||||

ps, ok := p.summaries.Load(hash)

|

||||

|

||||

// Does the summary already exist for this sample type?

|

||||

if ok {

|

||||

localSummary := *ps.(*PrometheusSummary)

|

||||

localSummary := *ps.(*summary)

|

||||

localSummary.Observe(float64(val))

|

||||

localSummary.updatedAt = time.Now()

|

||||

p.summaries.Store(hash, &localSummary)

|

||||

|

||||

// The summary does not exist, create the Summary and allow it to be deleted

|

||||

} else {

|

||||

help := key

|

||||

existingHelp, ok := p.help[fmt.Sprintf("summary.%s", key)]

|

||||

if ok {

|

||||

help = existingHelp

|

||||

}

|

||||

s := prometheus.NewSummary(prometheus.SummaryOpts{

|

||||

Name: key,

|

||||

Help: key,

|

||||

Help: help,

|

||||

MaxAge: 10 * time.Second,

|

||||

ConstLabels: prometheusLabels(labels),

|

||||

Objectives: map[float64]float64{0.5: 0.05, 0.9: 0.01, 0.99: 0.001},

|

||||

})

|

||||

s.Observe(float64(val))

|

||||

ps = &PrometheusSummary{

|

||||

s, time.Now(),

|

||||

ps = &summary{

|

||||

Summary: s,

|

||||

updatedAt: time.Now(),

|

||||

canDelete: true,

|

||||

}

|

||||

p.summaries.Store(hash, ps)

|

||||

}

|

||||

|

|

@ -213,28 +349,40 @@ func (p *PrometheusSink) IncrCounter(parts []string, val float32) {

|

|||

}

|

||||

|

||||

func (p *PrometheusSink) IncrCounterWithLabels(parts []string, val float32, labels []metrics.Label) {

|

||||

key, hash := p.flattenKey(parts, labels)

|

||||

key, hash := flattenKey(parts, labels)

|

||||

pc, ok := p.counters.Load(hash)

|

||||

|

||||

// Does the counter exist?

|

||||

if ok {

|

||||

localCounter := *pc.(*PrometheusCounter)

|

||||

localCounter := *pc.(*counter)

|

||||

localCounter.Add(float64(val))

|

||||

localCounter.updatedAt = time.Now()

|

||||

p.counters.Store(hash, &localCounter)

|

||||

|

||||

// The counter does not exist yet, create it and allow it to be deleted

|

||||

} else {

|

||||

help := key

|

||||

existingHelp, ok := p.help[fmt.Sprintf("counter.%s", key)]

|

||||

if ok {

|

||||

help = existingHelp

|

||||

}

|

||||

c := prometheus.NewCounter(prometheus.CounterOpts{

|

||||

Name: key,

|

||||

Help: key,

|

||||

Help: help,

|

||||

ConstLabels: prometheusLabels(labels),

|

||||

})

|

||||

c.Add(float64(val))

|

||||

pc = &PrometheusCounter{

|

||||

c, time.Now(),

|

||||

pc = &counter{

|

||||

Counter: c,

|

||||

updatedAt: time.Now(),

|

||||

canDelete: true,

|

||||

}

|

||||

p.counters.Store(hash, pc)

|

||||

}

|

||||

}

|

||||

|

||||

// PrometheusPushSink wraps a normal prometheus sink and provides an address and facilities to export it to an address

|

||||

// on an interval.

|

||||

type PrometheusPushSink struct {

|

||||

*PrometheusSink

|

||||

pusher *push.Pusher

|

||||

|

|

@ -243,7 +391,8 @@ type PrometheusPushSink struct {

|

|||

stopChan chan struct{}

|

||||

}

|

||||

|

||||

func NewPrometheusPushSink(address string, pushIterval time.Duration, name string) (*PrometheusPushSink, error) {

|

||||

// NewPrometheusPushSink creates a PrometheusPushSink by taking an address, interval, and destination name.

|

||||

func NewPrometheusPushSink(address string, pushInterval time.Duration, name string) (*PrometheusPushSink, error) {

|

||||

promSink := &PrometheusSink{

|

||||

gauges: sync.Map{},

|

||||

summaries: sync.Map{},

|

||||

|

|

@ -257,7 +406,7 @@ func NewPrometheusPushSink(address string, pushIterval time.Duration, name strin

|

|||

promSink,

|

||||

pusher,

|

||||

address,

|

||||

pushIterval,

|

||||

pushInterval,

|

||||

make(chan struct{}),

|

||||

}

|

||||

|

||||

|

|

|

|||

|

|

@ -6,7 +6,7 @@ import (

|

|||

"sync/atomic"

|

||||

"time"

|

||||

|

||||

"github.com/hashicorp/go-immutable-radix"

|

||||

iradix "github.com/hashicorp/go-immutable-radix"

|

||||

)

|

||||

|

||||

// Config is used to configure metrics settings

|

||||

|

|

@ -48,6 +48,11 @@ func init() {

|

|||

globalMetrics.Store(&Metrics{sink: &BlackholeSink{}})

|

||||

}

|

||||

|

||||

// Default returns the shared global metrics instance.

|

||||

func Default() *Metrics {

|

||||

return globalMetrics.Load().(*Metrics)

|

||||

}

|

||||

|

||||

// DefaultConfig provides a sane default configuration

|

||||

func DefaultConfig(serviceName string) *Config {

|

||||

c := &Config{

|

||||

|

|

|

|||

|

|

@ -1,20 +1,11 @@

|

|||

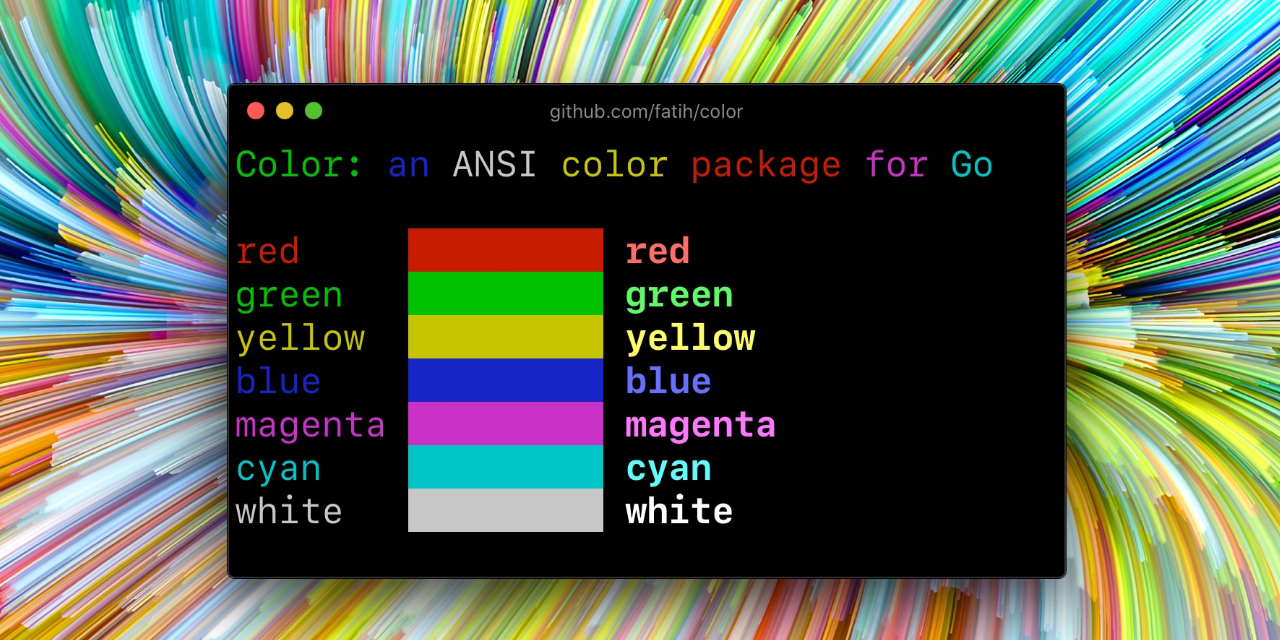

# Archived project. No maintenance.

|

||||

|

||||

This project is not maintained anymore and is archived. Feel free to fork and

|

||||

make your own changes if needed. For more detail read my blog post: [Taking an indefinite sabbatical from my projects](https://arslan.io/2018/10/09/taking-an-indefinite-sabbatical-from-my-projects/)

|

||||

|

||||

Thanks to everyone for their valuable feedback and contributions.

|

||||

|

||||

|

||||

# Color [](https://godoc.org/github.com/fatih/color)

|

||||

# color [](https://github.com/fatih/color/actions) [](https://pkg.go.dev/github.com/fatih/color)

|

||||

|

||||

Color lets you use colorized outputs in terms of [ANSI Escape

|

||||

Codes](http://en.wikipedia.org/wiki/ANSI_escape_code#Colors) in Go (Golang). It

|

||||

has support for Windows too! The API can be used in several ways, pick one that

|

||||

suits you.

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

## Install

|

||||

|

|

|

|||

|

|

@ -3,6 +3,6 @@ module github.com/fatih/color

|

|||

go 1.13

|

||||

|

||||

require (

|

||||

github.com/mattn/go-colorable v0.1.4

|

||||

github.com/mattn/go-isatty v0.0.11

|

||||

github.com/mattn/go-colorable v0.1.8

|

||||

github.com/mattn/go-isatty v0.0.12

|

||||

)

|

||||

|

|

|

|||

|

|

@ -1,8 +1,7 @@

|

|||

github.com/mattn/go-colorable v0.1.4 h1:snbPLB8fVfU9iwbbo30TPtbLRzwWu6aJS6Xh4eaaviA=

|

||||

github.com/mattn/go-colorable v0.1.4/go.mod h1:U0ppj6V5qS13XJ6of8GYAs25YV2eR4EVcfRqFIhoBtE=

|

||||

github.com/mattn/go-isatty v0.0.8/go.mod h1:Iq45c/XA43vh69/j3iqttzPXn0bhXyGjM0Hdxcsrc5s=

|

||||

github.com/mattn/go-isatty v0.0.11 h1:FxPOTFNqGkuDUGi3H/qkUbQO4ZiBa2brKq5r0l8TGeM=

|

||||

github.com/mattn/go-isatty v0.0.11/go.mod h1:PhnuNfih5lzO57/f3n+odYbM4JtupLOxQOAqxQCu2WE=

|

||||

golang.org/x/sys v0.0.0-20190222072716-a9d3bda3a223/go.mod h1:STP8DvDyc/dI5b8T5hshtkjS+E42TnysNCUPdjciGhY=

|

||||

golang.org/x/sys v0.0.0-20191026070338-33540a1f6037 h1:YyJpGZS1sBuBCzLAR1VEpK193GlqGZbnPFnPV/5Rsb4=

|

||||

golang.org/x/sys v0.0.0-20191026070338-33540a1f6037/go.mod h1:h1NjWce9XRLGQEsW7wpKNCjG9DtNlClVuFLEZdDNbEs=

|

||||

github.com/mattn/go-colorable v0.1.8 h1:c1ghPdyEDarC70ftn0y+A/Ee++9zz8ljHG1b13eJ0s8=

|

||||

github.com/mattn/go-colorable v0.1.8/go.mod h1:u6P/XSegPjTcexA+o6vUJrdnUu04hMope9wVRipJSqc=

|

||||

github.com/mattn/go-isatty v0.0.12 h1:wuysRhFDzyxgEmMf5xjvJ2M9dZoWAXNNr5LSBS7uHXY=

|

||||

github.com/mattn/go-isatty v0.0.12/go.mod h1:cbi8OIDigv2wuxKPP5vlRcQ1OAZbq2CE4Kysco4FUpU=

|

||||

golang.org/x/sys v0.0.0-20200116001909-b77594299b42/go.mod h1:h1NjWce9XRLGQEsW7wpKNCjG9DtNlClVuFLEZdDNbEs=

|

||||

golang.org/x/sys v0.0.0-20200223170610-d5e6a3e2c0ae h1:/WDfKMnPU+m5M4xB+6x4kaepxRw6jWvR5iDRdvjHgy8=

|

||||

golang.org/x/sys v0.0.0-20200223170610-d5e6a3e2c0ae/go.mod h1:h1NjWce9XRLGQEsW7wpKNCjG9DtNlClVuFLEZdDNbEs=

|

||||

|

|

|

|||

|

|

@ -18,8 +18,13 @@ type interceptLogger struct {

|

|||

}

|

||||

|

||||

func NewInterceptLogger(opts *LoggerOptions) InterceptLogger {

|

||||

l := newLogger(opts)

|

||||

if l.callerOffset > 0 {

|

||||

// extra frames for interceptLogger.{Warn,Info,Log,etc...}, and interceptLogger.log

|

||||

l.callerOffset += 2

|

||||

}

|

||||

intercept := &interceptLogger{

|

||||

Logger: New(opts),

|

||||

Logger: l,

|

||||

mu: new(sync.Mutex),

|

||||

sinkCount: new(int32),

|

||||

Sinks: make(map[SinkAdapter]struct{}),

|

||||

|

|

@ -31,6 +36,14 @@ func NewInterceptLogger(opts *LoggerOptions) InterceptLogger {

|

|||

}

|

||||

|

||||

func (i *interceptLogger) Log(level Level, msg string, args ...interface{}) {

|

||||

i.log(level, msg, args...)

|

||||

}

|

||||

|

||||

// log is used to make the caller stack frame lookup consistent. If Warn,Info,etc

|

||||

// all called Log then direct calls to Log would have a different stack frame

|

||||

// depth. By having all the methods call the same helper we ensure the stack

|

||||

// frame depth is the same.

|

||||

func (i *interceptLogger) log(level Level, msg string, args ...interface{}) {

|

||||

i.Logger.Log(level, msg, args...)

|

||||

if atomic.LoadInt32(i.sinkCount) == 0 {

|

||||

return

|

||||

|

|

@ -45,72 +58,27 @@ func (i *interceptLogger) Log(level Level, msg string, args ...interface{}) {

|

|||

|

||||

// Emit the message and args at TRACE level to log and sinks

|

||||

func (i *interceptLogger) Trace(msg string, args ...interface{}) {

|

||||

i.Logger.Trace(msg, args...)

|

||||

if atomic.LoadInt32(i.sinkCount) == 0 {

|

||||

return

|

||||

}

|

||||

|

||||

i.mu.Lock()

|

||||

defer i.mu.Unlock()

|

||||

for s := range i.Sinks {

|

||||

s.Accept(i.Name(), Trace, msg, i.retrieveImplied(args...)...)

|

||||

}

|

||||

i.log(Trace, msg, args...)

|

||||

}

|

||||

|

||||

// Emit the message and args at DEBUG level to log and sinks

|

||||

func (i *interceptLogger) Debug(msg string, args ...interface{}) {

|

||||

i.Logger.Debug(msg, args...)

|

||||

if atomic.LoadInt32(i.sinkCount) == 0 {

|

||||

return

|

||||

}

|

||||

|

||||

i.mu.Lock()

|

||||

defer i.mu.Unlock()

|

||||

for s := range i.Sinks {

|

||||

s.Accept(i.Name(), Debug, msg, i.retrieveImplied(args...)...)

|

||||

}

|

||||

i.log(Debug, msg, args...)

|

||||

}

|

||||

|

||||

// Emit the message and args at INFO level to log and sinks

|

||||

func (i *interceptLogger) Info(msg string, args ...interface{}) {

|

||||

i.Logger.Info(msg, args...)

|

||||

if atomic.LoadInt32(i.sinkCount) == 0 {

|

||||

return

|

||||

}

|

||||

|

||||

i.mu.Lock()

|

||||

defer i.mu.Unlock()

|

||||

for s := range i.Sinks {

|

||||

s.Accept(i.Name(), Info, msg, i.retrieveImplied(args...)...)

|

||||

}

|

||||

i.log(Info, msg, args...)

|

||||

}

|

||||

|

||||

// Emit the message and args at WARN level to log and sinks

|

||||

func (i *interceptLogger) Warn(msg string, args ...interface{}) {

|

||||

i.Logger.Warn(msg, args...)

|

||||

if atomic.LoadInt32(i.sinkCount) == 0 {

|

||||

return

|

||||

}

|

||||

|

||||

i.mu.Lock()

|

||||

defer i.mu.Unlock()

|

||||

for s := range i.Sinks {

|

||||

s.Accept(i.Name(), Warn, msg, i.retrieveImplied(args...)...)

|

||||

}

|

||||

i.log(Warn, msg, args...)

|

||||

}

|

||||

|

||||

// Emit the message and args at ERROR level to log and sinks

|

||||

func (i *interceptLogger) Error(msg string, args ...interface{}) {

|

||||

i.Logger.Error(msg, args...)

|

||||

if atomic.LoadInt32(i.sinkCount) == 0 {

|

||||

return

|

||||

}

|

||||

|

||||

i.mu.Lock()

|

||||

defer i.mu.Unlock()

|

||||

for s := range i.Sinks {

|

||||

s.Accept(i.Name(), Error, msg, i.retrieveImplied(args...)...)

|

||||

}

|

||||

i.log(Error, msg, args...)

|

||||

}

|

||||

|

||||

func (i *interceptLogger) retrieveImplied(args ...interface{}) []interface{} {

|

||||

|

|

@ -123,7 +91,7 @@ func (i *interceptLogger) retrieveImplied(args ...interface{}) []interface{} {

|

|||

return cp

|

||||

}

|

||||

|

||||

// Create a new sub-Logger that a name decending from the current name.

|

||||

// Create a new sub-Logger that a name descending from the current name.

|

||||

// This is used to create a subsystem specific Logger.

|

||||

// Registered sinks will subscribe to these messages as well.

|

||||

func (i *interceptLogger) Named(name string) Logger {

|

||||

|

|

|

|||

|

|

@ -21,10 +21,14 @@ import (

|

|||

"github.com/fatih/color"

|

||||

)

|

||||

|

||||

// TimeFormat to use for logging. This is a version of RFC3339 that contains

|

||||

// contains millisecond precision

|

||||

// TimeFormat is the time format to use for plain (non-JSON) output.

|

||||

// This is a version of RFC3339 that contains millisecond precision.

|

||||

const TimeFormat = "2006-01-02T15:04:05.000Z0700"

|

||||

|

||||

// TimeFormatJSON is the time format to use for JSON output.

|

||||

// This is a version of RFC3339 that contains microsecond precision.

|

||||

const TimeFormatJSON = "2006-01-02T15:04:05.000000Z07:00"

|

||||

|

||||

// errJsonUnsupportedTypeMsg is included in log json entries, if an arg cannot be serialized to json

|

||||

const errJsonUnsupportedTypeMsg = "logging contained values that don't serialize to json"

|

||||

|

||||

|

|

@ -52,10 +56,11 @@ var _ Logger = &intLogger{}

|

|||

// intLogger is an internal logger implementation. Internal in that it is

|

||||

// defined entirely by this package.

|

||||

type intLogger struct {

|

||||

json bool

|

||||

caller bool

|

||||

name string

|

||||

timeFormat string

|

||||

json bool

|

||||

callerOffset int

|

||||

name string

|

||||

timeFormat string

|

||||

disableTime bool

|

||||

|

||||

// This is an interface so that it's shared by any derived loggers, since

|

||||

// those derived loggers share the bufio.Writer as well.

|

||||

|

|

@ -79,7 +84,12 @@ func New(opts *LoggerOptions) Logger {

|

|||

// NewSinkAdapter returns a SinkAdapter with configured settings

|

||||

// defined by LoggerOptions

|

||||

func NewSinkAdapter(opts *LoggerOptions) SinkAdapter {

|

||||

return newLogger(opts)

|

||||

l := newLogger(opts)

|

||||

if l.callerOffset > 0 {

|

||||

// extra frames for interceptLogger.{Warn,Info,Log,etc...}, and SinkAdapter.Accept

|

||||

l.callerOffset += 2

|

||||

}

|

||||

return l

|

||||

}

|

||||

|

||||

func newLogger(opts *LoggerOptions) *intLogger {

|

||||

|

|

@ -104,29 +114,37 @@ func newLogger(opts *LoggerOptions) *intLogger {

|

|||

|

||||

l := &intLogger{

|

||||

json: opts.JSONFormat,

|

||||

caller: opts.IncludeLocation,

|

||||

name: opts.Name,

|

||||

timeFormat: TimeFormat,

|

||||

disableTime: opts.DisableTime,

|

||||

mutex: mutex,

|

||||

writer: newWriter(output, opts.Color),

|

||||

level: new(int32),

|

||||

exclude: opts.Exclude,

|

||||

independentLevels: opts.IndependentLevels,

|

||||

}

|

||||

if opts.IncludeLocation {

|

||||

l.callerOffset = offsetIntLogger

|

||||

}

|

||||

|

||||

l.setColorization(opts)

|

||||

|

||||

if opts.DisableTime {

|

||||

l.timeFormat = ""

|

||||

} else if opts.TimeFormat != "" {

|

||||

if l.json {

|

||||

l.timeFormat = TimeFormatJSON

|

||||

}

|

||||

if opts.TimeFormat != "" {

|

||||

l.timeFormat = opts.TimeFormat

|

||||

}

|

||||

|

||||

l.setColorization(opts)

|

||||

|

||||

atomic.StoreInt32(l.level, int32(level))

|

||||

|

||||

return l

|

||||

}

|

||||

|

||||

// offsetIntLogger is the stack frame offset in the call stack for the caller to

|

||||

// one of the Warn,Info,Log,etc methods.

|

||||

const offsetIntLogger = 3

|

||||

|

||||

// Log a message and a set of key/value pairs if the given level is at

|

||||

// or more severe that the threshold configured in the Logger.

|

||||

func (l *intLogger) log(name string, level Level, msg string, args ...interface{}) {

|

||||
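Review note: the hunk above replaces the file-name check with a stored `callerOffset`, and the intercept logger routes every level method through one shared helper so the frame depth is constant. The sketch below illustrates why that works, under stated assumptions: `demoLogger`, `emit`, and `wrapper` are hypothetical names, and the wrapper here adds one extra frame (the diff adds two for its own wrappers).

```go
package main

import (
	"fmt"
	"path/filepath"
	"runtime"
)

// offsetLogger is the number of frames between runtime.Caller and the
// user's call site in this sketch: emit -> log -> Info/Log -> user.
const offsetLogger = 3

type demoLogger struct {
	callerOffset int
}

// Info and Log both go through log, so the user's call site is always
// the same number of frames above emit. The noinline directives only
// keep the frame layout explicit for the demonstration.
//
//go:noinline
func (l *demoLogger) Info(msg string) string { return l.log("INFO", msg) }

//go:noinline
func (l *demoLogger) Log(level, msg string) string { return l.log(level, msg) }

//go:noinline
func (l *demoLogger) log(level, msg string) string { return l.emit(level, msg) }

//go:noinline
func (l *demoLogger) emit(level, msg string) string {
	loc := "?"
	if _, file, line, ok := runtime.Caller(l.callerOffset); ok {
		loc = fmt.Sprintf("%s:%d", filepath.Base(file), line)
	}
	return fmt.Sprintf("[%s] %s: %s", level, loc, msg)
}

// wrapper adds one frame of its own, so it bumps the offset by one.
type wrapper struct{ inner *demoLogger }

func newWrapper() *wrapper {
	return &wrapper{inner: &demoLogger{callerOffset: offsetLogger + 1}}
}

//go:noinline
func (w *wrapper) Info(msg string) string { return w.inner.Info(msg) }

func main() {
	l := &demoLogger{callerOffset: offsetLogger}
	fmt.Println(l.Info("direct call"))
	fmt.Println(l.Log("WARN", "via Log"))
	fmt.Println(newWrapper().Info("through a wrapper"))
}
```

Each printed line should name the line in `main` where the call was made, not a line inside the logger.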

|

|

@ -183,7 +201,8 @@ func trimCallerPath(path string) string {

|

|||

|

||||

// Non-JSON logging format function

|

||||

func (l *intLogger) logPlain(t time.Time, name string, level Level, msg string, args ...interface{}) {

|

||||

if len(l.timeFormat) > 0 {

|

||||

|

||||

if !l.disableTime {

|

||||

l.writer.WriteString(t.Format(l.timeFormat))

|

||||

l.writer.WriteByte(' ')

|

||||

}

|

||||

|

|

@ -195,17 +214,8 @@ func (l *intLogger) logPlain(t time.Time, name string, level Level, msg string,

|

|||

l.writer.WriteString("[?????]")

|

||||

}

|

||||

|

||||

offset := 3

|

||||

if l.caller {

|

||||

// Check if the caller is inside our package and inside

|

||||

// a logger implementation file

|

||||

if _, file, _, ok := runtime.Caller(3); ok {

|

||||

if strings.HasSuffix(file, "intlogger.go") || strings.HasSuffix(file, "interceptlogger.go") {

|

||||

offset = 4

|

||||

}

|

||||

}

|

||||

|

||||

if _, file, line, ok := runtime.Caller(offset); ok {

|

||||

if l.callerOffset > 0 {

|

||||

if _, file, line, ok := runtime.Caller(l.callerOffset); ok {

|

||||

l.writer.WriteByte(' ')

|

||||

l.writer.WriteString(trimCallerPath(file))

|

||||

l.writer.WriteByte(':')

|

||||

|

|

@ -251,6 +261,9 @@ func (l *intLogger) logPlain(t time.Time, name string, level Level, msg string,

|

|||

switch st := args[i+1].(type) {

|

||||

case string:

|

||||

val = st

|

||||

if st == "" {

|

||||

val = `""`

|

||||

}

|

||||

case int:

|

||||

val = strconv.FormatInt(int64(st), 10)

|

||||

case int64:

|

||||

|

|

@ -292,20 +305,32 @@ func (l *intLogger) logPlain(t time.Time, name string, level Level, msg string,

|

|||

}

|

||||

}

|

||||

|

||||

l.writer.WriteByte(' ')

|

||||

var key string

|

||||

|

||||

switch st := args[i].(type) {

|

||||

case string:

|

||||

l.writer.WriteString(st)

|

||||

key = st

|

||||

default:

|

||||

l.writer.WriteString(fmt.Sprintf("%s", st))

|

||||

key = fmt.Sprintf("%s", st)

|

||||

}

|

||||

l.writer.WriteByte('=')

|

||||

|

||||

if !raw && strings.ContainsAny(val, " \t\n\r") {

|

||||

if strings.Contains(val, "\n") {

|

||||

l.writer.WriteString("\n ")

|

||||

l.writer.WriteString(key)

|

||||

l.writer.WriteString("=\n")

|

||||

writeIndent(l.writer, val, " | ")

|

||||

l.writer.WriteString(" ")

|

||||

} else if !raw && strings.ContainsAny(val, " \t") {

|

||||

l.writer.WriteByte(' ')

|

||||

l.writer.WriteString(key)

|

||||

l.writer.WriteByte('=')

|

||||

l.writer.WriteByte('"')

|

||||

l.writer.WriteString(val)

|

||||

l.writer.WriteByte('"')

|

||||

} else {

|

||||

l.writer.WriteByte(' ')

|

||||

l.writer.WriteString(key)

|

||||

l.writer.WriteByte('=')

|

||||

l.writer.WriteString(val)

|

||||

}

|

||||

}

|

||||

|

|

@ -319,6 +344,25 @@ func (l *intLogger) logPlain(t time.Time, name string, level Level, msg string,

|

|||

}

|

||||

}

|

||||

|

||||

func writeIndent(w *writer, str string, indent string) {

|

||||

for {

|

||||

nl := strings.IndexByte(str, "\n"[0])

|

||||

if nl == -1 {

|

||||

if str != "" {

|

||||

w.WriteString(indent)

|

||||

w.WriteString(str)

|

||||

w.WriteString("\n")

|

||||

}

|

||||

return

|

||||

}

|

||||

|

||||

w.WriteString(indent)

|

||||

w.WriteString(str[:nl])

|

||||

w.WriteString("\n")

|

||||

str = str[nl+1:]

|

||||

}

|

||||

}

|

||||

|

||||

func (l *intLogger) renderSlice(v reflect.Value) string {

|

||||

var buf bytes.Buffer

|

||||

|

||||
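Review note: the two hunks above change how plain output renders a key/value pair. A value containing a newline is now written as an indented block via `writeIndent`, a value with spaces or tabs is quoted, and anything else is written bare. The sketch below reproduces that branching against a `strings.Builder` instead of the package's internal writer. `renderPair` is a hypothetical helper, and the exact indent strings are assumptions, since whitespace may not have survived in this view of the diff.

```go
package main

import (
	"fmt"
	"strings"
)

// writeIndent writes str one line at a time, prefixing each line with
// indent. A trailing newline in str does not add an extra empty line.
func writeIndent(b *strings.Builder, str, indent string) {
	for {
		nl := strings.IndexByte(str, '\n')
		if nl == -1 {
			if str != "" {
				b.WriteString(indent + str + "\n")
			}
			return
		}
		b.WriteString(indent + str[:nl] + "\n")
		str = str[nl+1:]
	}
}

// renderPair returns the text appended to a plain log line for one pair.
// raw mirrors the flag in the diff that suppresses quoting.
func renderPair(key, val string, raw bool) string {
	var b strings.Builder
	switch {
	case strings.Contains(val, "\n"):
		// Multi-line value: key on its own line, value as an indented block.
		b.WriteString("\n  " + key + "=\n")
		writeIndent(&b, val, "  | ")
		b.WriteString("  ")
	case !raw && strings.ContainsAny(val, " \t"):
		// Single-line value with whitespace: wrap in double quotes.
		b.WriteString(" " + key + `="` + val + `"`)
	default:
		b.WriteString(" " + key + "=" + val)
	}
	return b.String()
}

func main() {
	fmt.Printf("%q\n", renderPair("user", "alice", false))
	fmt.Printf("%q\n", renderPair("msg", "hello world", false))
	fmt.Printf("%q\n", renderPair("stack", "line one\nline two\n", false))
}
```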

|

|

@ -335,22 +379,19 @@ func (l *intLogger) renderSlice(v reflect.Value) string {

|

|||

|

||||

switch sv.Kind() {

|

||||

case reflect.String:

|

||||

val = sv.String()

|

||||

val = strconv.Quote(sv.String())

|

||||

case reflect.Int, reflect.Int16, reflect.Int32, reflect.Int64:

|

||||

val = strconv.FormatInt(sv.Int(), 10)

|

||||

case reflect.Uint, reflect.Uint16, reflect.Uint32, reflect.Uint64:

|

||||

val = strconv.FormatUint(sv.Uint(), 10)

|

||||

default:

|

||||

val = fmt.Sprintf("%v", sv.Interface())

|

||||

if strings.ContainsAny(val, " \t\n\r") {

|

||||

val = strconv.Quote(val)

|

||||

}

|

||||

}

|

||||

|

||||

if strings.ContainsAny(val, " \t\n\r") {

|

||||

buf.WriteByte('"')

|

||||

buf.WriteString(val)

|

||||

buf.WriteByte('"')

|

||||

} else {

|

||||

buf.WriteString(val)

|

||||

}

|

||||

buf.WriteString(val)

|

||||

}

|

||||

|

||||

buf.WriteRune(']')

|

||||

|

|

@ -416,8 +457,10 @@ func (l *intLogger) logJSON(t time.Time, name string, level Level, msg string, a

|

|||

|

||||

func (l intLogger) jsonMapEntry(t time.Time, name string, level Level, msg string) map[string]interface{} {

|

||||

vals := map[string]interface{}{

|

||||

"@message": msg,

|

||||

"@timestamp": t.Format("2006-01-02T15:04:05.000000Z07:00"),

|

||||

"@message": msg,

|

||||

}

|

||||

if !l.disableTime {

|

||||

vals["@timestamp"] = t.Format(l.timeFormat)

|

||||

}

|

||||

|

||||

var levelStr string

|

||||

|

|

@ -442,8 +485,8 @@ func (l intLogger) jsonMapEntry(t time.Time, name string, level Level, msg strin

|

|||

vals["@module"] = name

|

||||

}

|

||||

|

||||

if l.caller {

|

||||

if _, file, line, ok := runtime.Caller(4); ok {

|

||||

if l.callerOffset > 0 {

|

||||

if _, file, line, ok := runtime.Caller(l.callerOffset + 1); ok {

|

||||

vals["@caller"] = fmt.Sprintf("%s:%d", file, line)

|

||||

}

|

||||

}

|

||||

|

|

@ -633,8 +676,15 @@ func (l *intLogger) StandardLogger(opts *StandardLoggerOptions) *log.Logger {

|

|||

}

|

||||

|

||||

func (l *intLogger) StandardWriter(opts *StandardLoggerOptions) io.Writer {

|

||||

newLog := *l

|

||||

if l.callerOffset > 0 {

|

||||

// the stack is

|

||||

// logger.printf() -> l.Output() ->l.out.writer(hclog:stdlogAdaptor.write) -> hclog:stdlogAdaptor.dispatch()

|

||||

// So plus 4.

|

||||

newLog.callerOffset = l.callerOffset + 4

|

||||

}

|

||||

return &stdlogAdapter{

|

||||

log: l,

|

||||

log: &newLog,

|

||||

inferLevels: opts.InferLevels,

|

||||

forceLevel: opts.ForceLevel,

|

||||

}

|

||||

|

|

|

|||

|

|

@ -1,25 +1,22 @@

|

|||

Copyright (c) 2012, 2013 Ugorji Nwoke.

|

||||

The MIT License (MIT)

|

||||

|

||||

Copyright (c) 2012-2015 Ugorji Nwoke.

|

||||

All rights reserved.

|

||||

|

||||

Redistribution and use in source and binary forms, with or without modification,

|

||||

are permitted provided that the following conditions are met:

|

||||

Permission is hereby granted, free of charge, to any person obtaining a copy

|

||||

of this software and associated documentation files (the "Software"), to deal

|

||||

in the Software without restriction, including without limitation the rights

|

||||

to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

|

||||

copies of the Software, and to permit persons to whom the Software is

|

||||

furnished to do so, subject to the following conditions:

|

||||

|

||||

* Redistributions of source code must retain the above copyright notice,

|

||||

this list of conditions and the following disclaimer.

|

||||

* Redistributions in binary form must reproduce the above copyright notice,

|

||||

this list of conditions and the following disclaimer in the documentation

|

||||

and/or other materials provided with the distribution.

|

||||

* Neither the name of the author nor the names of its contributors may be used

|

||||

to endorse or promote products derived from this software

|

||||

without specific prior written permission.

|

||||

The above copyright notice and this permission notice shall be included in all

|

||||

copies or substantial portions of the Software.

|

||||

|

||||

THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS AND CONTRIBUTORS "AS IS" AND

|

||||

ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE IMPLIED

|

||||

WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE ARE

|

||||

DISCLAIMED. IN NO EVENT SHALL THE COPYRIGHT HOLDER OR CONTRIBUTORS BE LIABLE FOR

|

||||

ANY DIRECT, INDIRECT, INCIDENTAL, SPECIAL, EXEMPLARY, OR CONSEQUENTIAL DAMAGES

|

||||

(INCLUDING, BUT NOT LIMITED TO, PROCUREMENT OF SUBSTITUTE GOODS OR SERVICES;

|

||||

LOSS OF USE, DATA, OR PROFITS; OR BUSINESS INTERRUPTION) HOWEVER CAUSED AND ON

|

||||

ANY THEORY OF LIABILITY, WHETHER IN CONTRACT, STRICT LIABILITY, OR TORT

|

||||

(INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN ANY WAY OUT OF THE USE OF THIS

|

||||

SOFTWARE, EVEN IF ADVISED OF THE POSSIBILITY OF SUCH DAMAGE.

|

||||

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

|

||||

IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

|

||||

FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

|

||||

AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

|

||||

LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

|

||||

OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

|

||||

SOFTWARE.

|

||||

|

|

|

|||

|

|

@ -1,143 +0,0 @@

|

|||

// Copyright (c) 2012, 2013 Ugorji Nwoke. All rights reserved.

|

||||

// Use of this source code is governed by a BSD-style license found in the LICENSE file.

|

||||

|

||||

/*

|

||||

High Performance, Feature-Rich Idiomatic Go encoding library for msgpack and binc .

|

||||

|

||||

Supported Serialization formats are:

|

||||

|

||||

- msgpack: [https://github.com/msgpack/msgpack]

|

||||

- binc: [http://github.com/ugorji/binc]

|

||||

|

||||

To install:

|

||||

|

||||

go get github.com/ugorji/go/codec

|

||||

|

||||

The idiomatic Go support is as seen in other encoding packages in

|

||||

the standard library (ie json, xml, gob, etc).

|

||||

|

||||

Rich Feature Set includes:

|

||||

|

||||

- Simple but extremely powerful and feature-rich API

|

||||

- Very High Performance.

|

||||

Our extensive benchmarks show us outperforming Gob, Json and Bson by 2-4X.

|

||||

This was achieved by taking extreme care on:

|

||||

- managing allocation

|

||||

- function frame size (important due to Go's use of split stacks),

|

||||

- reflection use (and by-passing reflection for common types)

|

||||

- recursion implications

|

||||

- zero-copy mode (encoding/decoding to byte slice without using temp buffers)

|

||||

- Correct.

|

||||

Care was taken to precisely handle corner cases like:

|

||||

overflows, nil maps and slices, nil value in stream, etc.

|

||||

- Efficient zero-copying into temporary byte buffers

|

||||

when encoding into or decoding from a byte slice.

|

||||

- Standard field renaming via tags

|

||||

- Encoding from any value

|

||||

(struct, slice, map, primitives, pointers, interface{}, etc)

|

||||

- Decoding into pointer to any non-nil typed value

|

||||

(struct, slice, map, int, float32, bool, string, reflect.Value, etc)

|

||||

- Supports extension functions to handle the encode/decode of custom types

|

||||

- Support Go 1.2 encoding.BinaryMarshaler/BinaryUnmarshaler

|

||||

- Schema-less decoding

|

||||

(decode into a pointer to a nil interface{} as opposed to a typed non-nil value).

|

||||

Includes Options to configure what specific map or slice type to use

|

||||

when decoding an encoded list or map into a nil interface{}

|

||||

- Provides a RPC Server and Client Codec for net/rpc communication protocol.

|

||||

- Msgpack Specific:

|

||||

- Provides extension functions to handle spec-defined extensions (binary, timestamp)

|

||||

- Options to resolve ambiguities in handling raw bytes (as string or []byte)

|

||||

during schema-less decoding (decoding into a nil interface{})

|

||||

- RPC Server/Client Codec for msgpack-rpc protocol defined at:

|

||||

https://github.com/msgpack-rpc/msgpack-rpc/blob/master/spec.md

|

||||

- Fast Paths for some container types:

|

||||

For some container types, we circumvent reflection and its associated overhead

|

||||

and allocation costs, and encode/decode directly. These types are:

|

||||

[]interface{}

|

||||

[]int

|

||||

[]string

|

||||

map[interface{}]interface{}

|

||||

map[int]interface{}

|

||||

map[string]interface{}

|

||||

|

||||

Extension Support

|

||||

|

||||

Users can register a function to handle the encoding or decoding of

|

||||

their custom types.

|

||||

|

||||

There are no restrictions on what the custom type can be. Some examples:

|

||||

|

||||

type BisSet []int

|

||||

type BitSet64 uint64

|

||||

type UUID string

|

||||

type MyStructWithUnexportedFields struct { a int; b bool; c []int; }

|

||||

type GifImage struct { ... }

|

||||

|

||||

As an illustration, MyStructWithUnexportedFields would normally be

|

||||

encoded as an empty map because it has no exported fields, while UUID

|

||||

would be encoded as a string. However, with extension support, you can

|

||||

encode any of these however you like.

|

||||

|

||||

RPC

|

||||

|

||||

RPC Client and Server Codecs are implemented, so the codecs can be used

|

||||

with the standard net/rpc package.

|

||||

|

||||

Usage

|

||||

|

||||

Typical usage model:

|

||||

|

||||

// create and configure Handle

|

||||

var (

|

||||

bh codec.BincHandle

|

||||

mh codec.MsgpackHandle

|

||||

)

|

||||

|

||||

mh.MapType = reflect.TypeOf(map[string]interface{}(nil))

|

||||

|

||||

// configure extensions

|

||||

// e.g. for msgpack, define functions and enable Time support for tag 1

|

||||

// mh.AddExt(reflect.TypeOf(time.Time{}), 1, myMsgpackTimeEncodeExtFn, myMsgpackTimeDecodeExtFn)

|

||||

|

||||

// create and use decoder/encoder

|

||||

var (

|

||||

r io.Reader

|

||||

w io.Writer

|

||||

b []byte

|

||||

h = &bh // or mh to use msgpack

|

||||

)

|

||||

|

||||

dec = codec.NewDecoder(r, h)

|

||||

dec = codec.NewDecoderBytes(b, h)

|

||||

err = dec.Decode(&v)

|

||||

|

||||

enc = codec.NewEncoder(w, h)

|

||||

enc = codec.NewEncoderBytes(&b, h)

|

||||

err = enc.Encode(v)

|

||||

|

||||

//RPC Server

|

||||

go func() {

|

||||

for {

|

||||

conn, err := listener.Accept()

|

||||

rpcCodec := codec.GoRpc.ServerCodec(conn, h)

|

||||

//OR rpcCodec := codec.MsgpackSpecRpc.ServerCodec(conn, h)

|

||||

rpc.ServeCodec(rpcCodec)

|

||||

}

|

||||

}()

|

||||

|

||||

//RPC Communication (client side)

|

||||

conn, err = net.Dial("tcp", "localhost:5555")

|

||||

rpcCodec := codec.GoRpc.ClientCodec(conn, h)

|

||||

//OR rpcCodec := codec.MsgpackSpecRpc.ClientCodec(conn, h)

|

||||

client := rpc.NewClientWithCodec(rpcCodec)

|

||||

|

||||

Representative Benchmark Results

|

||||

|

||||

Run the benchmark suite using:

|

||||

go test -bi -bench=. -benchmem

|

||||

|

||||

To run full benchmark suite (including against vmsgpack and bson),

|

||||

see notes in ext_dep_test.go

|

||||

|

||||

*/

|

||||

package codec

|

||||

|

|

@ -1,174 +0,0 @@

|

|||

# Codec

|

||||

|

||||

High Performance and Feature-Rich Idiomatic Go Library providing

|

||||

encode/decode support for different serialization formats.

|

||||

|

||||

Supported Serialization formats are:

|

||||

|

||||

- msgpack: [https://github.com/msgpack/msgpack]

|

||||

- binc: [http://github.com/ugorji/binc]

|

||||

|

||||

To install:

|

||||

|

||||

go get github.com/ugorji/go/codec

|

||||

|

||||

Online documentation: [http://godoc.org/github.com/ugorji/go/codec]

|

||||

|

||||

The idiomatic Go support is as seen in other encoding packages in

|

||||

the standard library (ie json, xml, gob, etc).

|

||||

|

||||

Rich Feature Set includes:

|

||||

|

||||

- Simple but extremely powerful and feature-rich API

|

||||

- Very High Performance.

|

||||

Our extensive benchmarks show us outperforming Gob, Json and Bson by 2-4X.

|

||||

This was achieved by taking extreme care on:

|

||||

- managing allocation

|

||||

- function frame size (important due to Go's use of split stacks),

|

||||

- reflection use (and by-passing reflection for common types)

|

||||

- recursion implications

|

||||

- zero-copy mode (encoding/decoding to byte slice without using temp buffers)

|

||||

- Correct.

|

||||

Care was taken to precisely handle corner cases like:

|

||||

overflows, nil maps and slices, nil value in stream, etc.

|

||||

- Efficient zero-copying into temporary byte buffers

|

||||

when encoding into or decoding from a byte slice.

|

||||

- Standard field renaming via tags

|

||||

- Encoding from any value

|

||||

(struct, slice, map, primitives, pointers, interface{}, etc)

|

||||

- Decoding into pointer to any non-nil typed value

|

||||

(struct, slice, map, int, float32, bool, string, reflect.Value, etc)

|

||||

- Supports extension functions to handle the encode/decode of custom types

|

||||

- Support Go 1.2 encoding.BinaryMarshaler/BinaryUnmarshaler

|

||||

- Schema-less decoding

|

||||

(decode into a pointer to a nil interface{} as opposed to a typed non-nil value).

|

||||

Includes Options to configure what specific map or slice type to use

|

||||

when decoding an encoded list or map into a nil interface{}

|

||||

- Provides a RPC Server and Client Codec for net/rpc communication protocol.

|

||||

- Msgpack Specific:

|

||||

- Provides extension functions to handle spec-defined extensions (binary, timestamp)

|

||||