mirror of

https://github.com/facebook/rocksdb.git

synced 2024-12-03 05:54:17 +00:00

263 commits

| Author | SHA1 | Message | Date | |

|---|---|---|---|---|

|

|

2dda7a0dd2 |

Detect compaction pressure at lower debt ratios (#12236)

Summary: This PR significantly reduces the compaction pressure threshold introduced in https://github.com/facebook/rocksdb/issues/12130 by a factor of 64x. The original number was too high to trigger in scenarios where compaction parallelism was needed. Pull Request resolved: https://github.com/facebook/rocksdb/pull/12236 Reviewed By: cbi42 Differential Revision: D52765685 Pulled By: ajkr fbshipit-source-id: 8298e966933b485de24f63165a00e672cb9db6c4 |

||

|

|

5a9ecf6614 |

Automated modernization (#12210)

Summary: Pull Request resolved: https://github.com/facebook/rocksdb/pull/12210 Reviewed By: hx235 Differential Revision: D52559771 Pulled By: ajkr fbshipit-source-id: 1ccdd3a0180cc02bc0441f20b0e4a1db50841b03 |

||

|

|

06e593376c |

Group SST write in flush, compaction and db open with new stats (#11910)

Summary: ## Context/Summary Similar to https://github.com/facebook/rocksdb/pull/11288, https://github.com/facebook/rocksdb/pull/11444, categorizing SST/blob file write according to different io activities allows more insight into the activity. For that, this PR does the following: - Tag different write IOs by passing down and converting WriteOptions to IOOptions - Add new SST_WRITE_MICROS histogram in WritableFileWriter::Append() and breakdown FILE_WRITE_{FLUSH|COMPACTION|DB_OPEN}_MICROS Some related code refactoring to make the implementation cleaner: - Blob stats - Replace high-level write measurement with low-level WritableFileWriter::Append() measurement for BLOB_DB_BLOB_FILE_WRITE_MICROS. This is to make FILE_WRITE_{FLUSH|COMPACTION|DB_OPEN}_MICROS include blob file. As a consequence, this introduces some behavioral changes on it, see HISTORY and db bench test plan below for more info. - Fix bugs where BLOB_DB_BLOB_FILE_SYNCED/BLOB_DB_BLOB_FILE_BYTES_WRITTEN include files that failed to sync and bytes that failed to write. - Refactor WriteOptions constructor for easier construction with io_activity and rate_limiter_priority - Refactor DBImpl::~DBImpl()/BlobDBImpl::Close() to bypass thread op verification - Build table - TableBuilderOptions now includes Read/WriteOptions so BuildTable() does not need to take these two variables - Replace the io_priority passed into BuildTable() with TableBuilderOptions::WriteOptions::rate_limiter_priority. Similar for BlobFileBuilder. 
This parameter is used for dynamically changing file io priority for flush, see https://github.com/facebook/rocksdb/pull/9988?fbclid=IwAR1DtKel6c-bRJAdesGo0jsbztRtciByNlvokbxkV6h_L-AE9MACzqRTT5s for more - Update ThreadStatus::FLUSH_BYTES_WRITTEN to use io_activity to track flush IO in flush job and db open instead of io_priority ## Test ### db bench Flush ``` ./db_bench --statistics=1 --benchmarks=fillseq --num=100000 --write_buffer_size=100 rocksdb.sst.write.micros P50 : 1.830863 P95 : 4.094720 P99 : 6.578947 P100 : 26.000000 COUNT : 7875 SUM : 20377 rocksdb.file.write.flush.micros P50 : 1.830863 P95 : 4.094720 P99 : 6.578947 P100 : 26.000000 COUNT : 7875 SUM : 20377 rocksdb.file.write.compaction.micros P50 : 0.000000 P95 : 0.000000 P99 : 0.000000 P100 : 0.000000 COUNT : 0 SUM : 0 rocksdb.file.write.db.open.micros P50 : 0.000000 P95 : 0.000000 P99 : 0.000000 P100 : 0.000000 COUNT : 0 SUM : 0 ``` Compaction, db open ``` Setup: ./db_bench --statistics=1 --benchmarks=fillseq --num=10000 --disable_auto_compactions=1 -write_buffer_size=100 --db=../db_bench Run:./db_bench --statistics=1 --benchmarks=compact --db=../db_bench --use_existing_db=1 rocksdb.sst.write.micros P50 : 2.675325 P95 : 9.578788 P99 : 18.780000 P100 : 314.000000 COUNT : 638 SUM : 3279 rocksdb.file.write.flush.micros P50 : 0.000000 P95 : 0.000000 P99 : 0.000000 P100 : 0.000000 COUNT : 0 SUM : 0 rocksdb.file.write.compaction.micros P50 : 2.757353 P95 : 9.610687 P99 : 19.316667 P100 : 314.000000 COUNT : 615 SUM : 3213 rocksdb.file.write.db.open.micros P50 : 2.055556 P95 : 3.925000 P99 : 9.000000 P100 : 9.000000 COUNT : 23 SUM : 66 ``` blob stats - just to make sure they aren't broken by this PR ``` Integrated Blob DB Setup: ./db_bench --enable_blob_files=1 --statistics=1 --benchmarks=fillseq --num=10000 --disable_auto_compactions=1 -write_buffer_size=100 --db=../db_bench Run:./db_bench --enable_blob_files=1 --statistics=1 --benchmarks=compact --db=../db_bench --use_existing_db=1 pre-PR: 
rocksdb.blobdb.blob.file.write.micros P50 : 7.298246 P95 : 9.771930 P99 : 9.991813 P100 : 16.000000 COUNT : 235 SUM : 1600 rocksdb.blobdb.blob.file.synced COUNT : 1 rocksdb.blobdb.blob.file.bytes.written COUNT : 34842 post-PR: rocksdb.blobdb.blob.file.write.micros P50 : 2.000000 P95 : 2.829360 P99 : 2.993779 P100 : 9.000000 COUNT : 707 SUM : 1614 - COUNT is higher and values are smaller as it includes header and footer write - COUNT is 3X higher due to each Append() count as one post-PR, while in pre-PR, 3 Append()s counts as one. See https://github.com/facebook/rocksdb/pull/11910/files#diff-32b811c0a1c000768cfb2532052b44dc0b3bf82253f3eab078e15ff201a0dabfL157-L164 rocksdb.blobdb.blob.file.synced COUNT : 1 (stay the same) rocksdb.blobdb.blob.file.bytes.written COUNT : 34842 (stay the same) ``` ``` Stacked Blob DB Run: ./db_bench --use_blob_db=1 --statistics=1 --benchmarks=fillseq --num=10000 --disable_auto_compactions=1 -write_buffer_size=100 --db=../db_bench pre-PR: rocksdb.blobdb.blob.file.write.micros P50 : 12.808042 P95 : 19.674497 P99 : 28.539683 P100 : 51.000000 COUNT : 10000 SUM : 140876 rocksdb.blobdb.blob.file.synced COUNT : 8 rocksdb.blobdb.blob.file.bytes.written COUNT : 1043445 post-PR: rocksdb.blobdb.blob.file.write.micros P50 : 1.657370 P95 : 2.952175 P99 : 3.877519 P100 : 24.000000 COUNT : 30001 SUM : 67924 - COUNT is higher and values are smaller as it includes header and footer write - COUNT is 3X higher due to each Append() count as one post-PR, while in pre-PR, 3 Append()s counts as one. 
See https://github.com/facebook/rocksdb/pull/11910/files#diff-32b811c0a1c000768cfb2532052b44dc0b3bf82253f3eab078e15ff201a0dabfL157-L164 rocksdb.blobdb.blob.file.synced COUNT : 8 (stay the same) rocksdb.blobdb.blob.file.bytes.written COUNT : 1043445 (stay the same) ``` ### Rehearsal CI stress test Trigger 3 full runs of all our CI stress tests ### Performance Flush ``` TEST_TMPDIR=/dev/shm ./db_basic_bench_pre_pr --benchmark_filter=ManualFlush/key_num:524288/per_key_size:256 --benchmark_repetitions=1000 -- default: 1 thread is used to run benchmark; enable_statistics = true Pre-pr: avg 507515519.3 ns 497686074,499444327,500862543,501389862,502994471,503744435,504142123,504224056,505724198,506610393,506837742,506955122,507695561,507929036,508307733,508312691,508999120,509963561,510142147,510698091,510743096,510769317,510957074,511053311,511371367,511409911,511432960,511642385,511691964,511730908, Post-pr: avg 511971266.5 ns, regressed 0.88% 502744835,506502498,507735420,507929724,508313335,509548582,509994942,510107257,510715603,511046955,511352639,511458478,512117521,512317380,512766303,512972652,513059586,513804934,513808980,514059409,514187369,514389494,514447762,514616464,514622882,514641763,514666265,514716377,514990179,515502408, ``` Compaction ``` TEST_TMPDIR=/dev/shm ./db_basic_bench_{pre|post}_pr --benchmark_filter=ManualCompaction/comp_style:0/max_data:134217728/per_key_size:256/enable_statistics:1 --benchmark_repetitions=1000 -- default: 1 thread is used to run benchmark Pre-pr: avg 495346098.30 ns 492118301,493203526,494201411,494336607,495269217,495404950,496402598,497012157,497358370,498153846 Post-pr: avg 504528077.20, regressed 1.85%. 
"ManualCompaction" include flush so the isolated regression for compaction should be around 1.85-0.88 = 0.97% 502465338,502485945,502541789,502909283,503438601,504143885,506113087,506629423,507160414,507393007 ``` Put with WAL (in case passing WriteOptions slows down this path even without collecting SST write stats) ``` TEST_TMPDIR=/dev/shm ./db_basic_bench_pre_pr --benchmark_filter=DBPut/comp_style:0/max_data:107374182400/per_key_size:256/enable_statistics:1/wal:1 --benchmark_repetitions=1000 -- default: 1 thread is used to run benchmark Pre-pr: avg 3848.10 ns 3814,3838,3839,3848,3854,3854,3854,3860,3860,3860 Post-pr: avg 3874.20 ns, regressed 0.68% 3863,3867,3871,3874,3875,3877,3877,3877,3880,3881 ``` Pull Request resolved: https://github.com/facebook/rocksdb/pull/11910 Reviewed By: ajkr Differential Revision: D49788060 Pulled By: hx235 fbshipit-source-id: 79e73699cda5be3b66461687e5147c2484fc5eff |

||

|

|

c6c683a0ca |

Remove the default force behavior for EnableFileDeletion API (#12001)

Summary: Disabling file deletion can be critical for operations like making a backup, recovery from manifest IO error (for now). Ideally as long as there is one caller requesting file deletion disabled, it should be kept disabled until all callers agree to re-enable it. So this PR removes the default forcing behavior for the `EnableFileDeletion` API, and users need to explicitly pass the argument if they insist on forcing, knowing what can potentially be disrupted as a consequence. This PR removes the API's default argument value so it will cause breakage for all users that are relying on the default value, regardless of whether the forcing behavior is critical for them. When fixing this breakage, it's good to check if the forcing behavior is indeed needed and potential disruption is OK. This PR also makes unit tests that do not need the force behavior do a regular enable file deletion. Pull Request resolved: https://github.com/facebook/rocksdb/pull/12001 Reviewed By: ajkr Differential Revision: D51214683 Pulled By: jowlyzhang fbshipit-source-id: ca7b1ebf15c09eed00f954da2f75c00d2c6a97e4 |
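The "kept disabled until all callers agree to re-enable it" idea in this message can be pictured as a counter of outstanding disable requests. The following is only a toy sketch under that assumption: `FileDeletionGate` and its methods are hypothetical names invented for illustration and are not RocksDB's implementation or API.

```cpp
#include <cassert>

// Hypothetical toy model (not RocksDB code): counts outstanding
// "disable file deletion" requests from independent callers.
class FileDeletionGate {
 public:
  void Disable() { ++disable_count_; }

  // Returns true if deletions are enabled after the call.
  // force == true discards every outstanding request; in this sketch the
  // caller must always pass the argument explicitly, mirroring the removal
  // of the default value described above.
  bool Enable(bool force) {
    assert(disable_count_ >= 0);
    if (force) {
      disable_count_ = 0;
    } else if (disable_count_ > 0) {
      --disable_count_;
    }
    return disable_count_ == 0;
  }

  bool DeletionsEnabled() const { return disable_count_ == 0; }

 private:
  int disable_count_ = 0;
};
```

With two outstanding `Disable()` calls, a single `Enable(false)` leaves deletions disabled, while `Enable(true)` re-enables them immediately, which is the disruptive case the message asks callers to double-check.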

||

|

|

0ff7665c95 |

Fix low priority write may cause crash when it is rate limited (#11932)

Summary: Fixed https://github.com/facebook/rocksdb/issues/11902 Pull Request resolved: https://github.com/facebook/rocksdb/pull/11932 Reviewed By: akankshamahajan15 Differential Revision: D50573356 Pulled By: hx235 fbshipit-source-id: adeb1abdc43b523b0357746055ce4a2eabde56a1 |

||

|

|

d5bc30befa |

Enforce status checking after Valid() returns false for IteratorWrapper (#11975)

Summary: ... when compiled with ASSERT_STATUS_CHECKED = 1. The main change is in iterator_wrapper.h. The remaining changes are just fixing existing unit tests. Adding this check to IteratorWrapper gives good coverage as the class is used in many places, including child iterators under merging iterator, merging iterator under DB iter, file_iter under level iterator, etc. This change can catch the bug fixed in https://github.com/facebook/rocksdb/issues/11782. Future follow up: enable `ASSERT_STATUS_CHECKED=1` for stress test and for DEBUG_LEVEL=0. Pull Request resolved: https://github.com/facebook/rocksdb/pull/11975 Test Plan: * `ASSERT_STATUS_CHECKED=1 DEBUG_LEVEL=2 make -j32 J=32 check` * I tried to run stress test with `ASSERT_STATUS_CHECKED=1`, but there is a lot of existing stress code that ignores status checking and fails without the change in this PR. So defer that to a follow up task. Reviewed By: ajkr Differential Revision: D50383790 Pulled By: cbi42 fbshipit-source-id: 1a28ce0f5fdf1890f93400b26b3b1b3a287624ce |
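The reason a status check matters once `Valid()` returns false is that "no more entries" and "an error occurred" look the same to the loop condition. A minimal sketch of that pattern follows; `ToyStatus`, `ToyIterator`, `kNoFailure` and `ScanAll` are hypothetical stand-ins written for this illustration, not RocksDB's `Status` or `IteratorWrapper`.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <utility>
#include <vector>

// Hypothetical stand-in for a status object.
struct ToyStatus {
  bool ok;
  std::string message;
};

constexpr std::size_t kNoFailure = static_cast<std::size_t>(-1);

// Hypothetical iterator that can simulate a read error at a given position.
class ToyIterator {
 public:
  ToyIterator(std::vector<int> keys, std::size_t fail_at_pos)
      : keys_(std::move(keys)), fail_at_pos_(fail_at_pos) {}

  bool Valid() const { return status_.ok && pos_ < keys_.size(); }
  int key() const {
    assert(Valid());
    return keys_[pos_];
  }
  void Next() {
    assert(Valid());
    ++pos_;
    if (pos_ == fail_at_pos_) {
      status_ = ToyStatus{false, "simulated read error"};
    }
  }
  const ToyStatus& status() const { return status_; }

 private:
  std::vector<int> keys_;
  std::size_t fail_at_pos_;
  std::size_t pos_ = 0;
  ToyStatus status_{true, ""};
};

// Returns true only if the scan reached the real end of the data.
bool ScanAll(ToyIterator& it, std::vector<int>* out) {
  for (; it.Valid(); it.Next()) {
    out->push_back(it.key());
  }
  // Valid() == false is ambiguous, so the status must be consulted.
  return it.status().ok;
}
```

A caller that skips the final `status()` check would treat a truncated scan as a complete one, which is the class of bug the enforced check is meant to surface.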

||

|

|

03a74411c0 |

Add unit test for default temperature (#11722)

Summary: This piggybacks on the existing last level file temperature statistics test to test the default temperature becoming effective. While adding this unit test, I found that the approach to swap out and use default temperature in `VersionBuilder::LoadTableHandlers` will miss the L0 files created from flush, and only work for existing SST files and SST files created by compaction. So this PR moves that logic to `TableCache::GetTableReader`. Pull Request resolved: https://github.com/facebook/rocksdb/pull/11722 Test Plan: ``` ./db_test2 --gtest_filter="*LastLevelStatistics*" make all check ``` Reviewed By: pdillinger Differential Revision: D48489171 Pulled By: jowlyzhang fbshipit-source-id: ac29f7d484916f3218729594c5bb35c4f2979ac2 |

||

|

|

c2aad555c3 |

Add CompressionOptions::checksum for enabling ZSTD checksum (#11666)

Summary:

Optionally enable zstd checksum flag (

|

||

|

|

76ed9a3990 |

Add missing status check when compiling with ASSERT_STATUS_CHECKED=1 (#11686)

Summary:

It seems the flag `-fno-elide-constructors` is incorrectly overwritten in Makefile by

|

||

|

|

e95cc1217d |

CompactRange() always compacts to bottommost level for leveled compaction (#11468)

Summary:

Currently for leveled compaction, the max output level of a call to `CompactRange()` is pre-computed before compacting each level. This max output level is the max level whose key range overlaps with the manual compaction key range. However, during manual compaction, files in the max output level may be compacted down further by some background compaction. When this background compaction is a trivial move, there is a race condition and the manual compaction may not be able to compact all keys in the specified key range. This PR updates `CompactRange()` to always compact to the bottommost level to make this race condition less likely (it can still happen, see more in comment here:

|

||

|

|

39f5846ec7 |

Much better stats for seeks and prefix filtering (#11460)

Summary: We want to know more about opportunities for better range filters, and the effectiveness of our own range filters. Currently the stats are very limited, essentially logging just hits and misses against prefix filters for range scans in BLOOM_FILTER_PREFIX_* without tracking the false positive rate. Perhaps confusingly, when prefix filters are used for point queries, the stats are currently going into the non-PREFIX tickers. This change does several things: * Introduce new stat tickers for seeks and related filtering, \*LEVEL_SEEK\* * Most importantly, allows us to see opportunities for range filtering. Specifically, we can count how many times a seek in an SST file accesses at least one data block, and how many times at least one value() is then accessed. If a data block was accessed but no value(), we can generally assume that the key(s) seen was(were) not of interest so could have been filtered with the right kind of filter, avoiding the data block access. * We can get the same level of detail when a filter (for now, prefix Bloom/ribbon) is used, or not. Specifically, we can infer a false positive rate for prefix filters (not available before) from the seek "false positive" rate: when a data block is accessed but no value() is called. (There can be other explanations for a seek false positive, but in typical iterator usage it would indicate a filter false positive.) * For efficiency, I wanted to avoid making additional calls to the prefix extractor (or key comparisons, etc.), which would be required if we wanted to more precisely detect filter false positives. I believe that instrumenting value() is the best balance of efficiency vs. accurately measuring what we are often interested in. * The stats are divided between last level and non-last levels, to help understand potential tiered storage use cases. * The old BLOOM_FILTER_PREFIX_* stats have a different meaning: no longer referring to iterators but to point queries using prefix filters. 
BLOOM_FILTER_PREFIX_TRUE_POSITIVE is added for computing the prefix false positive rate on point queries, which can be due to filter false positives as well as different keys with the same prefix. * Similarly, the non-PREFIX BLOOM_FILTER stats are now for whole key filtering only. Pull Request resolved: https://github.com/facebook/rocksdb/pull/11460 Test Plan: unit tests updated, including updating many to pop the stat value since last read to improve test readability and maintainability. Performance test shows a consistent small improvement with these changes, both with clang and with gcc. CPU profile indicates that RecordTick is using less CPU, and this makes sense at least for a high filter miss rate. Before, we were recording two ticks per filter miss in iterators (CHECKED & USEFUL) and now recording just one (FILTERED). Create DB with ``` TEST_TMPDIR=/dev/shm ./db_bench -benchmarks=fillrandom -num=10000000 -disable_wal=1 -write_buffer_size=30000000 -bloom_bits=8 -compaction_style=2 -fifo_compaction_max_table_files_size_mb=10000 -fifo_compaction_allow_compaction=0 -prefix_size=8 ``` And run simultaneous before&after with ``` TEST_TMPDIR=/dev/shm ./db_bench -readonly -benchmarks=seekrandom[-X1000] -num=10000000 -bloom_bits=8 -compaction_style=2 -fifo_compaction_max_table_files_size_mb=10000 -fifo_compaction_allow_compaction=0 -prefix_size=8 -seek_nexts=1 -duration=20 -seed=43 -threads=8 -cache_size=1000000000 -statistics ``` Before: seekrandom [AVG 275 runs] : 189680 (± 222) ops/sec; 18.4 (± 0.0) MB/sec After: seekrandom [AVG 275 runs] : 197110 (± 208) ops/sec; 19.1 (± 0.0) MB/sec Reviewed By: ajkr Differential Revision: D46029177 Pulled By: pdillinger fbshipit-source-id: cdace79a2ea548d46c5900b068c5b7c3a02e5822 |
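The inferred seek "false positive" rate described in this message is a simple ratio: seeks that touched a data block but never led to a `value()` call, divided by all seeks that touched a data block. A small sketch of that arithmetic follows; the struct and field names are hypothetical and do not correspond to the actual ticker names.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical counters; the real stats are separate tickers whose exact
// names are not reproduced here.
struct SeekCounters {
  std::uint64_t seeks_with_data_block;  // seeks that read >= 1 data block
  std::uint64_t seeks_with_value;       // of those, seeks followed by value()
};

// Fraction of data-block-accessing seeks where no value() was read.
// In typical iterator usage this approximates the filter false positive rate.
double SeekFalsePositiveRate(const SeekCounters& c) {
  assert(c.seeks_with_value <= c.seeks_with_data_block);
  if (c.seeks_with_data_block == 0) {
    return 0.0;
  }
  return static_cast<double>(c.seeks_with_data_block - c.seeks_with_value) /
         static_cast<double>(c.seeks_with_data_block);
}
```

For example, 1000 seeks that accessed a data block with 900 of them reading a value give a rate of 0.1. As the message notes, other explanations for a seek false positive exist, so this is an estimate rather than an exact filter metric.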

||

|

|

586d78b31e |

Remove wait_unscheduled from waitForCompact internal API (#11443)

Summary: Context: In pull request https://github.com/facebook/rocksdb/issues/11436, we are introducing a new public API `waitForCompact(const WaitForCompactOptions& wait_for_compact_options)`. This API invokes the internal implementation `waitForCompact(bool wait_unscheduled=false)`. The unscheduled parameter indicates the compactions that are not yet scheduled but are required to process items in the queue. In certain cases, we are unable to wait for compactions, such as during a shutdown or when background jobs are paused. It is important to return the appropriate status in these scenarios. For all other cases, we should wait for all compaction and flush jobs, including the unscheduled ones. The primary purpose of this new API is to wait until the system has resolved its compaction debt. Currently, the usage of `wait_unscheduled` is limited to test code. This pull request eliminates the usage of wait_unscheduled. The internal `waitForCompact()` API now waits for unscheduled compactions unless the db is undergoing a shutdown. In the event of a shutdown, the API returns `Status::ShutdownInProgress()`. Additionally, a new parameter, `abort_on_pause`, has been introduced with a default value of `false`. This parameter addresses the possibility of waiting indefinitely for unscheduled jobs if `PauseBackgroundWork()` was called before `waitForCompact()` is invoked. By setting `abort_on_pause` to `true`, the API will immediately return `Status::Aborted`. Furthermore, all tests that previously called `waitForCompact(true)` have been fixed. Pull Request resolved: https://github.com/facebook/rocksdb/pull/11443 Test Plan: Existing tests that involve a shutdown in progress: - DBCompactionTest::CompactRangeShutdownWhileDelayed - DBTestWithParam::PreShutdownMultipleCompaction - DBTestWithParam::PreShutdownCompactionMiddle Reviewed By: pdillinger Differential Revision: D45923426 Pulled By: jaykorean fbshipit-source-id: 7dc93fe6a6841a7d9d2d72866fa647090dba8eae |
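The return-value rules described in this message can be summarized as a small decision function. This is a toy sketch only: `ToyDbState`, `WaitOutcome` and `CheckWait` are hypothetical names, and the order in which the conditions are checked is an assumption rather than something the message specifies.

```cpp
#include <cassert>

// Hypothetical outcomes mirroring the statuses named in the message.
enum class WaitOutcome { kOk, kShutdownInProgress, kAborted, kKeepWaiting };

// Hypothetical snapshot of the state the wait loop would look at.
struct ToyDbState {
  bool shutting_down;
  bool background_work_paused;
  int pending_jobs;  // scheduled plus unscheduled compactions and flushes
};

// One evaluation of the wait condition (check order is an assumption).
WaitOutcome CheckWait(const ToyDbState& s, bool abort_on_pause) {
  assert(s.pending_jobs >= 0);
  if (s.shutting_down) {
    return WaitOutcome::kShutdownInProgress;
  }
  if (s.background_work_paused && abort_on_pause) {
    return WaitOutcome::kAborted;  // avoid waiting indefinitely while paused
  }
  if (s.pending_jobs == 0) {
    return WaitOutcome::kOk;
  }
  return WaitOutcome::kKeepWaiting;
}
```

With `abort_on_pause` left at `false`, a paused database with pending jobs keeps waiting, which is the indefinite-wait risk the new parameter addresses.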

||

|

|

8f763bdeab |

Record and use the tail size to prefetch table tail (#11406)

Summary: **Context:** We prefetch the tail part of an SST file (i.e., the blocks after data blocks till the end of the file) during each SST file open in the hope of prefetching everything needed for later reads at once, e.g., footer, meta index, filter/index etc. The existing approach to estimate the tail size to prefetch is through `TailPrefetchStats` heuristics introduced in https://github.com/facebook/rocksdb/pull/4156, which has caused small reads in unlucky cases (e.g., a small read into the tail buffer during table open in thread 1 under the same BlockBasedTableFactory object can make thread 2's tail prefetching use a small size that it shouldn't) and is hard to debug. Therefore we decided to record the exact tail size and use it directly to prefetch the tail of the SST instead of relying on heuristics. **Summary:** - Obtain and record in manifest the tail size in `BlockBasedTableBuilder::Finish()` - For backward compatibility, we fall back to TailPrefetchStats and last to simple heuristics that the tail size is a linear portion of the file size - see PR conversation for more. - Make `tail_start_offset` part of the table properties and deduce tail size to record in manifest for external files (e.g., file ingestion, import CF) and db repair (with no access to manifest). Pull Request resolved: https://github.com/facebook/rocksdb/pull/11406 Test Plan: 1. New UT 2. db bench Note: db bench on /tmp/ where direct read is supported is too slow to finish and the default pinning setting in db bench is not helpful to profile # sst read of Get. Therefore I hacked the following to obtain the following comparison. 
``` diff --git a/table/block_based/block_based_table_reader.cc b/table/block_based/block_based_table_reader.cc index bd5669f0f..791484c1f 100644 --- a/table/block_based/block_based_table_reader.cc +++ b/table/block_based/block_based_table_reader.cc @@ -838,7 +838,7 @@ Status BlockBasedTable::PrefetchTail( &tail_prefetch_size); // Try file system prefetch - if (!file->use_direct_io() && !force_direct_prefetch) { + if (false && !file->use_direct_io() && !force_direct_prefetch) { if (!file->Prefetch(prefetch_off, prefetch_len, ro.rate_limiter_priority) .IsNotSupported()) { prefetch_buffer->reset(new FilePrefetchBuffer( diff --git a/tools/db_bench_tool.cc b/tools/db_bench_tool.cc index ea40f5fa0..39a0ac385 100644 --- a/tools/db_bench_tool.cc +++ b/tools/db_bench_tool.cc @@ -4191,6 +4191,8 @@ class Benchmark { std::shared_ptr<TableFactory>(NewCuckooTableFactory(table_options)); } else { BlockBasedTableOptions block_based_options; + block_based_options.metadata_cache_options.partition_pinning = + PinningTier::kAll; block_based_options.checksum = static_cast<ChecksumType>(FLAGS_checksum_type); if (FLAGS_use_hash_search) { ``` Create DB ``` ./db_bench --bloom_bits=3 --use_existing_db=1 --seed=1682546046158958 --partition_index_and_filters=1 --statistics=1 -db=/dev/shm/testdb/ -benchmarks=readrandom -key_size=3200 -value_size=512 -num=1000000 -write_buffer_size=6550000 -disable_auto_compactions=false -target_file_size_base=6550000 -compression_type=none ``` ReadRandom ``` ./db_bench --bloom_bits=3 --use_existing_db=1 --seed=1682546046158958 --partition_index_and_filters=1 --statistics=1 -db=/dev/shm/testdb/ -benchmarks=readrandom -key_size=3200 -value_size=512 -num=1000000 -write_buffer_size=6550000 -disable_auto_compactions=false -target_file_size_base=6550000 -compression_type=none ``` (a) Existing (Use TailPrefetchStats for tail size + use separate prefetch buffer in PartitionedFilter/IndexReader::CacheDependencies()) ``` rocksdb.table.open.prefetch.tail.hit COUNT : 3395 
rocksdb.sst.read.micros P50 : 5.655570 P95 : 9.931396 P99 : 14.845454 P100 : 585.000000 COUNT : 999905 SUM : 6590614 ``` (b) This PR (Record tail size + use the same tail buffer in PartitionedFilter/IndexReader::CacheDependencies()) ``` rocksdb.table.open.prefetch.tail.hit COUNT : 14257 rocksdb.sst.read.micros P50 : 5.173347 P95 : 9.015017 P99 : 12.912610 P100 : 228.000000 COUNT : 998547 SUM : 5976540 ``` As we can see, we increase the prefetch tail hit count and decrease SST read count with this PR 3. Test backward compatibility by stepping through reading with post-PR code on a db generated pre-PR. Reviewed By: pdillinger Differential Revision: D45413346 Pulled By: hx235 fbshipit-source-id: 7d5e36a60a72477218f79905168d688452a4c064 |
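The fallback order described in this message (recorded tail size first, then the `TailPrefetchStats` suggestion, then a size-proportional guess) and the derivation of the tail size from `tail_start_offset` can be sketched as follows. The function names, the use of 0 to mean "unknown", and the fallback fraction are all assumptions made for illustration; the message does not state the actual fraction.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>

// Hypothetical fallback fraction (1/10 of the file), chosen only for this sketch.
constexpr std::uint64_t kFallbackDivisor = 10;

// Tail size derived from the offset where the tail starts.
std::uint64_t TailSizeFromOffset(std::uint64_t file_size,
                                 std::uint64_t tail_start_offset) {
  assert(tail_start_offset <= file_size);
  return file_size - tail_start_offset;
}

// Picks how many bytes to prefetch from the end of the file.
// A value of 0 means "unknown" for both optional inputs (an assumption).
std::uint64_t ChoosePrefetchSize(std::uint64_t file_size,
                                 std::uint64_t recorded_tail_size,
                                 std::uint64_t stats_suggestion) {
  if (recorded_tail_size > 0) {
    return std::min(recorded_tail_size, file_size);  // exact size from manifest
  }
  if (stats_suggestion > 0) {
    return std::min(stats_suggestion, file_size);  // older heuristic
  }
  return file_size / kFallbackDivisor;  // last resort: linear portion of file
}
```

For a 1000-byte file whose tail starts at offset 900, the derived tail size is 100 bytes, and that exact value wins over any heuristic suggestion when it is available.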

||

|

|

4720ba4391 |

Remove RocksDB LITE (#11147)

Summary: We haven't been actively maintaining RocksDB LITE recently and the size must have gone up significantly. We are removing the support. Most of the changes were done through the following command: unifdef -m -UROCKSDB_LITE `git grep -l ROCKSDB_LITE | egrep '[.](cc|h)'` by Peter Dillinger. Other changes were manually applied to build scripts, CircleCI manifests, places where ROCKSDB_LITE is used in an expression, and file db_stress_test_base.cc. Pull Request resolved: https://github.com/facebook/rocksdb/pull/11147 Test Plan: See CI Reviewed By: pdillinger Differential Revision: D42796341 fbshipit-source-id: 4920e15fc2060c2cd2221330a6d0e5e65d4b7fe2 |

||

|

|

2800aa069a |

Remove compressed block cache (#11117)

Summary: Compressed block cache is replaced by compressed secondary cache. Remove the feature. Pull Request resolved: https://github.com/facebook/rocksdb/pull/11117 Test Plan: See CI passes Reviewed By: pdillinger Differential Revision: D42700164 fbshipit-source-id: 6cbb24e460da29311150865f60ecb98637f9f67d |

||

|

|

98d5db5c2e |

Sort L0 files by newly introduced epoch_num (#10922)

Summary:

**Context:**

Sorting L0 files by `largest_seqno` has at least two inconveniences:

- File ingestion and compaction involving ingested files can create files of overlapping seqno range with the existing files. `force_consistency_check=true` will catch such overlapping seqno ranges, even harmless ones.

- For example, consider the following sequence of events ("key@n" indicates key at seqno "n")

- insert k1@1 to memtable m1

- ingest file s1 with k2@2, ingest file s2 with k3@3

- insert k4@4 to m1

- compact files s1, s2 and result in new file s3 of seqno range [2, 3]

- flush m1 and result in new file s4 of seqno range [1, 4]. And `force_consistency_check=true` will think s4 and s3 have file reordering corruption that might cause returning an old value of k1

- However such caught corruption is a false positive since s1, s2 will not have overlapped keys with k1 or whatever inserted into m1 before ingest file s1 by the requirement of file ingestion (otherwise the m1 will be flushed first before any of the file ingestion completes). Therefore there in fact isn't any file reordering corruption.

- Single delete can decrease a file's largest seqno and ordering by `largest_seqno` can introduce a wrong ordering hence file reordering corruption

- For example, consider the following sequence of events ("key@n" indicates key at seqno "n", Credit to ajkr for this example)

- an existing SST s1 contains only k1@1

- insert k1@2 to memtable m1

- ingest file s2 with k3@3, ingest file s3 with k4@4

- insert single delete k5@5 in m1

- flush m1 and result in new file s4 of seqno range [2, 5]

- compact s1, s2, s3 and result in new file s5 of seqno range [1, 4]

- compact s4 and result in new file s6 of seqno range [2] due to single delete

- By the last step, we have file ordering by largest seqno (">" means "newer") : s5 > s6 while s6 contains a newer version of the k1's value (i.e, k1@2) than s5, which is a real reordering corruption. While this can be caught by `force_consistency_check=true`, there isn't a good way to prevent this from happening if ordering by `largest_seqno`

Therefore, we are redesigning the sorting criteria of L0 files to avoid the above inconveniences. Credit to ajkr, we now introduce `epoch_num` which describes the order of a file being flushed or ingested/imported (a compaction output file will have the minimum `epoch_num` among its input files'). This will avoid the above inconveniences in the following ways:

- In the first case above, there will no longer be overlap seqno range check in `force_consistency_check=true` but `epoch_number` ordering check. This will result in file ordering s1 < s2 < s4 (pre-compaction) and s3 < s4 (post-compaction) which won't trigger false positive corruption. See test class `DBCompactionTestL0FilesMisorderCorruption*` for more.

- In the second case above, this will result in file ordering s1 < s2 < s3 < s4 (pre-compacting s1, s2, s3), s5 < s4 (post-compacting s1, s2, s3), s5 < s6 (post-compacting s4), which are correct file ordering without causing any corruption.
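The second case can be replayed with a toy model to see why the two orderings disagree. This is a sketch only: `L0File` and the helper functions are hypothetical, and the epoch numbers 1 to 4 for s1 to s4 are assumed values that follow the flush/ingest order in the example.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>
#include <string>
#include <vector>

// Hypothetical, minimal description of an L0 file.
struct L0File {
  std::string name;
  std::uint64_t largest_seqno;
  std::uint64_t epoch_number;
};

// A compaction output takes the minimum epoch number among its inputs.
std::uint64_t CompactionOutputEpoch(const std::vector<L0File>& inputs) {
  assert(!inputs.empty());
  std::uint64_t epoch = inputs.front().epoch_number;
  for (const L0File& f : inputs) {
    epoch = std::min(epoch, f.epoch_number);
  }
  return epoch;
}

// Returns file names ordered newest first by largest_seqno (old criterion).
std::vector<std::string> NewestFirstBySeqno(std::vector<L0File> files) {
  std::sort(files.begin(), files.end(), [](const L0File& a, const L0File& b) {
    return a.largest_seqno > b.largest_seqno;
  });
  std::vector<std::string> names;
  for (const L0File& f : files) names.push_back(f.name);
  return names;
}

// Returns file names ordered newest first by epoch number (new criterion).
std::vector<std::string> NewestFirstByEpoch(std::vector<L0File> files) {
  std::sort(files.begin(), files.end(), [](const L0File& a, const L0File& b) {
    return a.epoch_number > b.epoch_number;
  });
  std::vector<std::string> names;
  for (const L0File& f : files) names.push_back(f.name);
  return names;
}
```

Taking s1, s2, s3 with largest seqnos 1, 3, 4 and assumed epochs 1, 2, 3, their compaction output s5 has largest seqno 4 and epoch 1. s6, the output of compacting s4, has largest seqno 2 and keeps epoch 4. Ordering by `largest_seqno` places s5 ahead of s6 even though s6 holds the newer k1@2, while ordering by epoch number places s6 first.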

**Summary:**

- Introduce `epoch_number` stored per `ColumnFamilyData` and sort CF's L0 files by their assigned `epoch_number` instead of `largest_seqno`.

- `epoch_number` is increased and assigned upon `VersionEdit::AddFile()` for flush (or similarly for WriteLevel0TableForRecovery) and file ingestion (except for allow_behind_true, which will always get assigned as the `kReservedEpochNumberForFileIngestedBehind`)

- Compaction output file is assigned with the minimum `epoch_number` among input files'

- Refit level: reuse refitted file's epoch_number

- Other paths needing `epoch_number` treatment:

- Import column families: reuse file's epoch_number if exists. If not, assign one based on `NewestFirstBySeqNo`

- Repair: reuse file's epoch_number if exists. If not, assign one based on `NewestFirstBySeqNo`.

- Assigning new epoch_number to a file and adding this file to LSM tree should be atomic. This is guaranteed by assigning epoch_number right upon `VersionEdit::AddFile()`, where this version edit will be applied to the LSM tree shape right after, either while holding the db mutex (e.g., flush, file ingestion, import column family) or because there is only 1 ongoing edit per CF (e.g., WriteLevel0TableForRecovery, Repair).

- Assigning the minimum input epoch number to compaction output file won't misorder L0 files (even through a later `Refit(target_level=0)`). This is because for every key "k" in the input range, a legit compaction will cover a continuous epoch number range of that key. As long as we assign the key "k" the minimum input epoch number, it won't become newer or older than the versions of this key that aren't included in this compaction, hence no misorder.

- Persist `epoch_number` of each file in manifest and recover `epoch_number` on db recovery

- Backward compatibility with old db without `epoch_number` support is guaranteed by assigning `epoch_number` to recovered files by `NewestFirstBySeqno` order. See `VersionStorageInfo::RecoverEpochNumbers()` for more

- Forward compatibility with manifest is guaranteed by flexibility of `NewFileCustomTag`

- Replace `force_consistency_check` on L0 with `epoch_number` and remove false positive check like case 1 with `largest_seqno` above

- Due to backward compatibility issue, we might encounter files with missing epoch number at the beginning of db recovery. We will still use old L0 sorting mechanism (`NewestFirstBySeqno`) to check/sort them till we infer their epoch number. See usages of `EpochNumberRequirement`.

- Remove fix https://github.com/facebook/rocksdb/pull/5958#issue-511150930 and their outdated tests to file reordering corruption because such fix can be replaced by this PR.

- Misc:

- update existing tests with `epoch_number` so make check will pass

- update https://github.com/facebook/rocksdb/pull/5958#issue-511150930 tests to verify corruption is fixed using `epoch_number` and cover universal/fifo compaction/CompactRange/CompactFile cases

- assert db_mutex is held for a few places before calling ColumnFamilyData::NewEpochNumber()

Pull Request resolved: https://github.com/facebook/rocksdb/pull/10922

Test Plan:

- `make check`

- New unit tests under `db/db_compaction_test.cc`, `db/db_test2.cc`, `db/version_builder_test.cc`, `db/repair_test.cc`

- Updated tests (i.e, `DBCompactionTestL0FilesMisorderCorruption*`) under https://github.com/facebook/rocksdb/pull/5958#issue-511150930

- [Ongoing] Compatibility test: manually run

|

||

|

|

3d0d6b8140 |

Make best-efforts recovery verify SST unique ID before Version construction (#10962)

Summary: The check for SST unique IDs is added to best-efforts recovery (i.e., when `Options::best_efforts_recovery` is true). With best_efforts_recovery being true, RocksDB will recover to the latest point in MANIFEST such that all valid SST files included up to this point pass unique ID checks as well. Pull Request resolved: https://github.com/facebook/rocksdb/pull/10962 Test Plan: make check Reviewed By: pdillinger Differential Revision: D41378241 Pulled By: riversand963 fbshipit-source-id: a036064e2c17dec13d080a24ef2a9f85d607b16c |

||

|

|

db9cbddc6f |

Deflake DBTest2.TraceAndReplay by relaxing latency checks (#10979)

Summary: Since the latency measurement uses real time it is possible for the operation to complete in zero microseconds and then fail these checks. We saw this with the operation that invokes Get() on an invalid CF. This PR relaxes the assertions to allow for operations completing in zero microseconds. Pull Request resolved: https://github.com/facebook/rocksdb/pull/10979 Reviewed By: riversand963 Differential Revision: D41478300 Pulled By: ajkr fbshipit-source-id: 50ef096bd8f0162b31adb46f54ae6ddc337d0a5e |

||

|

|

5cf6ab6f31 |

Ran clang-format on db/ directory (#10910)

Summary: Ran `find ./db/ -type f | xargs clang-format -i`. Excluded minor changes it tried to make on db/db_impl/. Everything else it changed was directly under db/ directory. Included minor manual touchups mentioned in PR commit history. Pull Request resolved: https://github.com/facebook/rocksdb/pull/10910 Reviewed By: riversand963 Differential Revision: D40880683 Pulled By: ajkr fbshipit-source-id: cfe26cda05b3fb9a72e3cb82c286e21d8c5c4174 |

||

|

|

f726d29a82 |

Allow penultimate level output for the last level only compaction (#10822)

Summary: Allow a last-level-only compaction to output to the penultimate level if the penultimate level is empty. This also blocks other compactions from outputting to the penultimate level. (It includes the PR https://github.com/facebook/rocksdb/issues/10829.) Pull Request resolved: https://github.com/facebook/rocksdb/pull/10822 Reviewed By: siying Differential Revision: D40389180 Pulled By: jay-zhuang fbshipit-source-id: 4e5dcdce307795b5e07b5dd1fa29dd75bb093bad |

||

|

|

f3cc66632b |

Align compaction output file boundaries to the next level ones (#10655)

Summary:

Try to align the compaction output file boundaries to the next level ones

(grandparent level), to reduce the level compaction write-amplification.

In level compaction, there is "wasted" data at the beginning and end of the

output level files. Aligning the file boundaries can avoid such "wasted" compaction.

This PR tries to align non-bottommost-level file boundaries to those of the

next level. It may cut a file when the file size is large enough (at least

50% of target_file_size) and not too large (2x target_file_size).

db_bench shows about 12.56% compaction reduction:

```

TEST_TMPDIR=/data/dbbench2 ./db_bench --benchmarks=fillrandom,readrandom -max_background_jobs=12 -num=400000000 -target_file_size_base=33554432

# baseline:

Flush(GB): cumulative 25.882, interval 7.216

Cumulative compaction: 285.90 GB write, 162.36 MB/s write, 269.68 GB read, 153.15 MB/s read, 2926.7 seconds

# with this change:

Flush(GB): cumulative 25.882, interval 7.753

Cumulative compaction: 249.97 GB write, 141.96 MB/s write, 233.74 GB read, 132.74 MB/s read, 2534.9 seconds

```

The compaction simulator shows a similar result (14% with 100G random data).

As a side effect, with this PR, the SST file size can exceed the

target_file_size, but is capped at 2x target_file_size. And there will be

smaller files. Here are file size statistics when loading 100GB with the target

file size 32MB:

```

baseline this_PR

count 1.656000e+03 1.705000e+03

mean 3.116062e+07 3.028076e+07

std 7.145242e+06 8.046139e+06

```

The feature is enabled by default. To revert to the old behavior, disable it

with `AdvancedColumnFamilyOptions.level_compaction_dynamic_file_size = false`.

Also includes https://github.com/facebook/rocksdb/issues/1963, which cuts the file before a skippable grandparent file. This helps

use cases such as a user adding two or more non-overlapping data ranges at the same

time: it can reduce the overlap of the datasets in the lower levels.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/10655

Reviewed By: cbi42

Differential Revision: D39552321

Pulled By: jay-zhuang

fbshipit-source-id: 640d15f159ab0cd973f2426cfc3af266fc8bdde2

|

||

|

|

ef443cead4 |

Refactor to avoid confusing "raw block" (#10408)

Summary: We have a lot of confusing code because of mixed, sometimes completely opposite uses of the term "raw block" or "raw contents", sometimes within the same source file. For example, in `BlockBasedTableBuilder`, `raw_block_contents` and `raw_size` generally referred to uncompressed block contents and size, while `WriteRawBlock` referred to writing a block that is already compressed if it is going to be. Meanwhile, in `BlockBasedTable`, `raw_block_contents` either referred to a (maybe compressed) block with trailer, or a maybe compressed block maybe without trailer. (Note: left as follow-up work to use C++ typing to better sort out the various kinds of BlockContents.)

This change primarily tries to apply some consistent terminology around the kinds of block representations, avoiding the unclear "raw". (Any meaning of "raw" assumes some bias toward the storage layer or toward the logical data layer.)

Preferred terminology:

* **Serialized block** - bytes that go into storage. For block-based table (usually the case) this includes the block trailer. WART: block `size` may or may not include the trailer; need to be clear about whether it does or not.
* **Maybe compressed block** - like a serialized block, but without the trailer (or no promise of including a trailer). Must be accompanied by a CompressionType.
* **Uncompressed block** - "payload" bytes that are either stored with no compression, used as input to compression function, or result of decompression function.
* **Parsed block** - an in-memory form of a block in block cache, as it is used by the table reader. Different C++ types are used depending on the block type (see block_like_traits.h).

Other refactorings:

* Misc corrections/improvements of internal API comments
* Remove a few misleading / unhelpful / redundant comments.
* Use move semantics in some places to simplify contracts
* Use better parameter names to indicate which parameters are used for outputs
* Remove some extraneous `extern`
* Various clean-ups to `CacheDumperImpl` (mostly unnecessary code)

Pull Request resolved: https://github.com/facebook/rocksdb/pull/10408

Test Plan: existing tests

Reviewed By: akankshamahajan15

Differential Revision: D38172617

Pulled By: pdillinger

fbshipit-source-id: ccb99299f324ac5ca46996d34c5089621a4f260c |

||

|

|

6de7081cf3 |

Always verify SST unique IDs on SST file open (#10532)

Summary: Although we've been tracking SST unique IDs in the DB manifest unconditionally, checking has been opt-in and with an extra pass at DB::Open time. This changes the behavior of `verify_sst_unique_id_in_manifest` to check unique ID against manifest every time an SST file is opened through table cache (normal DB operations), replacing the explicit pass over files at DB::Open time. This change also enables the option by default and removes the "EXPERIMENTAL" designation. One possible criticism is that the option no longer ensures the integrity of a DB at Open time. This is far from an all-or-nothing issue. Verifying the IDs of all SST files hardly ensures all the data in the DB is readable. (VerifyChecksum is supposed to do that.) Also, with max_open_files=-1 (default, extremely common), all SST files are opened at DB::Open time anyway. Implementation details: * `VerifySstUniqueIdInManifest()` functions are the extra/explicit pass that is now removed. * Unit tests that manipulate/corrupt table properties have to opt out of this check, because that corrupts the "actual" unique id. (And even for testing we don't currently have a mechanism to set "no unique id" in the in-memory file metadata for new files.) * A lot of other unit test churn relates to (a) default checking on, and (b) checking on SST open even without DB::Open (e.g. on flush) * Use `FileMetaData` for more `TableCache` operations (in place of `FileDescriptor`) so that we have access to the unique_id whenever we might need to open an SST file. **There is the possibility of performance impact because we can no longer use the more localized `fd` part of an `FdWithKeyRange` but instead follow the `file_metadata` pointer. However, this change (possible regression) is only done for `GetMemoryUsageByTableReaders`.** * Removed a completely unnecessary constructor overload of `TableReaderOptions` Possible follow-up: * Verification only happens when opening through table cache. 
Are there more places where this should happen? * Improve error message when there is a file size mismatch vs. manifest (FIXME added in the appropriate place). * I'm not sure there's a justification for `FileDescriptor` to be distinct from `FileMetaData`. * I'm skeptical that `FdWithKeyRange` really still makes sense for optimizing some data locality by duplicating some data in memory, but I could be wrong. * An unnecessary overload of NewTableReader was recently added, in the public API nonetheless (though unusable there). It should be cleaned up to put most things under `TableReaderOptions`. Pull Request resolved: https://github.com/facebook/rocksdb/pull/10532 Test Plan: updated unit tests Performance test showing no significant difference (just noise I think): `./db_bench -benchmarks=readwhilewriting[-X10] -num=3000000 -disable_wal=1 -bloom_bits=8 -write_buffer_size=1000000 -target_file_size_base=1000000` Before: readwhilewriting [AVG 10 runs] : 68702 (± 6932) ops/sec After: readwhilewriting [AVG 10 runs] : 68239 (± 7198) ops/sec Reviewed By: jay-zhuang Differential Revision: D38765551 Pulled By: pdillinger fbshipit-source-id: a827a708155f12344ab2a5c16e7701c7636da4c2 |

||

|

|

275cd80cdb |

Add a blob-specific cache priority (#10461)

Summary: RocksDB's `Cache` abstraction currently supports two priority levels for items: high (used for frequently accessed/highly valuable SST metablocks like index/filter blocks) and low (used for SST data blocks). Blobs are typically lower-value targets for caching than data blocks, since 1) with BlobDB, data blocks containing blob references conceptually form an index structure which has to be consulted before we can read the blob value, and 2) cached blobs represent only a single key-value, while cached data blocks generally contain multiple KVs. Since we would like to make it possible to use the same backing cache for the block cache and the blob cache, it would make sense to add a new, lower-than-low cache priority level (bottom level) for blobs so data blocks are prioritized over them. This task is a part of https://github.com/facebook/rocksdb/issues/10156 Pull Request resolved: https://github.com/facebook/rocksdb/pull/10461 Reviewed By: siying Differential Revision: D38672823 Pulled By: ltamasi fbshipit-source-id: 90cf7362036563d79891f47be2cc24b827482743 |

||

|

|

3f763763aa |

Change bottommost_temperature to last_level_temperature (#10471)

Summary: Change the tiered compaction option from `bottommost_temperature` to `last_level_temperature`. The old option is kept for migration purposes only; it behaves the same as `last_level_temperature` and will be removed in the next release. Pull Request resolved: https://github.com/facebook/rocksdb/pull/10471 Test Plan: CI Reviewed By: siying Differential Revision: D38450621 Pulled By: jay-zhuang fbshipit-source-id: cc1cdf8bad409376fec0152abc0a64fb72a91527 |

||

|

|

65036e4217 |

Revert "Add a blob-specific cache priority (#10309)" (#10434)

Summary:

This reverts commit

|

||

|

|

8d178090be |

Add a blob-specific cache priority (#10309)

Summary: RocksDB's `Cache` abstraction currently supports two priority levels for items: high (used for frequently accessed/highly valuable SST metablocks like index/filter blocks) and low (used for SST data blocks). Blobs are typically lower-value targets for caching than data blocks, since 1) with BlobDB, data blocks containing blob references conceptually form an index structure which has to be consulted before we can read the blob value, and 2) cached blobs represent only a single key-value, while cached data blocks generally contain multiple KVs. Since we would like to make it possible to use the same backing cache for the block cache and the blob cache, it would make sense to add a new, lower-than-low cache priority level (bottom level) for blobs so data blocks are prioritized over them. This task is a part of https://github.com/facebook/rocksdb/issues/10156 Pull Request resolved: https://github.com/facebook/rocksdb/pull/10309 Reviewed By: ltamasi Differential Revision: D38211655 Pulled By: gangliao fbshipit-source-id: 65ef33337db4d85277cc6f9782d67c421ad71dd5 |

||

|

|

faa0f9723c |

Tiered compaction: integrate Seqno time mapping with per key placement (#10370)

Summary: Use the sequence-number-to-time mapping to decide whether a key is hot during compaction, and place it in the corresponding level. Note: the feature is not complete; level compaction will run indefinitely until all penultimate level data is cold and small enough to not trigger compaction. Pull Request resolved: https://github.com/facebook/rocksdb/pull/10370 Test Plan: CI * Run basic db_bench for universal compaction manually Reviewed By: siying Differential Revision: D37892338 Pulled By: jay-zhuang fbshipit-source-id: 792bbd91b1ccc2f62b5d14c53118434bcaac4bbe |

||

|

|

69a18b9bad |

VerifySstUniqueIds status is overridden for multi CFs (#10247)

Summary: There is a bug where we only report the last CF's VerifySstUniqueIds() result: https://github.com/facebook/rocksdb/pull/9990#discussion_r877268810 Pull Request resolved: https://github.com/facebook/rocksdb/pull/10247 Test Plan: CI Reviewed By: pdillinger Differential Revision: D37384265 Pulled By: jay-zhuang fbshipit-source-id: d462ad0eab39c9145c45a3db9c45539d5d76f7dd |

||

|

|

a9565ccb26 |

Try to trivial move more than one file (#10190)

Summary: In leveled compaction, try to trivial move more than one file if possible, up to 4 files or max_compaction_bytes. This allows higher write throughput for some use cases where data is loaded in sequential order and applying compaction results is the bottleneck. When a file is picked for compaction and it has no overlapping files in the next level, try to expand to the next file as long as there is still no overlap. Pull Request resolved: https://github.com/facebook/rocksdb/pull/10190 Test Plan: Add some unit tests. For performance, try to run ./db_bench_multi_move --benchmarks=fillseq --compression_type=lz4 --write_buffer_size=5000000 --num=100000000 --value_size=1000 -level_compaction_dynamic_level_bytes Together with https://github.com/facebook/rocksdb/pull/10188 , stalling will be eliminated in this benchmark. Reviewed By: jay-zhuang Differential Revision: D37230647 fbshipit-source-id: 42b260f545c46abc5d90335ac2bbfcd09602b549 |

||

|

|

ad135f3ffd |

Document design/specification bugs with auto_prefix_mode (#10144)

Summary: auto_prefix_mode is designed to use prefix filtering in a particular "safe" set of cases where the upper bound and the seek key have different prefixes: where the upper bound is the "same length immediate successor". These conditions are not sufficient to guarantee the same iteration results as total_order_seek if the DB contains "short" keys, less than the "full" (maximum) prefix length. We are not simply disabling the optimization in these successor cases because it is likely that users are essentially getting what they want out of existing usage. Especially if users are constructing successor bounds with the intention of doing a prefix-bounded seek, the existing behavior is more expected than the total_order_seek behavior. Consequently, for now we reconcile the bad specification of behavior by documenting the existing mismatch with total_order_seek. A closely related issue affects hypothetical comparators like ReverseBytewiseComparator: if they "correctly" implement IsSameLengthImmediateSuccessor, auto_prefix_mode could omit more entries (other than "short" keys noted above). Luckily, the built-in ReverseBytewiseComparator has an "incorrect" implementation of IsSameLengthImmediateSuccessor that effectively prevents prefix optimization and, thus, the bug. This is now documented as a new constraint on IsSameLengthImmediateSuccessor, and the implementation tweaked to be simply "safe" rather than "incorrect". This change also includes unit test updates to demonstrate the above issues. (Test was cleaned up for readability and simplicity.) Intended follow-up: * Tweak documented axioms for prefix_extractor (more details then) * Consider some sort of fix for this case. I don't know what that would look like without breaking the performance of existing code. 
Perhaps if all keys in an SST file have prefixes that are "full length," we can track that fact and use it to allow optimization with the "same length immediate successor", but that would only apply to new files. * Consider a better system of specifying prefix bounds Pull Request resolved: https://github.com/facebook/rocksdb/pull/10144 Test Plan: test updates included Reviewed By: siying Differential Revision: D37052710 Pulled By: pdillinger fbshipit-source-id: 5f63b7d65f3f214e4b143e0f9aa1749527c587db |

||

|

|

3ee6c9baec |

Consolidate manual_compaction_paused_ check (#10070)

Summary: As pointed out by [https://github.com/facebook/rocksdb/pull/8351#discussion_r645765422](https://github.com/facebook/rocksdb/pull/8351#discussion_r645765422), the checks of `manual_compaction_paused` and `manual_compaction_canceled` can be consolidated by setting `*canceled` to true in `DisableManualCompaction()` and setting `*canceled` to false in the last call to `EnableManualCompaction()`. Changed Tests: The original `DBTest2.PausingManualCompaction1` uses a callback function to increase `manual_compaction_paused`, and the original CompactionJob/CompactionIterator with `manual_compaction_paused` can detect this. I changed the callback function so that it sets `*canceled` to true if `canceled` is not `nullptr` (to notify CompactionJob/CompactionIterator that the compaction has been canceled). Pull Request resolved: https://github.com/facebook/rocksdb/pull/10070 Test Plan: This change does not introduce new features, only slight differences in the compaction implementation. Run the same manual compaction unit tests as before (e.g., PausingManualCompaction[1-4], CancelManualCompaction[1-2], CancelManualCompactionWithListener in db_test2, and db_compaction_test). Reviewed By: ajkr Differential Revision: D36949133 Pulled By: littlepig2013 fbshipit-source-id: c5dc4c956fbf8f624003a0f5ad2690240063a821 |

||

|

|

a020031552 |

Add kLastTemperature as temperature high bound (#10044)

Summary: Only used as the temperature high bound in current code; it may increase as more temperatures are added. Pull Request resolved: https://github.com/facebook/rocksdb/pull/10044 Test Plan: ci Reviewed By: siying Differential Revision: D36633410 Pulled By: jay-zhuang fbshipit-source-id: eecdfa7623c31778c31d789902eacf78aad7b482 |

||

|

|

23f34c7ae5 |

Skip ZSTD dict tests if the version doesn't support it (#10046)

Summary: For example, the default ZSTD version for ubuntu20 is 1.4.4, which will fail the test `PresetCompressionDict`: ``` db/db_test_util.cc:607: Failure Invalid argument: zstd finalizeDictionary cannot be used because ZSTD 1.4.5+ is not linked with the binary. terminate called after throwing an instance of 'testing::internal::GoogleTestFailureException' ``` Pull Request resolved: https://github.com/facebook/rocksdb/pull/10046 Test Plan: test pass with old zstd Reviewed By: cbi42 Differential Revision: D36640067 Pulled By: jay-zhuang fbshipit-source-id: b1c49fb7295f57f4515ce4eb3a52ae7d7e45da86 |

||

|

|

cc23b46da1 |

Support using ZDICT_finalizeDictionary to generate zstd dictionary (#9857)

Summary:

An untrained dictionary is currently simply the concatenation of several samples. The ZSTD API, ZDICT_finalizeDictionary(), can improve such a dictionary's effectiveness at low cost. This PR changes how the dictionary is created: it calls the ZSTD ZDICT_finalizeDictionary() API instead of creating a raw content dictionary (when max_dict_buffer_bytes > 0), and passes in all buffered uncompressed data blocks as samples.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/9857

Test Plan:

#### db_bench test for cpu/memory of compression+decompression and space saving on synthetic data:

Set up: change the parameter [here](

|

||

|

|

280b9f371a |

Fix auto_prefix_mode performance with partitioned filters (#10012)

Summary: Essentially refactored the RangeMayExist implementation in FullFilterBlockReader to FilterBlockReaderCommon so that it applies to partitioned filters as well. (The function is not called for the block-based filter case.) RangeMayExist is essentially a series of checks around a possible PrefixMayExist, and I'm confident those checks should be the same for partitioned as for full filters. (I think it's likely that bugs remain in those checks, but this change is overall a simplifying one.) Added auto_prefix_mode support to db_bench Other small fixes as well Fixes https://github.com/facebook/rocksdb/issues/10003 Pull Request resolved: https://github.com/facebook/rocksdb/pull/10012 Test Plan: Expanded unit test that uses statistics to check for filter optimization, fails without the production code changes here Performance: populate two DBs with ``` TEST_TMPDIR=/dev/shm/rocksdb_nonpartitioned ./db_bench -benchmarks=fillrandom -num=10000000 -disable_wal=1 -write_buffer_size=30000000 -bloom_bits=16 -compaction_style=2 -fifo_compaction_max_table_files_size_mb=10000 -fifo_compaction_allow_compaction=0 -prefix_size=8 TEST_TMPDIR=/dev/shm/rocksdb_partitioned ./db_bench -benchmarks=fillrandom -num=10000000 -disable_wal=1 -write_buffer_size=30000000 -bloom_bits=16 -compaction_style=2 -fifo_compaction_max_table_files_size_mb=10000 -fifo_compaction_allow_compaction=0 -prefix_size=8 -partition_index_and_filters ``` Observe no measurable change in non-partitioned performance ``` TEST_TMPDIR=/dev/shm/rocksdb_nonpartitioned ./db_bench -benchmarks=seekrandom[-X1000] -num=10000000 -readonly -bloom_bits=16 -compaction_style=2 -fifo_compaction_max_table_files_size_mb=10000 -fifo_compaction_allow_compaction=0 -prefix_size=8 -auto_prefix_mode -cache_index_and_filter_blocks=1 -cache_size=1000000000 -duration 20 ``` Before: seekrandom [AVG 15 runs] : 11798 (± 331) ops/sec After: seekrandom [AVG 15 runs] : 11724 (± 315) ops/sec Observe big improvement with partitioned (also supported 
by bloom use statistics) ``` TEST_TMPDIR=/dev/shm/rocksdb_partitioned ./db_bench -benchmarks=seekrandom[-X1000] -num=10000000 -readonly -bloom_bits=16 -compaction_style=2 -fifo_compaction_max_table_files_size_mb=10000 -fifo_compaction_allow_compaction=0 -prefix_size=8 -partition_index_and_filters -auto_prefix_mode -cache_index_and_filter_blocks=1 -cache_size=1000000000 -duration 20 ``` Before: seekrandom [AVG 12 runs] : 2942 (± 57) ops/sec After: seekrandom [AVG 12 runs] : 7489 (± 184) ops/sec Reviewed By: siying Differential Revision: D36469796 Pulled By: pdillinger fbshipit-source-id: bcf1e2a68d347b32adb2b27384f945434e7a266d |

||

|

|

c6d326d3d7 |

Track SST unique id in MANIFEST and verify (#9990)

Summary: Start tracking the SST unique id in MANIFEST, which is verified against the SST properties to make sure the SST file is not overwritten or misplaced. A DB option `try_verify_sst_unique_id` is introduced to enable/disable the verification (default is false). If enabled, it opens all SST files during DB-open to read the unique_id from table properties, so it's recommended to use it with `max_open_files = -1` to pre-open the files. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9990 Test Plan: unittests, format-compatible test, mini-crash Reviewed By: anand1976 Differential Revision: D36381863 Pulled By: jay-zhuang fbshipit-source-id: 89ea2eb6b35ed3e80ead9c724eb096083eaba63f |

||

|

|

4da34b97ee |

Set Read rate limiter priority dynamically and pass it to FS (#9996)

Summary:

### Context:

Background compactions and flush generate large reads and writes, and can be long running, especially for universal compaction. In some cases, this can impact foreground reads and writes by users.

### Solution

User, Flush, and Compaction reads share some code paths. For this task, we update the rate_limiter_priority in ReadOptions for code paths (e.g. FindTable (mainly in BlockBasedTable::Open()) and various iterators), and eventually update the rate_limiter_priority in IOOptions for FSRandomAccessFile.

**This PR is for the Read path.** The **Read:** dynamic priorities for the different states are listed as follows:

| State | Normal | Delayed | Stalled |
| ----- | ------ | ------- | ------- |
| Flush (verification read in BuildTable()) | IO_USER | IO_USER | IO_USER |
| Compaction | IO_LOW | IO_USER | IO_USER |
| User | User provided | User provided | User provided |

We will respect the read_options that the user provided and will not set it. The only SST read for Flush is the verification read in BuildTable(). It is regarded as a user read.

**Details**

1. Set read_options.rate_limiter_priority dynamically:
   - User: Do not update the read_options. Use the read_options that the user provided.
   - Compaction: Update read_options in CompactionJob::ProcessKeyValueCompaction().
   - Flush: Update read_options in BuildTable().
2. Pass the rate limiter priority to FSRandomAccessFile functions:
   - After calling the FindTable(), read_options is passed through GetTableReader(table_cache.cc), BlockBasedTableFactory::NewTableReader(block_based_table_factory.cc), and BlockBasedTable::Open(). The Open() needs some updates for the ReadOptions variable and the updates are also needed for the called functions, including PrefetchTail(), PrepareIOOptions(), ReadFooterFromFile(), ReadMetaIndexblock(), ReadPropertiesBlock(), PrefetchIndexAndFilterBlocks(), and ReadRangeDelBlock().
   - In RandomAccessFileReader, the functions to be updated include Read(), MultiRead(), ReadAsync(), and Prefetch().
   - Update the downstream functions of NewIndexIterator(), NewDataBlockIterator(), and BlockBasedTableIterator().

### Test Plans

Add unit tests.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/9996

Reviewed By: anand1976

Differential Revision: D36452483

Pulled By: gitbw95

fbshipit-source-id: 60978204a4f849bb9261cb78d9bc1cb56d6008cf |

||

|

|

736a7b5433 |

Remove own ToString() (#9955)

Summary: ToString() was created because some platforms did not support std::to_string(). However, we have already been using std::to_string() by mistake for 16 months (in db/db_info_dumper.cc). This commit simply removes ToString(). Pull Request resolved: https://github.com/facebook/rocksdb/pull/9955 Test Plan: Watch CI tests Reviewed By: riversand963 Differential Revision: D36176799 fbshipit-source-id: bdb6dcd0e3a3ab96a1ac810f5d0188f684064471 |

||

|

|

a8a422e962 |

Add manifest fix-up utility for file temperatures (#9683)

Summary: The goal of this change is to allow changes to the "current" (in FileSystem) file temperatures to feed back into DB metadata, so that they can inform decisions and stats reporting. In part because of modular code factoring, it doesn't seem easy to do this automagically, where opening an SST file and observing current Temperature different from expected would trigger a change in metadata and DB manifest write (essentially giving the deep read path access to the write path). It is also difficult to do this while the DB is open because of the limitations of LogAndApply. This change allows updating file temperature metadata on a closed DB using an experimental utility function UpdateManifestForFilesState() or `ldb update_manifest --update_temperatures`. This should suffice for "migration" scenarios where outside tooling has placed or re-arranged DB files into a (different) tiered configuration without going through RocksDB itself (currently, only compaction can change temperature metadata). Some details: * Refactored and added unit test for `ldb unsafe_remove_sst_file` because of shared functionality * Pulled in autovector.h changes from https://github.com/facebook/rocksdb/issues/9546 to fix SuperVersionContext move constructor (related to an older draft of this change) Possible follow-up work: * Support updating manifest with file checksums, such as when a new checksum function is used and want existing DB metadata updated for it. * It's possible that for some repair scenarios, lighter weight than full repair, we might want to support UpdateManifestForFilesState() to modify critical file details like size or checksum using same algorithm. But let's make sure these are differentiated from modifying file details in ways that don't suspect corruption (or require extreme trust). 
Pull Request resolved: https://github.com/facebook/rocksdb/pull/9683 Test Plan: unit tests added Reviewed By: jay-zhuang Differential Revision: D34798828 Pulled By: pdillinger fbshipit-source-id: cfd83e8fb10761d8c9e7f9c020d68c9106a95554 |

||

|

|

cff0d1e8e6 |

New backup meta schema, with file temperatures (#9660)

Summary: The primary goal of this change is to add support for backing up and restoring (applying on restore) file temperature metadata, without committing to either the DB manifest or the FS reported "current" temperatures being exclusive "source of truth". To achieve this goal, we need to add temperature information to backup metadata, which requires updated backup meta schema. Fortunately I prepared for this in https://github.com/facebook/rocksdb/issues/8069, which began forward compatibility in version 6.19.0 for this kind of schema update. (Previously, backup meta schema was not extensible! Making this schema update public will allow some other "nice to have" features like taking backups with hard links, and avoiding crc32c checksum computation when another checksum is already available.) While schema version 2 is newly public, the default schema version is still 1. Until we change the default, users will need to set to 2 to enable features like temperature data backup+restore. New metadata like temperature information will be ignored with a warning in versions before this change and since 6.19.0. The metadata is considered ignorable because a functioning DB can be restored without it. Some detail: * Some renaming because "future schema" is now just public schema 2. * Initialize some atomics in TestFs (linter reported) * Add temperature hint support to SstFileDumper (used by BackupEngine) Pull Request resolved: https://github.com/facebook/rocksdb/pull/9660 Test Plan: related unit test majorly updated for the new functionality, including some shared testing support for tracking temperatures in a FS. Some other tests and testing hooks into production code also updated for making the backup meta schema change public. Reviewed By: ajkr Differential Revision: D34686968 Pulled By: pdillinger fbshipit-source-id: 3ac1fa3e67ee97ca8a5103d79cc87d872c1d862a |

||

|

|

ce60d0cbe5 |

Test refactoring for Backups+Temperatures (#9655)

Summary: In preparation for more support for file Temperatures in BackupEngine, this change does some test refactoring: * Move DBTest2::BackupFileTemperature test to BackupEngineTest::FileTemperatures, with some updates to make it work in the new home. This test will soon be expanded for deeper backup work. * Move FileTemperatureTestFS from db_test2.cc to db_test_util.h, to support sharing because of above moved test, but split off the "no link" part to the test needing it. * Use custom FileSystems in backupable_db_test rather than custom Envs, because going through Env file interfaces doesn't support temperatures. * Fix RemapFileSystem to map DirFsyncOptions::renamed_new_name parameter to FsyncWithDirOptions, which was required because this limitation caused a crash only after moving to higher fidelity of FileSystem interface (vs. LegacyDirectoryWrapper throwing away some parameter details) * `backupable_options_` -> `engine_options_` as part of the ongoing work to get rid of the obsolete "backupable" naming. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9655 Test Plan: test code updates only Reviewed By: jay-zhuang Differential Revision: D34622183 Pulled By: pdillinger fbshipit-source-id: f24b7a596a89b9e089e960f4e5d772575513e93f |

||

|

|

8ca433f912 |

Fix test race conditions with OnFlushCompleted() (#9617)

Summary: We often see flaky tests due to `DB::Flush()` or `DBImpl::TEST_WaitForFlushMemTable()` not waiting until event listeners complete. For example, https://github.com/facebook/rocksdb/issues/9084, https://github.com/facebook/rocksdb/issues/9400, https://github.com/facebook/rocksdb/issues/9528, plus two new ones this week: "EventListenerTest.OnSingleDBFlushTest" and "DBFlushTest.FireOnFlushCompletedAfterCommittedResult". I ran a `make check` with the below race condition-coercing patch and fixed issues it found besides old BlobDB. ``` diff --git a/db/db_impl/db_impl_compaction_flush.cc b/db/db_impl/db_impl_compaction_flush.cc index 0e1864788..aaba68c4a 100644 --- a/db/db_impl/db_impl_compaction_flush.cc +++ b/db/db_impl/db_impl_compaction_flush.cc @@ -861,6 +861,8 @@ void DBImpl::NotifyOnFlushCompleted( mutable_cf_options.level0_stop_writes_trigger); // release lock while notifying events mutex_.Unlock(); + bg_cv_.SignalAll(); + sleep(1); { for (auto& info : *flush_jobs_info) { info->triggered_writes_slowdown = triggered_writes_slowdown; ``` The reason I did not fix old BlobDB issues is because it appears to have a fundamental (non-test) issue. In particular, it uses an EventListener to keep track of the files. OnFlushCompleted() could be delayed until even after a compaction involving that flushed file completes, causing the compaction to unexpectedly delete an untracked file. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9617 Test Plan: `make check` including the race condition coercing patch Reviewed By: hx235 Differential Revision: D34384022 Pulled By: ajkr fbshipit-source-id: 2652ded39b415277c5d6a628414345223930514e |

||

|

|

3379d1466f |

Fix DBTest2.BackupFileTemperature memory leak (#9610)

Summary: Valgrind was failing with the below error because we forgot to destroy the `BackupEngine` object: ``` ==421173== Command: ./db_test2 --gtest_filter=DBTest2.BackupFileTemperature ==421173== Note: Google Test filter = DBTest2.BackupFileTemperature [==========] Running 1 test from 1 test case. [----------] Global test environment set-up. [----------] 1 test from DBTest2 [ RUN ] DBTest2.BackupFileTemperature --421173-- WARNING: unhandled amd64-linux syscall: 425 --421173-- You may be able to write your own handler. --421173-- Read the file README_MISSING_SYSCALL_OR_IOCTL. --421173-- Nevertheless we consider this a bug. Please report --421173-- it at http://valgrind.org/support/bug_reports.html. [ OK ] DBTest2.BackupFileTemperature (3366 ms) [----------] 1 test from DBTest2 (3371 ms total) [----------] Global test environment tear-down [==========] 1 test from 1 test case ran. (3413 ms total) [ PASSED ] 1 test. ==421173== ==421173== HEAP SUMMARY: ==421173== in use at exit: 13,042 bytes in 195 blocks ==421173== total heap usage: 26,022 allocs, 25,827 frees, 27,555,265 bytes allocated ==421173== ==421173== 8 bytes in 1 blocks are possibly lost in loss record 6 of 167 ==421173== at 0x4838DBF: operator new(unsigned long) (vg_replace_malloc.c:344) ==421173== by 0x8D4606: allocate (new_allocator.h:114) ==421173== by 0x8D4606: allocate (alloc_traits.h:445) ==421173== by 0x8D4606: _M_allocate (stl_vector.h:343) ==421173== by 0x8D4606: reserve (vector.tcc:78) ==421173== by 0x8D4606: rocksdb::BackupEngineImpl::Initialize() (backupable_db.cc:1174) ==421173== by 0x8D5473: Initialize (backupable_db.cc:918) ==421173== by 0x8D5473: rocksdb::BackupEngine::Open(rocksdb::BackupEngineOptions const&, rocksdb::Env*, rocksdb::BackupEngine**) (backupable_db.cc:937) ==421173== by 0x50AC8F: Open (backup_engine.h:585) ==421173== by 0x50AC8F: rocksdb::DBTest2_BackupFileTemperature_Test::TestBody() (db_test2.cc:6996) ... 
``` Pull Request resolved: https://github.com/facebook/rocksdb/pull/9610 Test Plan: ``` $ make -j24 ROCKSDBTESTS_SUBSET=db_test2 valgrind_check_some ``` Reviewed By: akankshamahajan15 Differential Revision: D34371210 Pulled By: ajkr fbshipit-source-id: 68154fcb0c51b28222efa23fa4ee02df8d925a18 |

||

|

|

d3a2f284d9 |

Add Temperature info in NewSequentialFile() (#9499)

Summary: Pass temperature hint information from RocksDB through the `NewSequentialFile()` API. Backup and checkpoint operations open their source files with `NewSequentialFile()`, so those opens now carry the temperature hints. Other operations are not covered. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9499 Test Plan: Added unittest Reviewed By: pdillinger Differential Revision: D34006115 Pulled By: jay-zhuang fbshipit-source-id: 568b34602b76520e53128672bd07e9d886786a2f |

||

|

|

f4b2500e12 |

Add last level and non-last level read statistics (#9519)

Summary: Add last level and non-last level read statistics: ``` LAST_LEVEL_READ_BYTES, LAST_LEVEL_READ_COUNT, NON_LAST_LEVEL_READ_BYTES, NON_LAST_LEVEL_READ_COUNT, ``` Pull Request resolved: https://github.com/facebook/rocksdb/pull/9519 Test Plan: added unittest Reviewed By: siying Differential Revision: D34062539 Pulled By: jay-zhuang fbshipit-source-id: 908644c3050878b4234febdc72e3e19d89af38cd |

||

|

|

2fbc672732 |

Add temperature information to the event listener callbacks (#9591)

Summary: RocksDB tries to provide temperature information in the event listener callbacks. The information is not guaranteed, as some operations, such as backup, do not have it. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9591 Test Plan: Added unittest Reviewed By: siying, pdillinger Differential Revision: D34309339 Pulled By: jay-zhuang fbshipit-source-id: 4aca4f270f99fa49186d85d300da42594663d6d7 |

||

|

|

babe56ddba |

Add rate limiter priority to ReadOptions (#9424)

Summary: Users can set the priority for file reads associated with their operation by setting `ReadOptions::rate_limiter_priority` to something other than `Env::IO_TOTAL`. Rate limiting `VerifyChecksum()` and `VerifyFileChecksums()` is the motivation for this PR, so it also includes benchmarks and minor bug fixes to get that working. `RandomAccessFileReader::Read()` already had support for rate limiting compaction reads. I changed that rate limiting to be non-specific to compaction, but rather performed according to the passed in `Env::IOPriority`. Now the compaction read rate limiting is supported by setting `rate_limiter_priority = Env::IO_LOW` on its `ReadOptions`. There is no default value for the new `Env::IOPriority` parameter to `RandomAccessFileReader::Read()`. That means this PR goes through all callers (in some cases multiple layers up the call stack) to find a `ReadOptions` to provide the priority. There are TODOs for cases I believe it would be good to let user control the priority some day (e.g., file footer reads), and no TODO in cases I believe it doesn't matter (e.g., trace file reads). The API doc only lists the missing cases where a file read associated with a provided `ReadOptions` cannot be rate limited. For cases like file ingestion checksum calculation, there is no API to provide `ReadOptions` or `Env::IOPriority`, so I didn't count that as missing. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9424 Test Plan: - new unit tests - new benchmarks on ~50MB database with 1MB/s read rate limit and 100ms refill interval; verified with strace reads are chunked (at 0.1MB per chunk) and spaced roughly 100ms apart. 
- setup command: `./db_bench -benchmarks=fillrandom,compact -db=/tmp/testdb -target_file_size_base=1048576 -disable_auto_compactions=true -file_checksum=true` - benchmarks command: `strace -ttfe pread64 ./db_bench -benchmarks=verifychecksum,verifyfilechecksums -use_existing_db=true -db=/tmp/testdb -rate_limiter_bytes_per_sec=1048576 -rate_limit_bg_reads=1 -rate_limit_user_ops=true -file_checksum=true` - crash test using IO_USER priority on non-validation reads with https://github.com/facebook/rocksdb/issues/9567 reverted: `python3 tools/db_crashtest.py blackbox --max_key=1000000 --write_buffer_size=524288 --target_file_size_base=524288 --level_compaction_dynamic_level_bytes=true --duration=3600 --rate_limit_bg_reads=true --rate_limit_user_ops=true --rate_limiter_bytes_per_sec=10485760 --interval=10` Reviewed By: hx235 Differential Revision: D33747386 Pulled By: ajkr fbshipit-source-id: a2d985e97912fba8c54763798e04f006ccc56e0c |
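
The chunk size reported in the test plan above follows from the rate limit and the refill interval. A minimal sketch of that arithmetic, assuming a limiter that grants a fixed byte budget per refill interval; the helper names `BytesPerRefill` and `RefillsNeeded` are hypothetical and are not part of the RocksDB API:

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical helper (not a RocksDB function): byte budget granted per
// refill interval, computed as rate (bytes/s) * interval (us) / 1e6,
// rounded down.
constexpr int64_t BytesPerRefill(int64_t bytes_per_sec,
                                 int64_t refill_period_us) {
  return bytes_per_sec * refill_period_us / 1000000;
}

// Hypothetical helper: number of refill intervals needed to read
// total_bytes when each interval grants bytes_per_refill (rounded up).
constexpr int64_t RefillsNeeded(int64_t total_bytes,
                                int64_t bytes_per_refill) {
  return (total_bytes + bytes_per_refill - 1) / bytes_per_refill;
}
```

With `-rate_limiter_bytes_per_sec=1048576` and a 100ms refill interval, `BytesPerRefill(1048576, 100000)` gives 104857 bytes, which matches the roughly 0.1MB chunks spaced about 100ms apart seen in strace. Under the same assumption, reading a database of exactly 50MB (52428800 bytes) would take 501 intervals, or about 50 seconds.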

||

|

|

9745c68eb1 |

Remove deprecated option new_table_reader_for_compaction_inputs (#9443)

Summary: The RocksDB option `new_table_reader_for_compaction_inputs` has no effect on compaction or on the behavior of the RocksDB library. Therefore, we are removing it in the upcoming 7.0 release. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9443 Test Plan: CircleCI Reviewed By: ajkr Differential Revision: D33788508 Pulled By: akankshamahajan15 fbshipit-source-id: 324ca6f12bfd019e9bd5e1b0cdac39be5c3cec7d |

||

|

|

036bbab6f7 |

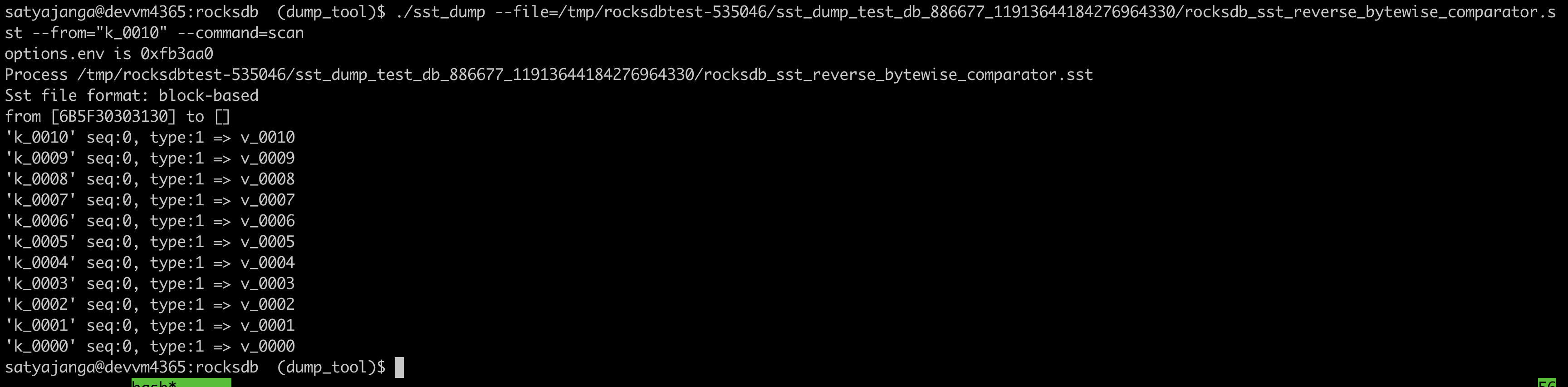

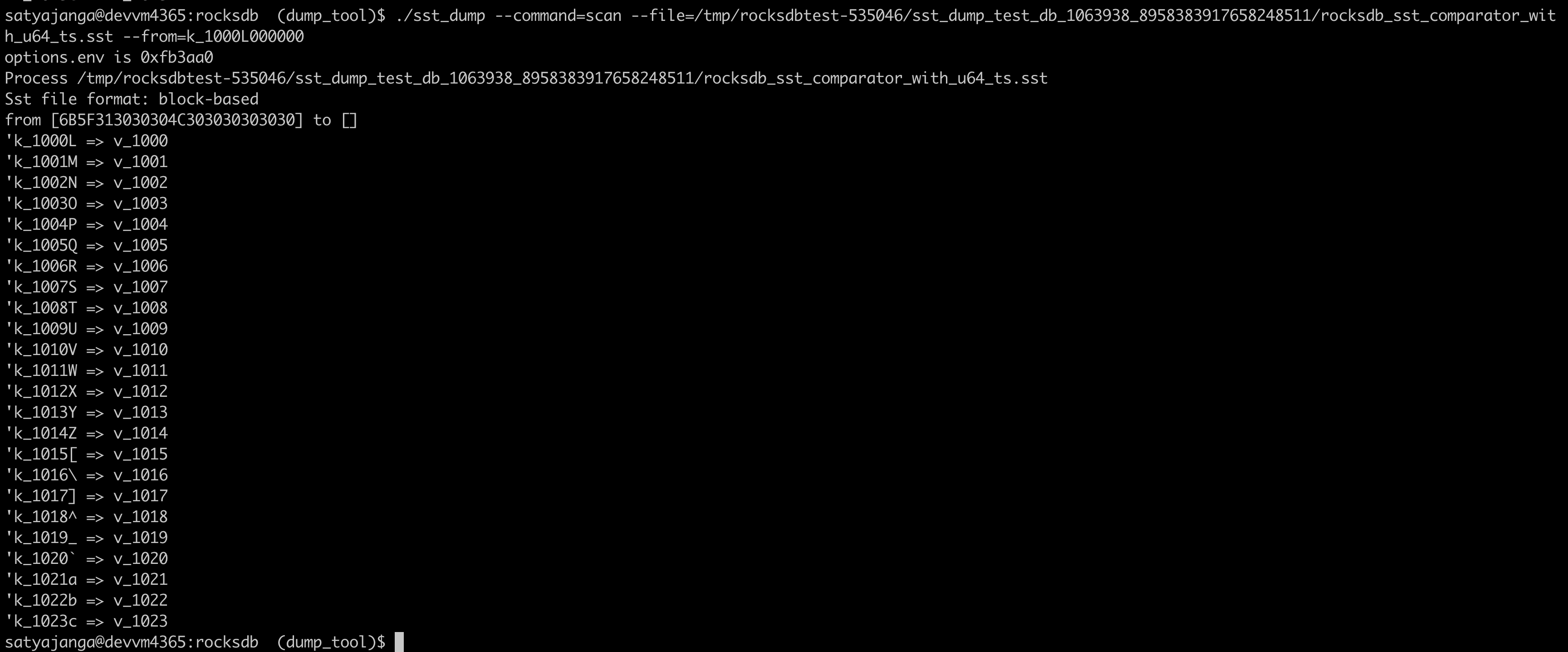

Use the comparator from the sst file table properties in sst_dump_tool (#9491)

Summary: We introduced a new Comparator for timestamps in user keys. By default, sst_dump_tool uses BytewiseComparator to read SST files. This change allows us to read comparator_name from the table properties in the metadata block and use that comparator to read the file. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9491 Test Plan: added unittests for new functionality. make check Reviewed By: riversand963 Differential Revision: D33993614 Pulled By: satyajanga fbshipit-source-id: 4b5cf938e6d2cb3931d763bef5baccc900b8c536 |
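
The idea of choosing a comparator from a name stored with the file can be sketched as a simple lookup. This is an illustration under stated assumptions, not the sst_dump_tool implementation: `KeyCompare`, `BytewiseCompare`, `ReverseBytewiseCompare` and `ComparatorForName` are hypothetical names, and treating an empty name as bytewise and an unknown name as "not found" is an assumption made for this example. The two name strings are the ones RocksDB's built-in bytewise and reverse bytewise comparators report.

```cpp
#include <cassert>
#include <string>

// Hypothetical comparator signature for this sketch: returns <0, 0, or >0.
using KeyCompare = int (*)(const std::string&, const std::string&);

// Lexicographic comparison of the raw key bytes.
inline int BytewiseCompare(const std::string& a, const std::string& b) {
  const int r = a.compare(b);
  return (r > 0) - (r < 0);
}

// Same ordering, reversed.
inline int ReverseBytewiseCompare(const std::string& a,
                                  const std::string& b) {
  return -BytewiseCompare(a, b);
}

// Picks a comparator from the name recorded in the table properties.
// Assumption for this sketch: an empty name means the bytewise default,
// and an unknown name returns nullptr so the caller can report an error.
inline KeyCompare ComparatorForName(const std::string& name) {
  if (name.empty() || name == "leveldb.BytewiseComparator") {
    return &BytewiseCompare;
  }
  if (name == "rocksdb.ReverseBytewiseComparator") {
    return &ReverseBytewiseCompare;
  }
  return nullptr;
}
```

Returning `nullptr` for an unrecognized name keeps the choice explicit: a reader that silently fell back to bytewise ordering could misinterpret a file written with a different ordering.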