mirror of

https://github.com/facebook/rocksdb.git

synced 2024-12-04 02:02:41 +00:00

360 commits

| Author | SHA1 | Message | Date | |

|---|---|---|---|---|

|

|

177b2fa341 |

Set the value for --version, add --build_info (#10275)

Summary:

./db_bench --version

db_bench version 7.5.0

./db_bench --build_info

(RocksDB) 7.5.0

rocksdb_build_date: 2022-06-29 09:58:04

rocksdb_build_git_sha:

|

||

|

|

57a0e2f304 |

Clock cache (#10273)

Summary: This is the initial step in the development of a lock-free clock cache. This PR includes the base hash table design (which we mostly ported over from FastLRUCache) and the clock eviction algorithm. Importantly, it's still _not_ lock-free---all operations use a shard lock. Besides the locking, there are other features left as future work: - Remove keys from the handles. Instead, use 128-bit bijective hashes of them for handle comparisons, probing (we need two 32-bit hashes of the key for double hashing) and sharding (we need one 6-bit hash). - Remove the clock_usage_ field, which is updated on every lookup. Even if it were atomically updated, it could cause memory invalidations across cores. - Middle insertions into the clock list. - A test that exercises the clock eviction policy. - Update the Java API of ClockCache and Java calls to C++. Along the way, we improved the code and comments quality of FastLRUCache. These changes are relatively minor. Pull Request resolved: https://github.com/facebook/rocksdb/pull/10273 Test Plan: ``make -j24 check`` Reviewed By: pdillinger Differential Revision: D37522461 Pulled By: guidotag fbshipit-source-id: 3d70b737dbb70dcf662f00cef8c609750f083943 |

||

|

|

2352e2dfda |

Add the blob cache to the stress tests and the benchmarking tool (#10202)

Summary: In order to facilitate correctness and performance testing, we would like to add the new blob cache to our stress test tool `db_stress` and our continuously running crash test script `db_crashtest.py`, as well as our synthetic benchmarking tool `db_bench` and the BlobDB performance testing script `run_blob_bench.sh`. As part of this task, we would also like to utilize these benchmarking tools to get some initial performance numbers about the effectiveness of caching blobs. This PR is a part of https://github.com/facebook/rocksdb/issues/10156 Pull Request resolved: https://github.com/facebook/rocksdb/pull/10202 Reviewed By: ltamasi Differential Revision: D37325739 Pulled By: gangliao fbshipit-source-id: deb65d0d414502270dd4c324d987fd5469869fa8 |

||

|

|

a5d773e077 |

Add rate-limiting support to batched MultiGet() (#10159)

Summary: **Context/Summary:** https://github.com/facebook/rocksdb/pull/9424 added rate-limiting support for user reads, which does not include batched `MultiGet()`s that call `RandomAccessFileReader::MultiRead()`. The reason is that it's harder (compared with RandomAccessFileReader::Read()) to implement the ideal rate-limiting where we first call `RateLimiter::RequestToken()` for allowed bytes to multi-read and then consume those bytes by satisfying as many requests in `MultiRead()` as possible. For example, it can be tricky to decide whether we want partially fulfilled requests within one `MultiRead()` or not. However, due to a recent urgent user request, we decide to pursue an elementary (but a conditionally ineffective) solution where we accumulate enough rate limiter requests toward the total bytes needed by one `MultiRead()` before doing that `MultiRead()`. This is not ideal when the total bytes are huge as we will actually consume a huge bandwidth from rate-limiter causing a burst on disk. This is not what we ultimately want with rate limiter. Therefore a follow-up work is noted through TODO comments. Pull Request resolved: https://github.com/facebook/rocksdb/pull/10159 Test Plan: - Modified existing unit test `DBRateLimiterOnReadTest/DBRateLimiterOnReadTest.NewMultiGet` - Traced the underlying system calls `io_uring_enter` and verified they are 10 seconds apart from each other correctly under the setting of `strace -ftt -e trace=io_uring_enter ./db_bench -benchmarks=multireadrandom -db=/dev/shm/testdb2 -readonly -num=50 -threads=1 -multiread_batched=1 -batch_size=100 -duration=10 -rate_limiter_bytes_per_sec=200 -rate_limiter_refill_period_us=1000000 -rate_limit_bg_reads=1 -disable_auto_compactions=1 -rate_limit_user_ops=1` where each `MultiRead()` read about 2000 bytes (inspected by debugger) and the rate limiter grants 200 bytes per seconds. - Stress test: - Verified `./db_stress (-test_cf_consistency=1/test_batches_snapshots=1) -use_multiget=1 -cache_size=1048576 -rate_limiter_bytes_per_sec=10241024 -rate_limit_bg_reads=1 -rate_limit_user_ops=1` work Reviewed By: ajkr, anand1976 Differential Revision: D37135172 Pulled By: hx235 fbshipit-source-id: 73b8e8f14761e5d4b77235dfe5d41f4eea968bcd |

||

|

|

126c223714 |

Remove deprecated block-based filter (#10184)

Summary: In https://github.com/facebook/rocksdb/issues/9535, release 7.0, we hid the old block-based filter from being created using the public API, because of its inefficiency. Although we normally maintain read compatibility on old DBs forever, filters are not required for reading a DB, only for optimizing read performance. Thus, it should be acceptable to remove this code and the substantial maintenance burden it carries as useful features are developed and validated (such as user timestamp). This change completely removes the code for reading and writing the old block-based filters, net removing about 1370 lines of code no longer needed. Options removed from testing / benchmarking tools. The prior existence is only evident in a couple of places: * `CacheEntryRole::kDeprecatedFilterBlock` - We can update this public API enum in a major release to minimize source code incompatibilities. * A warning is logged when an old table file is opened that used the old block-based filter. This is provided as a courtesy, and would be a pain to unit test, so manual testing should suffice. Unfortunately, sst_dump does not tell you whether a file uses block-based filter, and the structure of the code makes it very difficult to fix. * To detect that case, `kObsoleteFilterBlockPrefix` (renamed from `kFilterBlockPrefix`) for metaindex is maintained (for now). Other notes: * In some cases where numbers are associated with filter configurations, we have had to update the assigned numbers so that they all correspond to something that exists. * Fixed potential stat counting bug by assuming `filter_checked = false` for cases like `filter == nullptr` rather than assuming `filter_checked = true` * Removed obsolete `block_offset` and `prefix_extractor` parameters from several functions. * Removed some unnecessary checks `if (!table_prefix_extractor() && !prefix_extractor)` because the caller guarantees the prefix extractor exists and is compatible Pull Request resolved: https://github.com/facebook/rocksdb/pull/10184 Test Plan: tests updated, manually test new warning in LOG using base version to generate a DB Reviewed By: riversand963 Differential Revision: D37212647 Pulled By: pdillinger fbshipit-source-id: 06ee020d8de3b81260ffc36ad0c1202cbf463a80 |

||

|

|

94329ae4ec |

Use only ASCII in source files (#10164)

Summary: Fix existing usage of non-ASCII and add a check to prevent future use. Added `-n` option to greps to provide line numbers. Alternative to https://github.com/facebook/rocksdb/issues/10147 Pull Request resolved: https://github.com/facebook/rocksdb/pull/10164 Test Plan: used new checker to find & fix cases, manually check db_bench output is preserved Reviewed By: akankshamahajan15 Differential Revision: D37148792 Pulled By: pdillinger fbshipit-source-id: 68c8b57e7ab829369540d532590bf756938855c7 |

||

|

|

9882652b0e |

Verify write batch checksum before WAL (#10114)

Summary: Context: WriteBatch can have key-value checksums when it was created `with protection_bytes_per_key > 0`. This PR added checksum verification for write batches before they are written to WAL. Pull Request resolved: https://github.com/facebook/rocksdb/pull/10114 Test Plan: - Added new unit tests to db_kv_checksum_test.cc: `make check -j32` - benchmark on performance regression: `./db_bench --benchmarks=fillrandom[-X20] -db=/dev/shm/test_rocksdb -write_batch_protection_bytes_per_key=8` - Pre-PR: ` fillrandom [AVG 20 runs] : 198875 (± 3006) ops/sec; 22.0 (± 0.3) MB/sec ` - Post-PR: ` fillrandom [AVG 20 runs] : 196487 (± 2279) ops/sec; 21.7 (± 0.3) MB/sec ` Mean regressed about 1% (198875 -> 196487 ops/sec). Reviewed By: ajkr Differential Revision: D36917464 Pulled By: cbi42 fbshipit-source-id: 29beb74edf65f04b1a890b4f650d873dc7ed790d |

||

|

|

ce419c0f10 |

Allow db_bench and db_stress to set allow_data_in_errors (#10171)

Summary: There is `Options::allow_data_in_errors` that controls whether RocksDB is allowed to log data, e.g. key, value, etc in LOG files. It is false by default. However, in db_bench and db_stress, it is often ok to log data because there is no concern about privacy. This PR allows db_stress and db_bench to set this option on the command line, while it remains false by default. Furthermore, make crash/recovery test driven by db_crashtest.py to opt-in. Pull Request resolved: https://github.com/facebook/rocksdb/pull/10171 Test Plan: Stress test and db_bench Reviewed By: hx235 Differential Revision: D37163787 Pulled By: riversand963 fbshipit-source-id: 0242f24d292ba15b6faf8ff903963b85d3e011f8 |

||

|

|

d665afdbf3 |

Account memory of FileMetaData in global memory limit (#9924)

Summary: **Context/Summary:** As revealed by heap profiling, allocation of `FileMetaData` for [newly created file added to a Version](https://github.com/facebook/rocksdb/pull/9924/files#diff-a6aa385940793f95a2c5b39cc670bd440c4547fa54fd44622f756382d5e47e43R774) can consume significant heap memory. This PR is to account that toward our global memory limit based on block cache capacity. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9924 Test Plan: - Previous `make check` verified there are only 2 places where the memory of the allocated `FileMetaData` can be released - New unit test `TEST_P(ChargeFileMetadataTestWithParam, Basic)` - db bench (CPU cost of `charge_file_metadata` in write and compact) - **write micros/op: -0.24%** : `TEST_TMPDIR=/dev/shm/testdb ./db_bench -benchmarks=fillseq -db=$TEST_TMPDIR -charge_file_metadata=1 (remove this option for pre-PR) -disable_auto_compactions=1 -write_buffer_size=100000 -num=4000000 | egrep 'fillseq'` - **compact micros/op -0.87%** : `TEST_TMPDIR=/dev/shm/testdb ./db_bench -benchmarks=fillseq -db=$TEST_TMPDIR -charge_file_metadata=1 -disable_auto_compactions=1 -write_buffer_size=100000 -num=4000000 -numdistinct=1000 && ./db_bench -benchmarks=compact -db=$TEST_TMPDIR -use_existing_db=1 -charge_file_metadata=1 -disable_auto_compactions=1 | egrep 'compact'` table 1 - write #-run | (pre-PR) avg micros/op | std micros/op | (post-PR) micros/op | std micros/op | change (%) -- | -- | -- | -- | -- | -- 10 | 3.9711 | 0.264408 | 3.9914 | 0.254563 | 0.5111933721 20 | 3.83905 | 0.0664488 | 3.8251 | 0.0695456 | -0.3633711465 40 | 3.86625 | 0.136669 | 3.8867 | 0.143765 | 0.5289363078 80 | 3.87828 | 0.119007 | 3.86791 | 0.115674 | **-0.2673865734** 160 | 3.87677 | 0.162231 | 3.86739 | 0.16663 | **-0.2419539978** table 2 - compact #-run | (pre-PR) avg micros/op | std micros/op | (post-PR) micros/op | std micros/op | change (%) -- | -- | -- | -- | -- | -- 10 | 2,399,650.00 | 96,375.80 | 2,359,537.00 | 53,243.60 | -1.67 20 | 2,410,480.00 | 89,988.00 | 2,433,580.00 | 91,121.20 | 0.96 40 | 2.41E+06 | 121811 | 2.39E+06 | 131525 | **-0.96** 80 | 2.40E+06 | 134503 | 2.39E+06 | 108799 | **-0.78** - stress test: `python3 tools/db_crashtest.py blackbox --charge_file_metadata=1 --cache_size=1` killed as normal Reviewed By: ajkr Differential Revision: D36055583 Pulled By: hx235 fbshipit-source-id: b60eab94707103cb1322cf815f05810ef0232625 |

||

|

|

f105e1a501 |

Make the per-shard hash table fixed-size. (#10154)

Summary: We make the size of the per-shard hash table fixed. The base level of the hash table is now preallocated with the required capacity. The user must provide an estimate of the size of the values. Notice that even though the base level becomes fixed, the chains are still dynamic. Overall, the shard capacity mechanisms haven't changed, so we don't need to test this. Pull Request resolved: https://github.com/facebook/rocksdb/pull/10154 Test Plan: `make -j24 check` Reviewed By: pdillinger Differential Revision: D37124451 Pulled By: guidotag fbshipit-source-id: cba6ac76052fe0ec60b8ff4211b3de7650e80d0c |

||

|

|

cf85607795 |

Add support for FastLRUCache in db_bench. (#10096)

Summary: db_bench can now run with FastLRUCache. Pull Request resolved: https://github.com/facebook/rocksdb/pull/10096 Test Plan: - Temporarily add an ``assert(false)`` in the execution path that sets up the FastLRUCache. Run ``make -j24 db_bench``. Then test the appropriate code is used by running ``./db_bench -cache_type=fast_lru_cache`` and checking that the assert is called. Repeat for LRUCache. - Verify that FastLRUCache (currently a clone of LRUCache) produces similar benchmark data than LRUCache, by comparing the outputs of ``./db_bench -benchmarks=fillseq,fillrandom,readseq,readrandom -cache_type=fast_lru_cache`` and ``./db_bench -benchmarks=fillseq,fillrandom,readseq,readrandom -cache_type=lru_cache``. Reviewed By: gitbw95 Differential Revision: D36898774 Pulled By: guidotag fbshipit-source-id: f9f6b6f6da124f88b21b3c8dee742fbb04eff773 |

||

|

|

e6432dfd4c |

Make it possible to enable blob files starting from a certain LSM tree level (#10077)

Summary: Currently, if blob files are enabled (i.e. `enable_blob_files` is true), large values are extracted both during flush/recovery (when SST files are written into level 0 of the LSM tree) and during compaction into any LSM tree level. For certain use cases that have a mix of short-lived and long-lived values, it might make sense to support extracting large values only during compactions whose output level is greater than or equal to a specified LSM tree level (e.g. compactions into L1/L2/... or above). This could reduce the space amplification caused by large values that are turned into garbage shortly after being written at the price of some write amplification incurred by long-lived values whose extraction to blob files is delayed. In order to achieve this, we would like to do the following: - Add a new configuration option `blob_file_starting_level` (default: 0) to `AdvancedColumnFamilyOptions` (and `MutableCFOptions` and extend the related logic) - Instantiate `BlobFileBuilder` in `BuildTable` (used during flush and recovery, where the LSM tree level is L0) and `CompactionJob` iff `enable_blob_files` is set and the LSM tree level is `>= blob_file_starting_level` - Add unit tests for the new functionality, and add the new option to our stress tests (`db_stress` and `db_crashtest.py` ) - Add the new option to our benchmarking tool `db_bench` and the BlobDB benchmark script `run_blob_bench.sh` - Add the new option to the `ldb` tool (see https://github.com/facebook/rocksdb/wiki/Administration-and-Data-Access-Tool) - Ideally extend the C and Java bindings with the new option - Update the BlobDB wiki to document the new option. Pull Request resolved: https://github.com/facebook/rocksdb/pull/10077 Reviewed By: ltamasi Differential Revision: D36884156 Pulled By: gangliao fbshipit-source-id: 942bab025f04633edca8564ed64791cb5e31627d |

||

|

|

8515bd50c9 |

Support read rate-limiting in SequentialFileReader (#9973)

Summary: Added rate limiter and read rate-limiting support to SequentialFileReader. I've updated call sites to SequentialFileReader::Read with appropriate IO priority (or left a TODO and specified IO_TOTAL for now). The PR is separated into four commits: the first one added the rate-limiting support, but with some fixes in the unit test since the number of request bytes from rate limiter in SequentialFileReader are not accurate (there is overcharge at EOF). The second commit fixed this by allowing SequentialFileReader to check file size and determine how many bytes are left in the file to read. The third commit added benchmark related code. The fourth commit moved the logic of using file size to avoid overcharging the rate limiter into backup engine (the main user of SequentialFileReader). Pull Request resolved: https://github.com/facebook/rocksdb/pull/9973 Test Plan: - `make check`, backup_engine_test covers usage of SequentialFileReader with rate limiter. - Run db_bench to check if rate limiting is throttling as expected: Verified that reads and writes are together throttled at 2MB/s, and at 0.2MB chunks that are 100ms apart. - Set up: `./db_bench --benchmarks=fillrandom -db=/dev/shm/test_rocksdb` - Benchmark: ``` strace -ttfe read,write ./db_bench --benchmarks=backup -db=/dev/shm/test_rocksdb --backup_rate_limit=2097152 --use_existing_db strace -ttfe read,write ./db_bench --benchmarks=restore -db=/dev/shm/test_rocksdb --restore_rate_limit=2097152 --use_existing_db ``` - db bench on backup and restore to ensure no performance regression. - backup (avg over 50 runs): pre-change: 1.90443e+06 micros/op; post-change: 1.8993e+06 micros/op (improve by 0.2%) - restore (avg over 50 runs): pre-change: 1.79105e+06 micros/op; post-change: 1.78192e+06 micros/op (improve by 0.5%) ``` # Set up ./db_bench --benchmarks=fillrandom -db=/tmp/test_rocksdb -num=10000000 # benchmark TEST_TMPDIR=/tmp/test_rocksdb NUM_RUN=50 for ((j=0;j<$NUM_RUN;j++)) do ./db_bench -db=$TEST_TMPDIR -num=10000000 -benchmarks=backup -use_existing_db | egrep 'backup' # Restore #./db_bench -db=$TEST_TMPDIR -num=10000000 -benchmarks=restore -use_existing_db done > rate_limit.txt && awk -v NUM_RUN=$NUM_RUN '{sum+=$3;sum_sqrt+=$3^2}END{print sum/NUM_RUN, sqrt(sum_sqrt/NUM_RUN-(sum/NUM_RUN)^2)}' rate_limit.txt >> rate_limit_2.txt ``` Reviewed By: hx235 Differential Revision: D36327418 Pulled By: cbi42 fbshipit-source-id: e75d4307cff815945482df5ba630c1e88d064691 |

||

|

|

cc23b46da1 |

Support using ZDICT_finalizeDictionary to generate zstd dictionary (#9857)

Summary:

An untrained dictionary is currently simply the concatenation of several samples. The ZSTD API, ZDICT_finalizeDictionary(), can improve such a dictionary's effectiveness at low cost. This PR changes how dictionary is created by calling the ZSTD ZDICT_finalizeDictionary() API instead of creating raw content dictionary (when max_dict_buffer_bytes > 0), and pass in all buffered uncompressed data blocks as samples.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/9857

Test Plan:

#### db_bench test for cpu/memory of compression+decompression and space saving on synthetic data:

Set up: change the parameter [here](

|

||

|

|

280b9f371a |

Fix auto_prefix_mode performance with partitioned filters (#10012)

Summary: Essentially refactored the RangeMayExist implementation in FullFilterBlockReader to FilterBlockReaderCommon so that it applies to partitioned filters as well. (The function is not called for the block-based filter case.) RangeMayExist is essentially a series of checks around a possible PrefixMayExist, and I'm confident those checks should be the same for partitioned as for full filters. (I think it's likely that bugs remain in those checks, but this change is overall a simplifying one.) Added auto_prefix_mode support to db_bench Other small fixes as well Fixes https://github.com/facebook/rocksdb/issues/10003 Pull Request resolved: https://github.com/facebook/rocksdb/pull/10012 Test Plan: Expanded unit test that uses statistics to check for filter optimization, fails without the production code changes here Performance: populate two DBs with ``` TEST_TMPDIR=/dev/shm/rocksdb_nonpartitioned ./db_bench -benchmarks=fillrandom -num=10000000 -disable_wal=1 -write_buffer_size=30000000 -bloom_bits=16 -compaction_style=2 -fifo_compaction_max_table_files_size_mb=10000 -fifo_compaction_allow_compaction=0 -prefix_size=8 TEST_TMPDIR=/dev/shm/rocksdb_partitioned ./db_bench -benchmarks=fillrandom -num=10000000 -disable_wal=1 -write_buffer_size=30000000 -bloom_bits=16 -compaction_style=2 -fifo_compaction_max_table_files_size_mb=10000 -fifo_compaction_allow_compaction=0 -prefix_size=8 -partition_index_and_filters ``` Observe no measurable change in non-partitioned performance ``` TEST_TMPDIR=/dev/shm/rocksdb_nonpartitioned ./db_bench -benchmarks=seekrandom[-X1000] -num=10000000 -readonly -bloom_bits=16 -compaction_style=2 -fifo_compaction_max_table_files_size_mb=10000 -fifo_compaction_allow_compaction=0 -prefix_size=8 -auto_prefix_mode -cache_index_and_filter_blocks=1 -cache_size=1000000000 -duration 20 ``` Before: seekrandom [AVG 15 runs] : 11798 (± 331) ops/sec After: seekrandom [AVG 15 runs] : 11724 (± 315) ops/sec Observe big improvement with partitioned (also supported by bloom use statistics) ``` TEST_TMPDIR=/dev/shm/rocksdb_partitioned ./db_bench -benchmarks=seekrandom[-X1000] -num=10000000 -readonly -bloom_bits=16 -compaction_style=2 -fifo_compaction_max_table_files_size_mb=10000 -fifo_compaction_allow_compaction=0 -prefix_size=8 -partition_index_and_filters -auto_prefix_mode -cache_index_and_filter_blocks=1 -cache_size=1000000000 -duration 20 ``` Before: seekrandom [AVG 12 runs] : 2942 (± 57) ops/sec After: seekrandom [AVG 12 runs] : 7489 (± 184) ops/sec Reviewed By: siying Differential Revision: D36469796 Pulled By: pdillinger fbshipit-source-id: bcf1e2a68d347b32adb2b27384f945434e7a266d |

||

|

|

3573558ec5 |

Rewrite memory-charging feature's option API (#9926)

Summary: **Context:** Previous PR https://github.com/facebook/rocksdb/pull/9748, https://github.com/facebook/rocksdb/pull/9073, https://github.com/facebook/rocksdb/pull/8428 added separate flag for each charged memory area. Such API design is not scalable as we charge more and more memory areas. Also, we foresee an opportunity to consolidate this feature with other cache usage related features such as `cache_index_and_filter_blocks` using `CacheEntryRole`. Therefore we decided to consolidate all these flags with `CacheUsageOptions cache_usage_options` and this PR serves as the first step by consolidating memory-charging related flags. **Summary:** - Replaced old API reference with new ones, including making `kCompressionDictionaryBuildingBuffer` opt-out and added a unit test for that - Added missing db bench/stress test for some memory charging features - Renamed related test suite to indicate they are under the same theme of memory charging - Refactored a commonly used mocked cache component in memory charging related tests to reduce code duplication - Replaced the phrases "memory tracking" / "cache reservation" (other than CacheReservationManager-related ones) with "memory charging" for standard description of this feature. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9926 Test Plan: - New unit test for opt-out `kCompressionDictionaryBuildingBuffer` `TEST_F(ChargeCompressionDictionaryBuildingBufferTest, Basic)` - New unit test for option validation/sanitization `TEST_F(CacheUsageOptionsOverridesTest, SanitizeAndValidateOptions)` - CI - db bench (in case querying new options introduces regression) **+0.5% micros/op**: `TEST_TMPDIR=/dev/shm/testdb ./db_bench -benchmarks=fillseq -db=$TEST_TMPDIR -charge_compression_dictionary_building_buffer=1(remove this for comparison) -compression_max_dict_bytes=10000 -disable_auto_compactions=1 -write_buffer_size=100000 -num=4000000 | egrep 'fillseq'` #-run | (pre-PR) avg micros/op | std micros/op | (post-PR) micros/op | std micros/op | change (%) -- | -- | -- | -- | -- | -- 10 | 3.9711 | 0.264408 | 3.9914 | 0.254563 | 0.5111933721 20 | 3.83905 | 0.0664488 | 3.8251 | 0.0695456 | **-0.3633711465** 40 | 3.86625 | 0.136669 | 3.8867 | 0.143765 | **0.5289363078** - db_stress: `python3 tools/db_crashtest.py blackbox -charge_compression_dictionary_building_buffer=1 -charge_filter_construction=1 -charge_table_reader=1 -cache_size=1` killed as normal Reviewed By: ajkr Differential Revision: D36054712 Pulled By: hx235 fbshipit-source-id: d406e90f5e0c5ea4dbcb585a484ad9302d4302af |

||

|

|

736a7b5433 |

Remove own ToString() (#9955)

Summary: ToString() is created as some platform doesn't support std::to_string(). However, we've already used std::to_string() by mistake for 16 months (in db/db_info_dumper.cc). This commit just remove ToString(). Pull Request resolved: https://github.com/facebook/rocksdb/pull/9955 Test Plan: Watch CI tests Reviewed By: riversand963 Differential Revision: D36176799 fbshipit-source-id: bdb6dcd0e3a3ab96a1ac810f5d0188f684064471 |

||

|

|

49628c9a83 |

Use std::numeric_limits<> (#9954)

Summary: Right now we still don't fully use std::numeric_limits but use a macro, mainly for supporting VS 2013. Right now we only support VS 2017 and up so it is not a problem. The code comment claims that MinGW still needs it. We don't have a CI running MinGW so it's hard to validate. since we now require C++17, it's hard to imagine MinGW would still build RocksDB but doesn't support std::numeric_limits<>. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9954 Test Plan: See CI Runs. Reviewed By: riversand963 Differential Revision: D36173954 fbshipit-source-id: a35a73af17cdcae20e258cdef57fcf29a50b49e0 |

||

|

|

bf68d1c93d |

Print elapsed time and number of operations completed (#9886)

Summary: This is inspired by debugging a regression test that runs for ~0.05 seconds and the short running time makes it prone to variance. While db_bench ran for ~60 seconds, 59.95 seconds was spent opening 128 databases (and doing recovery). So it was harder to notice that the benchmark only ran for 0.05 seconds. Normally I add output to the end of the line to make life easier for existing tools that parse it but in this case the output near the end of the line has two optional parts and one of the optional parts adds an extra newline. This is for https://github.com/facebook/rocksdb/issues/9856 Pull Request resolved: https://github.com/facebook/rocksdb/pull/9886 Test Plan: ./db_bench --benchmarks=overwrite,readrandom --num=1000000 --threads=4 old output: DB path: [/tmp/rocksdbtest-2260/dbbench] overwrite : 14.108 micros/op 283338 ops/sec; 31.3 MB/s DB path: [/tmp/rocksdbtest-2260/dbbench] readrandom : 7.994 micros/op 496788 ops/sec; 55.0 MB/s (1000000 of 1000000 found) new output: DB path: [/tmp/rocksdbtest-2260/dbbench] overwrite : 14.117 micros/op 282862 ops/sec 14.141 seconds 4000000 operations; 31.3 MB/s DB path: [/tmp/rocksdbtest-2260/dbbench] readrandom : 8.649 micros/op 458475 ops/sec 8.725 seconds 4000000 operations; 49.8 MB/s (981548 of 1000000 found) Reviewed By: ajkr Differential Revision: D36102269 Pulled By: mdcallag fbshipit-source-id: 5cd8a9e11f5cbe2a46809571afd83335b6b0caa0 |

||

|

|

b6ec3328af |

Make --benchmarks=flush flush the default column family (#9887)

Summary: db_bench --benchmarks=flush wasn't flushing the default column family. This is for https://github.com/facebook/rocksdb/issues/9880 Pull Request resolved: https://github.com/facebook/rocksdb/pull/9887 Test Plan: Confirm that flush works (*.log is empty) when "flush" added to benchmark list Confirm that *.log is not empty otherwise. Repeat for all combinations for: uses column families, uses multiple databases ./db_bench --benchmarks=overwrite --num=10000 ls -lrt /tmp/rocksdbtest-2260/dbbench/*.log -rw-r--r-- 1 me users 1380286 Apr 21 10:47 /tmp/rocksdbtest-2260/dbbench/000004.log ./db_bench --benchmarks=overwrite,flush --num=10000 ls -lrt /tmp/rocksdbtest-2260/dbbench/*.log -rw-r--r-- 1 me users 0 Apr 21 10:48 /tmp/rocksdbtest-2260/dbbench/000008.log ./db_bench --benchmarks=overwrite --num=10000 --num_column_families=4 ls -lrt /tmp/rocksdbtest-2260/dbbench/*.log -rw-r--r-- 1 me users 1387823 Apr 21 10:49 /tmp/rocksdbtest-2260/dbbench/000004.log ./db_bench --benchmarks=overwrite,flush --num=10000 --num_column_families=4 ls -lrt /tmp/rocksdbtest-2260/dbbench/*.log -rw-r--r-- 1 me users 0 Apr 21 10:51 /tmp/rocksdbtest-2260/dbbench/000014.log ./db_bench --benchmarks=overwrite --num=10000 --num_multi_db=2 ls -lrt /tmp/rocksdbtest-2260/dbbench/[01]/*.log -rw-r--r-- 1 me users 1380838 Apr 21 10:55 /tmp/rocksdbtest-2260/dbbench/0/000004.log -rw-r--r-- 1 me users 1379734 Apr 21 10:55 /tmp/rocksdbtest-2260/dbbench/1/000004.log ./db_bench --benchmarks=overwrite,flush --num=10000 --num_multi_db=2 ls -lrt /tmp/rocksdbtest-2260/dbbench/[01]/*.log -rw-r--r-- 1 me users 0 Apr 21 10:57 /tmp/rocksdbtest-2260/dbbench/0/000013.log -rw-r--r-- 1 me users 0 Apr 21 10:57 /tmp/rocksdbtest-2260/dbbench/1/000013.log ./db_bench --benchmarks=overwrite --num=10000 --num_column_families=4 --num_multi_db=2 ls -lrt /tmp/rocksdbtest-2260/dbbench/[01]/*.log -rw-r--r-- 1 me users 1395108 Apr 21 10:52 /tmp/rocksdbtest-2260/dbbench/1/000004.log -rw-r--r-- 1 me users 1380411 Apr 21 10:52 /tmp/rocksdbtest-2260/dbbench/0/000004.log ./db_bench --benchmarks=overwrite,flush --num=10000 --num_column_families=4 --num_multi_db=2 ls -lrt /tmp/rocksdbtest-2260/dbbench/[01]/*.log -rw-r--r-- 1 me users 0 Apr 21 10:54 /tmp/rocksdbtest-2260/dbbench/0/000022.log -rw-r--r-- 1 me users 0 Apr 21 10:54 /tmp/rocksdbtest-2260/dbbench/1/000022.log Reviewed By: ajkr Differential Revision: D36026777 Pulled By: mdcallag fbshipit-source-id: d42d3d7efceea7b9a25bbbc0f04461d2b7301122 |

||

|

|

fb9a167a55 |

Add 95% confidence intervals to db_bench output (#9882)

Summary: Enhancing `db_bench` output with 95% statistical confidence intervals for better performance evaluation. The goal is to unambiguously separate random variance when running benchmark over multiple iterations. Output enhanced with confidence intervals exposed in brackets: ``` $ ./db_bench --benchmarks=fillseq[-X10] Running benchmark for 10 times fillseq : 4.961 micros/op 201578 ops/sec; 22.3 MB/s fillseq : 5.030 micros/op 198824 ops/sec; 22.0 MB/s fillseq [AVG 2 runs] : 200201 (± 2698) ops/sec; 22.1 (± 0.3) MB/sec fillseq : 4.963 micros/op 201471 ops/sec; 22.3 MB/s fillseq [AVG 3 runs] : 200624 (± 1765) ops/sec; 22.2 (± 0.2) MB/sec fillseq : 5.035 micros/op 198625 ops/sec; 22.0 MB/s fillseq [AVG 4 runs] : 200124 (± 1586) ops/sec; 22.1 (± 0.2) MB/sec fillseq : 4.979 micros/op 200861 ops/sec; 22.2 MB/s fillseq [AVG 5 runs] : 200272 (± 1262) ops/sec; 22.2 (± 0.1) MB/sec fillseq : 4.893 micros/op 204367 ops/sec; 22.6 MB/s fillseq [AVG 6 runs] : 200954 (± 1688) ops/sec; 22.2 (± 0.2) MB/sec fillseq : 4.914 micros/op 203502 ops/sec; 22.5 MB/s fillseq [AVG 7 runs] : 201318 (± 1595) ops/sec; 22.3 (± 0.2) MB/sec fillseq : 4.998 micros/op 200074 ops/sec; 22.1 MB/s fillseq [AVG 8 runs] : 201163 (± 1415) ops/sec; 22.3 (± 0.2) MB/sec fillseq : 4.946 micros/op 202188 ops/sec; 22.4 MB/s fillseq [AVG 9 runs] : 201277 (± 1267) ops/sec; 22.3 (± 0.1) MB/sec fillseq : 5.093 micros/op 196331 ops/sec; 21.7 MB/s fillseq [AVG 10 runs] : 200782 (± 1491) ops/sec; 22.2 (± 0.2) MB/sec fillseq [AVG 10 runs] : 200782 (± 1491) ops/sec; 22.2 (± 0.2) MB/sec fillseq [MEDIAN 10 runs] : 201166 ops/sec; 22.3 MB/s ``` For more explicit interval representation, use `--confidence_interval_only` flag: ``` $ ./db_bench --benchmarks=fillseq[-X10] --confidence_interval_only Running benchmark for 10 times fillseq : 4.935 micros/op 202648 ops/sec; 22.4 MB/s fillseq : 5.078 micros/op 196943 ops/sec; 21.8 MB/s fillseq [CI95 2 runs] : (194205, 205385) ops/sec; (21.5, 22.7) MB/sec fillseq : 5.159 micros/op 193816 ops/sec; 21.4 MB/s fillseq [CI95 3 runs] : (192735, 202869) ops/sec; (21.3, 22.4) MB/sec fillseq : 4.947 micros/op 202158 ops/sec; 22.4 MB/s fillseq [CI95 4 runs] : (194721, 203061) ops/sec; (21.5, 22.5) MB/sec fillseq : 4.908 micros/op 203756 ops/sec; 22.5 MB/s fillseq [CI95 5 runs] : (196113, 203615) ops/sec; (21.7, 22.5) MB/sec fillseq : 5.063 micros/op 197528 ops/sec; 21.9 MB/s fillseq [CI95 6 runs] : (196319, 202631) ops/sec; (21.7, 22.4) MB/sec fillseq : 5.214 micros/op 191799 ops/sec; 21.2 MB/s fillseq [CI95 7 runs] : (194953, 201803) ops/sec; (21.6, 22.3) MB/sec fillseq : 5.260 micros/op 190095 ops/sec; 21.0 MB/s fillseq [CI95 8 runs] : (193749, 200937) ops/sec; (21.4, 22.2) MB/sec fillseq : 5.076 micros/op 196992 ops/sec; 21.8 MB/s fillseq [CI95 9 runs] : (194134, 200474) ops/sec; (21.5, 22.2) MB/sec fillseq : 5.388 micros/op 185603 ops/sec; 20.5 MB/s fillseq [CI95 10 runs] : (192487, 199781) ops/sec; (21.3, 22.1) MB/sec fillseq [AVG 10 runs] : 196134 (± 3647) ops/sec; 21.7 (± 0.4) MB/sec fillseq [MEDIAN 10 runs] : 196968 ops/sec; 21.8 MB/sec ``` Pull Request resolved: https://github.com/facebook/rocksdb/pull/9882 Reviewed By: pdillinger Differential Revision: D35796148 Pulled By: vanekjar fbshipit-source-id: 8313712d16728ff982b8aff28195ee56622385b8 |

||

|

|

690f1edf37 |

Avoid overwriting OPTIONS file settings in db_bench (#9862)

Summary: `InitializeOptionsGeneral()` was overwriting many options that were already configured by OPTIONS file, potentially with the flag default values. This PR changes that function to only overwrite options in limited scenarios, as described at the top of its definition. Block cache is still a violation. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9862 Test Plan: ran under various scenarios (multi-DB, single DB, OPTIONS file, flags) and verified options are set as expected Reviewed By: jay-zhuang Differential Revision: D35736960 Pulled By: ajkr fbshipit-source-id: 75b77740af37e6f5741618f8a8f5685df2417d03 |

||

|

|

f241d082b6 |

Prevent double caching in the compressed secondary cache (#9747)

Summary: ### **Summary:** When both LRU Cache and CompressedSecondaryCache are configured together, there possibly are some data blocks double cached. **Changes include:** 1. Update IS_PROMOTED to IS_IN_SECONDARY_CACHE to prevent confusions. 2. This PR updates SecondaryCacheResultHandle and use IsErasedFromSecondaryCache to determine whether the handle is erased in the secondary cache. Then, the caller can determine whether to SetIsInSecondaryCache(). 3. Rename LRUSecondaryCache to CompressedSecondaryCache. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9747 Test Plan: **Test Scripts:** 1. Populate a DB. The on disk footprint is 482 MB. The data is set to be 50% compressible, so the total decompressed size is expected to be 964 MB. ./db_bench --benchmarks=fillrandom --num=10000000 -db=/db_bench_1 2. overwrite it to a stable state: ./db_bench --benchmarks=overwrite,stats --num=10000000 -use_existing_db -duration=10 --benchmark_write_rate_limit=2000000 -db=/db_bench_1 4. Run read tests with diffeernt cache setting: T1: ./db_bench --benchmarks=seekrandom,stats --threads=16 --num=10000000 -use_existing_db -duration=120 --benchmark_write_rate_limit=52000000 -use_direct_reads --cache_size=520000000 --statistics -db=/db_bench_1 T2: ./db_bench --benchmarks=seekrandom,stats --threads=16 --num=10000000 -use_existing_db -duration=120 --benchmark_write_rate_limit=52000000 -use_direct_reads --cache_size=320000000 -compressed_secondary_cache_size=400000000 --statistics -use_compressed_secondary_cache -db=/db_bench_1 T3: ./db_bench --benchmarks=seekrandom,stats --threads=16 --num=10000000 -use_existing_db -duration=120 --benchmark_write_rate_limit=52000000 -use_direct_reads --cache_size=520000000 -compressed_secondary_cache_size=400000000 --statistics -use_compressed_secondary_cache -db=/db_bench_1 T4: ./db_bench --benchmarks=seekrandom,stats --threads=16 --num=10000000 -use_existing_db -duration=120 --benchmark_write_rate_limit=52000000 -use_direct_reads --cache_size=20000000 -compressed_secondary_cache_size=500000000 --statistics -use_compressed_secondary_cache -db=/db_bench_1 **Before this PR** | Cache Size | Compressed Secondary Cache Size | Cache Hit Rate | |------------|-------------------------------------|----------------| |520 MB | 0 MB | 85.5% | |320 MB | 400 MB | 96.2% | |520 MB | 400 MB | 98.3% | |20 MB | 500 MB | 98.8% | **Before this PR** | Cache Size | Compressed Secondary Cache Size | Cache Hit Rate | |------------|-------------------------------------|----------------| |520 MB | 0 MB | 85.5% | |320 MB | 400 MB | 99.9% | |520 MB | 400 MB | 99.9% | |20 MB | 500 MB | 99.2% | Reviewed By: anand1976 Differential Revision: D35117499 Pulled By: gitbw95 fbshipit-source-id: ea2657749fc13efebe91a8a1b56bc61d6a224a12 |

||

|

|

25e31d1a94 |

tools/db_bench_tool.cc use uint64_t instead of size_t (#9800)

Summary: to fix compilation for 32bit Pull Request resolved: https://github.com/facebook/rocksdb/pull/9800 Reviewed By: riversand963 Differential Revision: D35404447 fbshipit-source-id: 6a1185bb38f3a718357aa120e3b26a1ea77f023d |

||

|

|

49623f9c8e |

Account memory of big memory users in BlockBasedTable in global memory limit (#9748)

Summary: **Context:** Through heap profiling, we discovered that `BlockBasedTableReader` objects can accumulate and lead to high memory usage (e.g, `max_open_file = -1`). These memories are currently not saved, not tracked, not constrained and not cache evict-able. As a first step to improve this, similar to https://github.com/facebook/rocksdb/pull/8428, this PR is to track an estimate of `BlockBasedTableReader` object's memory in block cache and fail future creation if the memory usage exceeds the available space of cache at the time of creation. **Summary:** - Approximate big memory users (`BlockBasedTable::Rep` and `TableProperties` )' memory usage in addition to the existing estimated ones (filter block/index block/un-compression dictionary) - Charge all of these memory usages to block cache on `BlockBasedTable::Open()` and release them on `~BlockBasedTable()` as there is no memory usage fluctuation of concern in between - Refactor on CacheReservationManager (and its call-sites) to add concurrent support for BlockBasedTable used in this PR. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9748 Test Plan: - New unit tests - db bench: `OpenDb` : **-0.52% in ms** - Setup `./db_bench -benchmarks=fillseq -db=/dev/shm/testdb -disable_auto_compactions=1 -write_buffer_size=1048576` - Repeated run with pre-change w/o feature and post-change with feature, benchmark `OpenDb`: `./db_bench -benchmarks=readrandom -use_existing_db=1 -db=/dev/shm/testdb -reserve_table_reader_memory=true (remove this when running w/o feature) -file_opening_threads=3 -open_files=-1 -report_open_timing=true| egrep 'OpenDb:'` #-run | (feature-off) avg milliseconds | std milliseconds | (feature-on) avg milliseconds | std milliseconds | change (%) -- | -- | -- | -- | -- | -- 10 | 11.4018 | 5.95173 | 9.47788 | 1.57538 | -16.87382694 20 | 9.23746 | 0.841053 | 9.32377 | 1.14074 | 0.9343477536 40 | 9.0876 | 0.671129 | 9.35053 | 1.11713 | 2.893283155 80 | 9.72514 | 2.28459 | 9.52013 | 1.0894 | -2.108041632 160 | 9.74677 | 0.991234 | 9.84743 | 1.73396 | 1.032752389 320 | 10.7297 | 5.11555 | 10.547 | 1.97692 | **-1.70275031** 640 | 11.7092 | 2.36565 | 11.7869 | 2.69377 | **0.6635807741** - db bench on write with cost to cache in WriteBufferManager (just in case this PR's CRM refactoring accidentally slows down anything in WBM) : `fillseq` : **+0.54% in micros/op** `./db_bench -benchmarks=fillseq -db=/dev/shm/testdb -disable_auto_compactions=1 -cost_write_buffer_to_cache=true -write_buffer_size=10000000000 | egrep 'fillseq'` #-run | (pre-PR) avg micros/op | std micros/op | (post-PR) avg micros/op | std micros/op | change (%) -- | -- | -- | -- | -- | -- 10 | 6.15 | 0.260187 | 6.289 | 0.371192 | 2.260162602 20 | 7.28025 | 0.465402 | 7.37255 | 0.451256 | 1.267813605 40 | 7.06312 | 0.490654 | 7.13803 | 0.478676 | **1.060579461** 80 | 7.14035 | 0.972831 | 7.14196 | 0.92971 | **0.02254791432** - filter bench: `bloom filter`: **-0.78% in ms/key** - ` ./filter_bench -impl=2 -quick -reserve_table_builder_memory=true | grep 'Build avg'` #-run | (pre-PR) avg ns/key | std ns/key | (post-PR) ns/key | std ns/key | change (%) -- | -- | -- | -- | -- | -- 10 | 26.4369 | 0.442182 | 26.3273 | 0.422919 | **-0.4145720565** 20 | 26.4451 | 0.592787 | 26.1419 | 0.62451 | **-1.1465262** - Crash test `python3 tools/db_crashtest.py blackbox --reserve_table_reader_memory=1 --cache_size=1` killed as normal Reviewed By: ajkr Differential Revision: D35136549 Pulled By: hx235 fbshipit-source-id: 146978858d0f900f43f4eb09bfd3e83195e3be28 |

||

|

|

bcabee737f |

Improve comments for some files (#9793)

Summary: Update the comments, e.g. fixing typo, formatting, etc. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9793 Reviewed By: jay-zhuang Differential Revision: D35323989 Pulled By: gitbw95 fbshipit-source-id: 4a72fc02b67abaae8be0d1439b68f9967a68052d |

||

|

|

bfea9e7c02 |

Add benchmark for GetMergeOperands() (#9785)

Summary: There's an existing benchmark, "getmergeoperands", but it is unconventional in that it has multiple phases and hardcoded setup parameters. This PR adds a different one, "readrandomoperands", that follows the pattern of other benchmarks of having a single phase and taking its configuration from existing flags. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9785 Test Plan: ``` $ ./db_bench -benchmarks=mergerandom -merge_operator=StringAppendOperator -write_buffer_size=1048576 -max_bytes_for_level_base=4194304 -target_file_size_base=1048576 -compression_type=none -disable_auto_compactions=true $ ./db_bench -use_existing_db=true -benchmarks=readrandomoperands -merge_operator=StringAppendOperator -disable_auto_compactions=true -duration=10 ... readrandomoperands : 542.082 micros/op 1844 ops/sec; 0.2 MB/s (11980 of 18999 found) ``` Reviewed By: jay-zhuang Differential Revision: D35290412 Pulled By: ajkr fbshipit-source-id: fb367ca614b128cef844a75f0e5d9dd7c3328d85 |

||

|

|

37de4e1d08 |

Correctly set ThreadState::tid (#9757)

Summary: Fixes a bug introduced by me in https://github.com/facebook/rocksdb/pull/9733 That PR added a counter so that the per-thread seeds in ThreadState would be unique even when --benchmarks had more than one test. But it incorrectly used this counter as the value for ThreadState::tid as well. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9757 Test Plan: Confirm that unexpectedly good QPS results on the regression tests return to normal with this fix. I have confirmed that the QPS increase starts with the PR 9733 diff. Reviewed By: jay-zhuang Differential Revision: D35149303 Pulled By: mdcallag fbshipit-source-id: dee5cc36b7faaba6c3be6d6a253d3c2eaad72864 |

||

|

|

1a130fa3c1 |

db_bench should use a good seed when --seed is not set or set to 0 (#9740)

Summary: This is for https://github.com/facebook/rocksdb/issues/9737 I have wasted more than a few hours running db_bench benchmarks where --seed was not set and getting better than expected results because cache hit rates are great because multiple invocations of db_bench used the same value for --seed or did not set it, and then all used 0. The result is that all see the same sequence of keys. Others have done the same. The problem is worse in that it is easy to miss and the result is a benchmark with results that are misleading. A good way to avoid this is to set it to the equivalent of gettimeofday() when either --seed is not set or it is set to 0 (the default). With this change the actual seed is printed when it was 0 at process start: Set seed to 1647992570365606 because --seed was 0 Pull Request resolved: https://github.com/facebook/rocksdb/pull/9740 Test Plan: Perf results: ./db_bench --benchmarks=fillseq,readrandom --num=1000000 --reads=4000000 readrandom : 6.469 micros/op 154583 ops/sec; 17.1 MB/s (4000000 of 4000000 found) ./db_bench --benchmarks=fillseq,readrandom --num=1000000 --reads=4000000 --seed=0 readrandom : 6.565 micros/op 152321 ops/sec; 16.9 MB/s (4000000 of 4000000 found) ./db_bench --benchmarks=fillseq,readrandom --num=1000000 --reads=4000000 --seed=1 readrandom : 6.461 micros/op 154777 ops/sec; 17.1 MB/s (4000000 of 4000000 found) ./db_bench --benchmarks=fillseq,readrandom --num=1000000 --reads=4000000 --seed=2 readrandom : 6.525 micros/op 153244 ops/sec; 17.0 MB/s (4000000 of 4000000 found) Reviewed By: jay-zhuang Differential Revision: D35145361 Pulled By: mdcallag fbshipit-source-id: 2b35b153ccec46b27d7c9405997523555fc51267 |

||

|

|

409635cb2a |

Add --slow_usecs option to determine when long op message is printed (#9732)

Summary: This adds the --slow_usecs option with a default value of 1M. Operations that take this much time have a message printed when --histogram=1, --stats_interval=0 and --stats_interval_seconds=0. The current code hardwired this to 20,000 usecs and for some stress tests that reduced throughput by 20% or more. This is for https://github.com/facebook/rocksdb/issues/9620 Pull Request resolved: https://github.com/facebook/rocksdb/pull/9732 Test Plan: ./db_bench --benchmarks=fillrandom,readrandom --compression_type=lz4 --slow_usecs=100 --histogram=1 ./db_bench --benchmarks=fillrandom,readrandom --compression_type=lz4 --slow_usecs=100000 --histogram=1 Reviewed By: jay-zhuang Differential Revision: D35121522 Pulled By: mdcallag fbshipit-source-id: daf27f937efd748980545d6395db332712fc078b |

||

|

|

f219e3d5d8 |

db_bench should fail on bad values for --compaction_fadvice and --value_size_distribution_type (#9741)

Summary: db_bench quietly parses and ignores bad values for --compaction_fadvice and --value_size_distribution_type I prefer that it fail for them as it does for bad option values in most other cases. Otherwise a benchmark result will be provided for the wrong configuration and the result will be misleading. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9741 Test Plan: These now fail: ./db_bench --compaction_fadvice=noney Unknown compaction fadvice:noney ./db_bench --value_size_distribution_type=norma Cannot parse distribution type 'norma' While correct values continue to work: ./db_bench --value_size_distribution_type=normal Initializing RocksDB Options from the specified file Initializing RocksDB Options from command-line flags ./db_bench --compaction_fadvice=none Initializing RocksDB Options from the specified file Initializing RocksDB Options from command-line flags Reviewed By: siying Differential Revision: D35115973 Pulled By: mdcallag fbshipit-source-id: c2b10de5c2d1ea7c7539e676f5bd556351f5d370 |

||

|

|

d583d23d86 |

Avoid seed reuse when --benchmarks has more than one test (#9733)

Summary: When --benchmarks has more than one test then the threads in one benchmark will use the same set of seeds as the threads in the previous benchmark. This diff fixe that. This fixes https://github.com/facebook/rocksdb/issues/9632 Pull Request resolved: https://github.com/facebook/rocksdb/pull/9733 Test Plan: For this command line the block cache is 8GB, so it caches at most 1024 8KB blocks. Note that without this diff the second run of readrandom has a much better response time because seed reuse means the second run reads the same 1000 blocks as the first run and they are cached at that point. But with this diff that does not happen. ./db_bench --benchmarks=fillseq,flush,compact0,waitforcompaction,levelstats,readrandom,readrandom --compression_type=zlib --num=10000000 --reads=1000 --block_size=8192 ... ``` Level Files Size(MB) -------------------- 0 0 0 1 11 238 2 9 253 3 0 0 4 0 0 5 0 0 6 0 0 ``` --- perf results without this diff DB path: [/tmp/rocksdbtest-2260/dbbench] readrandom : 46.212 micros/op 21618 ops/sec; 2.4 MB/s (1000 of 1000 found) DB path: [/tmp/rocksdbtest-2260/dbbench] readrandom : 21.963 micros/op 45450 ops/sec; 5.0 MB/s (1000 of 1000 found) --- perf results with this diff DB path: [/tmp/rocksdbtest-2260/dbbench] readrandom : 47.213 micros/op 21126 ops/sec; 2.3 MB/s (1000 of 1000 found) DB path: [/tmp/rocksdbtest-2260/dbbench] readrandom : 42.880 micros/op 23299 ops/sec; 2.6 MB/s (1000 of 1000 found) Reviewed By: jay-zhuang Differential Revision: D35089763 Pulled By: mdcallag fbshipit-source-id: 1b50143a07afe876b8c8e5fa50dd94a8ce57fc6b |

||

|

|

6904fd0c86 |

db_bench should fail when an option uses an invalid compression type (#9729)

Summary: This changes db_bench to fail at startup for invalid compression types. It had been changing them to Snappy. For other invalid options it fails at startup. This is for https://github.com/facebook/rocksdb/issues/9621 Pull Request resolved: https://github.com/facebook/rocksdb/pull/9729 Test Plan: This continues to work: ./db_bench --benchmarks=fillrandom --compression_type=lz4 This now fails rather than changing the compression type to Snappy ./db_bench --benchmarks=fillrandom --compression_type=lz44 Cannot parse compression type 'lz44' Reviewed By: jay-zhuang Differential Revision: D35081323 Pulled By: mdcallag fbshipit-source-id: 9b38c835abddce11aa7feb235df63f53cf829981 |

||

|

|

d71e5a5beb |

Add number of running flushes & compactions to --stats_per_interval output (#9726)

Summary: This is for https://github.com/facebook/rocksdb/issues/9709 and add two lines to the end of DB Stats for num-running-compactions and num-running-flushes. For example ... ** DB Stats ** Uptime(secs): 6.0 total, 1.0 interval Cumulative writes: 915K writes, 915K keys, 915K commit groups, 1.0 writes per commit group, ingest: 0.11 GB, 18.95 MB/s Cumulative WAL: 915K writes, 0 syncs, 915000.00 writes per sync, written: 0.11 GB, 18.95 MB/s Cumulative stall: 00:00:0.000 H:M:S, 0.0 percent Interval writes: 133K writes, 133K keys, 133K commit groups, 1.0 writes per commit group, ingest: 16.62 MB, 16.53 MB/s Interval WAL: 133K writes, 0 syncs, 133000.00 writes per sync, written: 0.02 GB, 16.53 MB/s Interval stall: 00:00:0.000 H:M:S, 0.0 percent num-running-compactions: 0 num-running-flushes: 0 Pull Request resolved: https://github.com/facebook/rocksdb/pull/9726 Reviewed By: jay-zhuang Differential Revision: D35066759 Pulled By: mdcallag fbshipit-source-id: c161fadd3c15c5aa715a820dab6bfedb46dc099b |

||

|

|

f07eec1bf8 |

Add async_io read option in db_bench (#9735)

Summary: Add async_io Read option in db_bench Pull Request resolved: https://github.com/facebook/rocksdb/pull/9735 Test Plan: ./db_bench -use_existing_db=true -db=/tmp/prefix_scan_prefetch_main -benchmarks="seekrandom" -key_size=32 -value_size=512 -num=5000000 -use_direct_reads=true -seek_nexts=327680 -duration=120 -ops_between_duration_checks=1 -async_io=1 Reviewed By: riversand963 Differential Revision: D35058482 Pulled By: akankshamahajan15 fbshipit-source-id: 1522b638c79f6d85bb7408c67f6ab76dbabeeee7 |

||

|

|

63a284a6ad |

For db_bench --benchmarks=fillseq with --num_multi_db load databases … (#9713)

Summary: …in order This fixes https://github.com/facebook/rocksdb/issues/9650 For db_bench --benchmarks=fillseq --num_multi_db=X it loads databases in sequence rather than randomly choosing a database per Put. The benefits are: 1) avoids long delays between flushing memtables 2) avoids flushing memtables for all of them at the same point in time 3) puts same number of keys per database so that query tests will find keys as expected Pull Request resolved: https://github.com/facebook/rocksdb/pull/9713 Test Plan: Using db_bench.1 without the change and db_bench.2 with the change: for i in 1 2; do rm -rf /data/m/rx/* ; time ./db_bench.$i --db=/data/m/rx --benchmarks=fillseq --num_multi_db=4 --num=10000000; du -hs /data/m/rx ; done --- without the change fillseq : 3.188 micros/op 313682 ops/sec; 34.7 MB/s real 2m7.787s user 1m52.776s sys 0m46.549s 2.7G /data/m/rx --- with the change fillseq : 3.149 micros/op 317563 ops/sec; 35.1 MB/s real 2m6.196s user 1m51.482s sys 0m46.003s 2.7G /data/m/rx Also, temporarily added a printf to confirm that the code switches to the next database at the right time ZZ switch to db 1 at 10000000 ZZ switch to db 2 at 20000000 ZZ switch to db 3 at 30000000 for i in 1 2; do rm -rf /data/m/rx/* ; time ./db_bench.$i --db=/data/m/rx --benchmarks=fillseq,readrandom --num_multi_db=4 --num=100000; du -hs /data/m/rx ; done --- without the change, smaller database, note that not all keys are found by readrandom because databases have < and > --num keys fillseq : 3.176 micros/op 314805 ops/sec; 34.8 MB/s readrandom : 1.913 micros/op 522616 ops/sec; 57.7 MB/s (99873 of 100000 found) --- with the change, smaller database, note that all keys are found by readrandom fillseq : 3.110 micros/op 321566 ops/sec; 35.6 MB/s readrandom : 1.714 micros/op 583257 ops/sec; 64.5 MB/s (100000 of 100000 found) Reviewed By: jay-zhuang Differential Revision: D35030168 Pulled By: mdcallag fbshipit-source-id: 2a18c4ec571d954cf5a57b00a11802a3608823ee |

||

|

|

1ca1562e35 |

Make mixgraph easier to use (#9711)

Summary: Changes: * improves monitoring by displaying average size of a Put value and average scan length * forces the minimum value size to be 10. Before this it was 0 if you didn't set the distribution parameters. * uses reasonable defaults for the distribution parameters that determine value size and scan length * includes seeks in "reads ... found" message, before this they were missing This is for https://github.com/facebook/rocksdb/issues/9672 Pull Request resolved: https://github.com/facebook/rocksdb/pull/9711 Test Plan: Before this change: ./db_bench --benchmarks=fillseq,mixgraph --mix_get_ratio=50 --mix_put_ratio=25 --mix_seek_ratio=25 --num=100000 --value_k=0.2615 --value_sigma=25.45 --iter_k=2.517 --iter_sigma=14.236 fillseq : 4.289 micros/op 233138 ops/sec; 25.8 MB/s mixgraph : 18.461 micros/op 54166 ops/sec; 755.0 MB/s ( Gets:50164 Puts:24919 Seek:24917 of 50164 in 75081 found) After this change: ./db_bench --benchmarks=fillseq,mixgraph --mix_get_ratio=50 --mix_put_ratio=25 --mix_seek_ratio=25 --num=100000 --value_k=0.2615 --value_sigma=25.45 --iter_k=2.517 --iter_sigma=14.236 fillseq : 3.974 micros/op 251553 ops/sec; 27.8 MB/s mixgraph : 16.722 micros/op 59795 ops/sec; 833.5 MB/s ( Gets:50164 Puts:24919 Seek:24917, reads 75081 in 75081 found, avg size: 36.0 value, 504.9 scan) Reviewed By: jay-zhuang Differential Revision: D35030190 Pulled By: mdcallag fbshipit-source-id: d8f555f28d869f752ddb674a524108884511b151 |

||

|

|

ca0ef54f16 |

Rate-limit automatic WAL flush after each user write (#9607)

Summary: **Context:** WAL flush is currently not rate-limited by `Options::rate_limiter`. This PR is to provide rate-limiting to auto WAL flush, the one that automatically happen after each user write operation (i.e, `Options::manual_wal_flush == false`), by adding `WriteOptions::rate_limiter_options`. Note that we are NOT rate-limiting WAL flush that do NOT automatically happen after each user write, such as `Options::manual_wal_flush == true + manual FlushWAL()` (rate-limiting multiple WAL flushes), for the benefits of: - being consistent with [ReadOptions::rate_limiter_priority](https://github.com/facebook/rocksdb/blob/7.0.fb/include/rocksdb/options.h#L515) - being able to turn off some WAL flush's rate-limiting but not all (e.g, turn off specific the WAL flush of a critical user write like a service's heartbeat) `WriteOptions::rate_limiter_options` only accept `Env::IO_USER` and `Env::IO_TOTAL` currently due to an implementation constraint. - The constraint is that we currently queue parallel writes (including WAL writes) based on FIFO policy which does not factor rate limiter priority into this layer's scheduling. If we allow lower priorities such as `Env::IO_HIGH/MID/LOW` and such writes specified with lower priorities occurs before ones specified with higher priorities (even just by a tiny bit in arrival time), the former would have blocked the latter, leading to a "priority inversion" issue and contradictory to what we promise for rate-limiting priority. Therefore we only allow `Env::IO_USER` and `Env::IO_TOTAL` right now before improving that scheduling. A pre-requisite to this feature is to support operation-level rate limiting in `WritableFileWriter`, which is also included in this PR. **Summary:** - Renamed test suite `DBRateLimiterTest to DBRateLimiterOnReadTest` for adding a new test suite - Accept `rate_limiter_priority` in `WritableFileWriter`'s private and public write functions - Passed `WriteOptions::rate_limiter_options` to `WritableFileWriter` in the path of automatic WAL flush. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9607 Test Plan: - Added new unit test to verify existing flush/compaction rate-limiting does not break, since `DBTest, RateLimitingTest` is disabled and current db-level rate-limiting tests focus on read only (e.g, `db_rate_limiter_test`, `DBTest2, RateLimitedCompactionReads`). - Added new unit test `DBRateLimiterOnWriteWALTest, AutoWalFlush` - `strace -ftt -e trace=write ./db_bench -benchmarks=fillseq -db=/dev/shm/testdb -rate_limit_auto_wal_flush=1 -rate_limiter_bytes_per_sec=15 -rate_limiter_refill_period_us=1000000 -write_buffer_size=100000000 -disable_auto_compactions=1 -num=100` - verified that WAL flush(i.e, system-call _write_) were chunked into 15 bytes and each _write_ was roughly 1 second apart - verified the chunking disappeared when `-rate_limit_auto_wal_flush=0` - crash test: `python3 tools/db_crashtest.py blackbox --disable_wal=0 --rate_limit_auto_wal_flush=1 --rate_limiter_bytes_per_sec=10485760 --interval=10` killed as normal **Benchmarked on flush/compaction to ensure no performance regression:** - compaction with rate-limiting (see table 1, avg over 1280-run): pre-change: **915635 micros/op**; post-change: **907350 micros/op (improved by 0.106%)** ``` #!/bin/bash TEST_TMPDIR=/dev/shm/testdb START=1 NUM_DATA_ENTRY=8 N=10 rm -f compact_bmk_output.txt compact_bmk_output_2.txt dont_care_output.txt for i in $(eval echo "{$START..$NUM_DATA_ENTRY}") do NUM_RUN=$(($N*(2**($i-1)))) for j in $(eval echo "{$START..$NUM_RUN}") do ./db_bench --benchmarks=fillrandom -db=$TEST_TMPDIR -disable_auto_compactions=1 -write_buffer_size=6710886 > dont_care_output.txt && ./db_bench --benchmarks=compact -use_existing_db=1 -db=$TEST_TMPDIR -level0_file_num_compaction_trigger=1 -rate_limiter_bytes_per_sec=100000000 | egrep 'compact' done > compact_bmk_output.txt && awk -v NUM_RUN=$NUM_RUN '{sum+=$3;sum_sqrt+=$3^2}END{print sum/NUM_RUN, sqrt(sum_sqrt/NUM_RUN-(sum/NUM_RUN)^2)}' compact_bmk_output.txt >> compact_bmk_output_2.txt done ``` - compaction w/o rate-limiting (see table 2, avg over 640-run): pre-change: **822197 micros/op**; post-change: **823148 micros/op (regressed by 0.12%)** ``` Same as above script, except that -rate_limiter_bytes_per_sec=0 ``` - flush with rate-limiting (see table 3, avg over 320-run, run on the [patch]( |

||

|

|

f706a9c199 |

Add a secondary cache implementation based on LRUCache 1 (#9518)

Summary: **Summary:** RocksDB uses a block cache to reduce IO and make queries more efficient. The block cache is based on the LRU algorithm (LRUCache) and keeps objects containing uncompressed data, such as Block, ParsedFullFilterBlock etc. It allows the user to configure a second level cache (rocksdb::SecondaryCache) to extend the primary block cache by holding items evicted from it. Some of the major RocksDB users, like MyRocks, use direct IO and would like to use a primary block cache for uncompressed data and a secondary cache for compressed data. The latter allows us to mitigate the loss of the Linux page cache due to direct IO. This PR includes a concrete implementation of rocksdb::SecondaryCache that integrates with compression libraries such as LZ4 and implements an LRU cache to hold compressed blocks. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9518 Test Plan: In this PR, the lru_secondary_cache_test.cc includes the following tests: 1. The unit tests for the secondary cache with either compression or no compression, such as basic tests, fails tests. 2. The integration tests with both primary cache and this secondary cache . **Follow Up:** 1. Statistics (e.g. compression ratio) will be added in another PR. 2. Once this implementation is ready, I will do some shadow testing and benchmarking with UDB to measure the impact. Reviewed By: anand1976 Differential Revision: D34430930 Pulled By: gitbw95 fbshipit-source-id: 218d78b672a2f914856d8a90ff32f2f5b5043ded |

||

|

|

39b0d92153 |

Add record to set WAL compression type if enabled (#9556)

Summary: When WAL compression is enabled, add a record (new record type) to store the compression type to indicate that all subsequent records are compressed. The log reader will store the compression type when this record is encountered and use the type to uncompress the subsequent records. Compress and uncompress to be implemented in subsequent diffs. Enabled WAL compression in some WAL tests to check for regressions. Some tests that rely on offsets have been disabled. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9556 Reviewed By: anand1976 Differential Revision: D34308216 Pulled By: sidroyc fbshipit-source-id: 7f10595e46f3277f1ea2d309fbf95e2e935a8705 |

||

|

|

babe56ddba |

Add rate limiter priority to ReadOptions (#9424)

Summary: Users can set the priority for file reads associated with their operation by setting `ReadOptions::rate_limiter_priority` to something other than `Env::IO_TOTAL`. Rate limiting `VerifyChecksum()` and `VerifyFileChecksums()` is the motivation for this PR, so it also includes benchmarks and minor bug fixes to get that working. `RandomAccessFileReader::Read()` already had support for rate limiting compaction reads. I changed that rate limiting to be non-specific to compaction, but rather performed according to the passed in `Env::IOPriority`. Now the compaction read rate limiting is supported by setting `rate_limiter_priority = Env::IO_LOW` on its `ReadOptions`. There is no default value for the new `Env::IOPriority` parameter to `RandomAccessFileReader::Read()`. That means this PR goes through all callers (in some cases multiple layers up the call stack) to find a `ReadOptions` to provide the priority. There are TODOs for cases I believe it would be good to let user control the priority some day (e.g., file footer reads), and no TODO in cases I believe it doesn't matter (e.g., trace file reads). The API doc only lists the missing cases where a file read associated with a provided `ReadOptions` cannot be rate limited. For cases like file ingestion checksum calculation, there is no API to provide `ReadOptions` or `Env::IOPriority`, so I didn't count that as missing. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9424 Test Plan: - new unit tests - new benchmarks on ~50MB database with 1MB/s read rate limit and 100ms refill interval; verified with strace reads are chunked (at 0.1MB per chunk) and spaced roughly 100ms apart. - setup command: `./db_bench -benchmarks=fillrandom,compact -db=/tmp/testdb -target_file_size_base=1048576 -disable_auto_compactions=true -file_checksum=true` - benchmarks command: `strace -ttfe pread64 ./db_bench -benchmarks=verifychecksum,verifyfilechecksums -use_existing_db=true -db=/tmp/testdb -rate_limiter_bytes_per_sec=1048576 -rate_limit_bg_reads=1 -rate_limit_user_ops=true -file_checksum=true` - crash test using IO_USER priority on non-validation reads with https://github.com/facebook/rocksdb/issues/9567 reverted: `python3 tools/db_crashtest.py blackbox --max_key=1000000 --write_buffer_size=524288 --target_file_size_base=524288 --level_compaction_dynamic_level_bytes=true --duration=3600 --rate_limit_bg_reads=true --rate_limit_user_ops=true --rate_limiter_bytes_per_sec=10485760 --interval=10` Reviewed By: hx235 Differential Revision: D33747386 Pulled By: ajkr fbshipit-source-id: a2d985e97912fba8c54763798e04f006ccc56e0c |

||

|

|

479eb1aad6 |

Hide deprecated, inefficient block-based filter from public API (#9535)

Summary: This change removes the ability to configure the deprecated, inefficient block-based filter in the public API. Options that would have enabled it now use "full" (and optionally partitioned) filters. Existing block-based filters can still be read and used, and a "back door" way to build them still exists, for testing and in case of trouble. About the only way this removal would cause an issue for users is if temporary memory for filter construction greatly increases. In HISTORY.md we suggest a few possible mitigations: partitioned filters, smaller SST files, or setting reserve_table_builder_memory=true. Or users who have customized a FilterPolicy using the CreateFilter/KeyMayMatch mechanism removed in https://github.com/facebook/rocksdb/issues/9501 will have to upgrade their code. (It's long past time for people to move to the new builder/reader customization interface.) This change also introduces some internal-use-only configuration strings for testing specific filter implementations while bypassing some compatibility / intelligence logic. This is intended to hint at a path toward making FilterPolicy Customizable, but it also gives us a "back door" way to configure block-based filter. Aside: updated db_bench so that -readonly implies -use_existing_db Pull Request resolved: https://github.com/facebook/rocksdb/pull/9535 Test Plan: Unit tests updated. Specifically, * BlockBasedTableTest.BlockReadCountTest is tweaked to validate the back door configuration interface and ignoring of `use_block_based_builder`. * BlockBasedTableTest.TracingGetTest is migrated from testing block-based filter access pattern to full filter access patter, by re-ordering some things. * Options test (pretty self-explanatory) Performance test - create with `./db_bench -db=/dev/shm/rocksdb1 -bloom_bits=10 -cache_index_and_filter_blocks=1 -benchmarks=fillrandom -num=10000000 -compaction_style=2 -fifo_compaction_max_table_files_size_mb=10000 -fifo_compaction_allow_compaction=0` with and without `-use_block_based_filter`, which creates a DB with 21 SST files in L0. Read with `./db_bench -db=/dev/shm/rocksdb1 -readonly -bloom_bits=10 -cache_index_and_filter_blocks=1 -benchmarks=readrandom -num=10000000 -compaction_style=2 -fifo_compaction_max_table_files_size_mb=10000 -fifo_compaction_allow_compaction=0 -duration=30` Without -use_block_based_filter: readrandom 464 ops/sec, 689280 KB DB With -use_block_based_filter: readrandom 169 ops/sec, 690996 KB DB No consistent difference with fillrandom Reviewed By: jay-zhuang Differential Revision: D34153871 Pulled By: pdillinger fbshipit-source-id: 31f4a933c542f8f09aca47fa64aec67832a69738 |

||

|

|

9745c68eb1 |

Remove deprecated option new_table_reader_for_compaction_inputs (#9443)

Summary: In RocksDB option new_table_reader_for_compaction_inputs has not effect on Compaction or on the behavior of RocksDB library. Therefore, we are removing it in the upcoming 7.0 release. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9443 Test Plan: CircleCI Reviewed By: ajkr Differential Revision: D33788508 Pulled By: akankshamahajan15 fbshipit-source-id: 324ca6f12bfd019e9bd5e1b0cdac39be5c3cec7d |

||

|

|

036bbab6f7 |

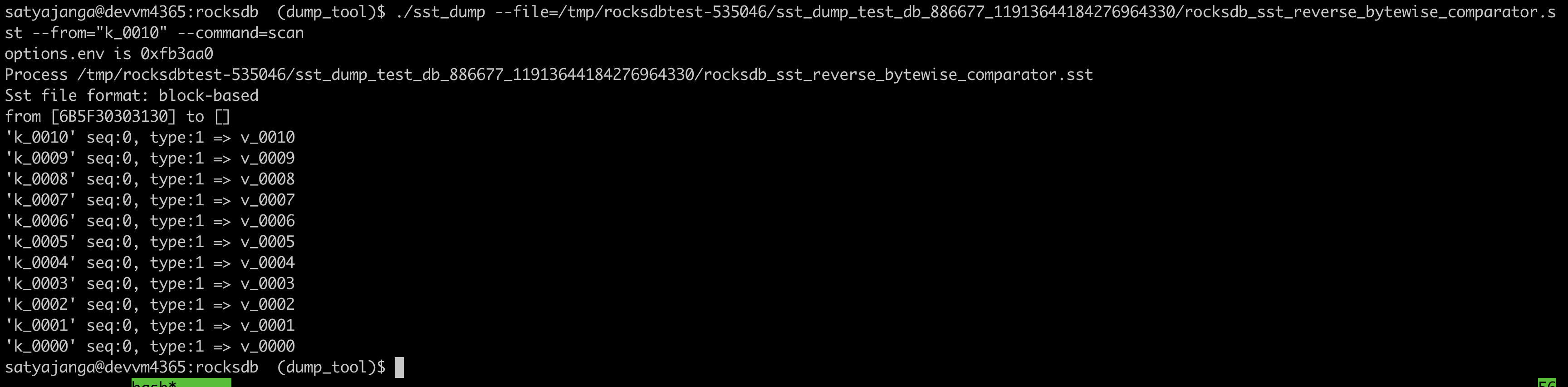

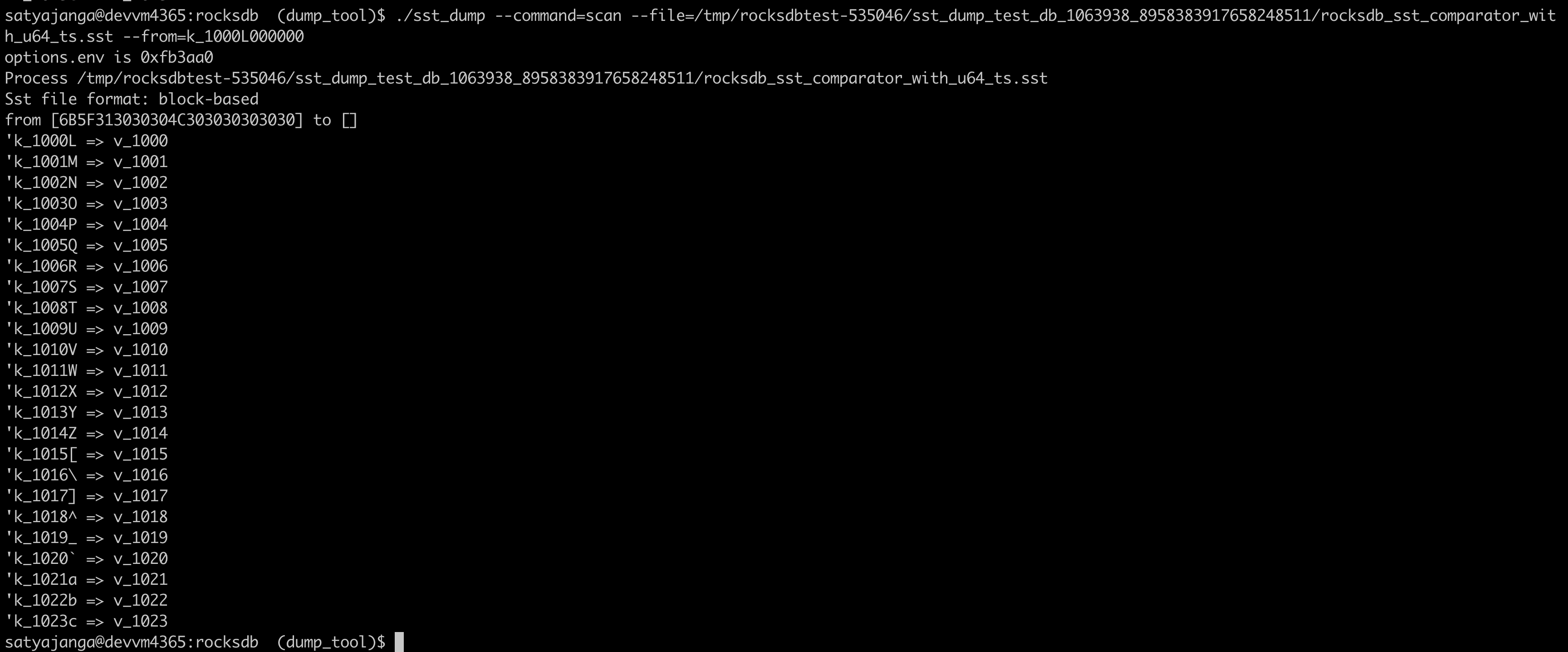

Use the comparator from the sst file table properties in sst_dump_tool (#9491)

Summary: We introduced a new Comparator for timestamp in user keys. In the sst_dump_tool by default we use BytewiseComparator to read sst files. This change allows us to read comparator_name from table properties in meta data block and use it to read. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9491 Test Plan: added unittests for new functionality. make check   Reviewed By: riversand963 Differential Revision: D33993614 Pulled By: satyajanga fbshipit-source-id: 4b5cf938e6d2cb3931d763bef5baccc900b8c536 |

||

|

|

aae3093719 |

Introduce a CountedFileSystem for counting file operations (#9283)

Summary: Added a CountedFileSystem that tracks a number of file operations (opens, closes, deletes, renames, flushes, syncs, fsyncs, reads, writes). This class was based on the ReportFileOpEnv from db_bench. This is a stepping stone PR to be able to change the SpecialEnv into a SpecialFileSystem, where several of the file varieties wish to do operation counting. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9283 Reviewed By: pdillinger Differential Revision: D33062004 Pulled By: mrambacher fbshipit-source-id: d0d297a7fb9c48c06cbf685e5fa755c27193b6f5 |

||

|

|

3122cb4358 |

Revise APIs related to user-defined timestamp (#8946)

Summary: ajkr reminded me that we have a rule of not including per-kv related data in `WriteOptions`. Namely, `WriteOptions` should not include information about "what-to-write", but should just include information about "how-to-write". According to this rule, `WriteOptions::timestamp` (experimental) is clearly a violation. Therefore, this PR removes `WriteOptions::timestamp` for compliance. After the removal, we need to pass timestamp info via another set of APIs. This PR proposes a set of overloaded functions `Put(write_opts, key, value, ts)`, `Delete(write_opts, key, ts)`, and `SingleDelete(write_opts, key, ts)`. Planned to add `Write(write_opts, batch, ts)`, but its complexity made me reconsider doing it in another PR (maybe). For better checking and returning error early, we also add a new set of APIs to `WriteBatch` that take extra `timestamp` information when writing to `WriteBatch`es. These set of APIs in `WriteBatchWithIndex` are currently not supported, and are on our TODO list. Removed `WriteBatch::AssignTimestamps()` and renamed `WriteBatch::AssignTimestamp()` to `WriteBatch::UpdateTimestamps()` since this method require that all keys have space for timestamps allocated already and multiple timestamps can be updated. The constructor of `WriteBatch` now takes a fourth argument `default_cf_ts_sz` which is the timestamp size of the default column family. This will be used to allocate space when calling APIs that do not specify a column family handle. Also, updated `DB::Get()`, `DB::MultiGet()`, `DB::NewIterator()`, `DB::NewIterators()` methods, replacing some assertions about timestamp to returning Status code. Pull Request resolved: https://github.com/facebook/rocksdb/pull/8946 Test Plan: make check ./db_bench -benchmarks=fillseq,fillrandom,readrandom,readseq,deleterandom -user_timestamp_size=8 ./db_stress --user_timestamp_size=8 -nooverwritepercent=0 -test_secondary=0 -secondary_catch_up_one_in=0 -continuous_verification_interval=0 Make sure there is no perf regression by running the following ``` ./db_bench_opt -db=/dev/shm/rocksdb -use_existing_db=0 -level0_stop_writes_trigger=256 -level0_slowdown_writes_trigger=256 -level0_file_num_compaction_trigger=256 -disable_wal=1 -duration=10 -benchmarks=fillrandom ``` Before this PR ``` DB path: [/dev/shm/rocksdb] fillrandom : 1.831 micros/op 546235 ops/sec; 60.4 MB/s ``` After this PR ``` DB path: [/dev/shm/rocksdb] fillrandom : 1.820 micros/op 549404 ops/sec; 60.8 MB/s ``` Reviewed By: ltamasi Differential Revision: D33721359 Pulled By: riversand963 fbshipit-source-id: c131561534272c120ffb80711d42748d21badf09 |

||

|

|

f6d7ec1d02 |

Ignore total_order_seek in DB::Get (#9427)

Summary: Apparently setting total_order_seek=true for DB::Get was intended to allow accurate read semantics if the current prefix extractor doesn't match what was used to generate SST files on disk. But since prefix_extractor was made a mutable option in 5.14.0, we have been able to detect this case and provide the correct semantics regardless of the total_order_seek option. Since that time, the option has only made Get() slower in a reasonably common case: prefix_extractor unchanged and whole_key_filtering=false. So this change primarily removes unnecessary effect of total_order_seek on Get. Also cleans up some related comments. Also adds a -total_order_seek option to db_bench and canonicalizes handling of ReadOptions in db_bench so that command line options have the expected association with library features. (There is potential for change in regression test behavior, but the old behavior is likely indefensible, or some other inconsistency would need to be fixed.) TODO in follow-up work: there should be no reason for Get() to depend on current prefix extractor at all. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9427 Test Plan: Unit tests updated. Performance (using db_bench update) Create DB with `TEST_TMPDIR=/dev/shm/rocksdb ./db_bench -benchmarks=fillrandom -num=10000000 -disable_wal=1 -write_buffer_size=10000000 -bloom_bits=16 -compaction_style=2 -fifo_compaction_max_table_files_size_mb=10000 -fifo_compaction_allow_compaction=0 -prefix_size=12 -whole_key_filtering=0` Test with and without `-total_order_seek` on `TEST_TMPDIR=/dev/shm/rocksdb ./db_bench -use_existing_db -readonly -benchmarks=readrandom -num=10000000 -duration=40 -disable_wal=1 -bloom_bits=16 -compaction_style=2 -fifo_compaction_max_table_files_size_mb=10000 -fifo_compaction_allow_compaction=0 -prefix_size=12` Before this change, total_order_seek=false: 25188 ops/sec Before this change, total_order_seek=true: 1222 ops/sec (~20x slower) After this change, total_order_seek=false: 24570 ops/sec After this change, total_order_seek=true: 25012 ops/sec (indistinguishable) Reviewed By: siying Differential Revision: D33753458 Pulled By: pdillinger fbshipit-source-id: bf892f34907a5e407d9c40bd4d42f0adbcbe0014 |

||

|

|

42cca28ebb |

Remove deprecated API AdvancedColumnFamilyOptions::rate_limit_delay_max_milliseconds (#9455)

Summary: **Context/Summary:** AdvancedColumnFamilyOptions::rate_limit_delay_max_milliseconds has been marked as deprecated and it's time to actually remove the code. - Keep `soft_rate_limit`/`hard_rate_limit` in `cf_mutable_options_type_info` to prevent throwing `InvalidArgument` in `GetColumnFamilyOptionsFromMap` when reading an option file still with these options (e.g, old option file generated from RocksDB before the deprecation) - Keep `soft_rate_limit`/`hard_rate_limit` in under `OptionsOldApiTest.GetOptionsFromMapTest` to test the case mentioned above. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9455 Test Plan: Rely on my eyeball and CI Reviewed By: ajkr Differential Revision: D33811664 Pulled By: hx235 fbshipit-source-id: 866859427fe710354a90f1095057f80116365ff0 |

||

|

|

22321e1027 |

Remove unused API base_background_compactions (#9462)

Summary: The API is deprecated long time ago. Clean up the codebase by removing it. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9462 Test Plan: CI, fake release: D33835220 Reviewed By: riversand963 Differential Revision: D33835103 Pulled By: jay-zhuang fbshipit-source-id: 6d2dc12c8e7fdbe2700865a3e61f0e3f78bd8184 |

||

|

|

1e0e883ca5 |

Remove deprecated API AdvancedColumnFamilyOptions::soft_rate_limit/hard_rate_limit (#9452)

Summary: **Context/Summary:** AdvancedColumnFamilyOptions::soft_rate_limit/hard_rate_limit have been marked as deprecated and it's time to actually remove the code. - Keep `soft_rate_limit`/`hard_rate_limit` in `cf_mutable_options_type_info` to prevent throwing `InvalidArgument` in `GetColumnFamilyOptionsFromMap` when reading an option file still with these options (e.g, old option file generated from RocksDB before the deprecation) - Keep `soft_rate_limit`/`hard_rate_limit` in under `OptionsOldApiTest.GetOptionsFromMapTest` to test the case mentioned above. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9452 Test Plan: Rely on my eyeball and CI Reviewed By: ajkr Differential Revision: D33804938 Pulled By: hx235 fbshipit-source-id: 133d49f7ec5238d7efceeb0a3122a5792a2b9945 |