mirror of https://github.com/facebook/rocksdb.git

Provide an allocator for new memory type to be used with RocksDB block cache (#6214)

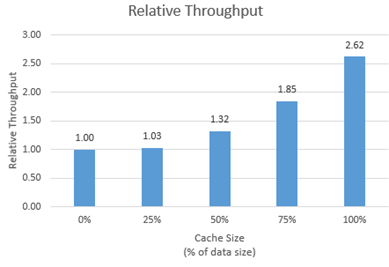

Summary: New memory technologies are being developed by various hardware vendors (Intel DCPMM is one such technology currently available). These new memory types require different libraries for allocation and management (such as PMDK and memkind). The high capacities available make it possible to provision large caches (up to several TBs in size), beyond what is achievable with DRAM. The new allocator provided in this PR uses the memkind library to allocate memory on different media.

**Performance**

We tested the new allocator using db_bench.
- For each test, we vary the size of the block cache (relative to the size of the uncompressed data in the database).
- The database is filled sequentially. Throughput is then measured with a readrandom benchmark.
- We use a uniform distribution as a worst-case scenario.

The plot shows throughput (ops/s) relative to a configuration with no block cache and default allocator. For all tests, p99 latency is below 500 us.

**Changes**
- Add MemkindKmemAllocator
- Add --use_cache_memkind_kmem_allocator db_bench option (to create an LRU block cache with the new allocator)
- Add detection of memkind library with KMEM DAX support
- Add test for MemkindKmemAllocator

**Minimum Requirements**
- kernel 5.3.12
- ndctl v67 - https://github.com/pmem/ndctl
- memkind v1.10.0 - https://github.com/memkind/memkind

**Memory Configuration**

The allocator uses the MEMKIND_DAX_KMEM memory kind. Follow the instructions on [memkind’s GitHub page](https://github.com/memkind/memkind) to set up NVDIMM memory accordingly.

Note on memory allocation with NVDIMM memory exposed as system memory:
- The MemkindKmemAllocator will only allocate from NVDIMM memory (using memkind_malloc with MEMKIND_DAX_KMEM kind).
- The default allocator is not restricted to RAM by default. Based on NUMA node latency, the kernel should allocate from local RAM preferentially, but it’s a kernel decision. numactl --preferred/--membind can be used to allocate preferentially/exclusively from the local RAM node.

**Usage**

When creating an LRU cache, pass a MemkindKmemAllocator object as argument. For example (replace capacity with the desired value in bytes):

```
#include "rocksdb/cache.h"
#include "memory/memkind_kmem_allocator.h"

NewLRUCache(
    capacity /*size_t*/,
    6 /*cache_numshardbits*/,
    false /*strict_capacity_limit*/,
    0.0 /*cache_high_pri_pool_ratio*/,
    std::make_shared<MemkindKmemAllocator>());
```

Refer to [RocksDB’s block cache documentation](https://github.com/facebook/rocksdb/wiki/Block-Cache) to assign the LRU cache as block cache for a database.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/6214
Reviewed By: cheng-chang
Differential Revision: D19292435
fbshipit-source-id: 7202f47b769e7722b539c86c2ffd669f64d7b4e1

parent 9d6974d3c9
commit 66a95f0fac

@@ -595,6 +595,7 @@ set(SOURCES
         memory/arena.cc
         memory/concurrent_arena.cc
         memory/jemalloc_nodump_allocator.cc
+        memory/memkind_kmem_allocator.cc
         memtable/alloc_tracker.cc
         memtable/hash_linklist_rep.cc
         memtable/hash_skiplist_rep.cc

@@ -1029,6 +1030,7 @@ if(WITH_TESTS)
         logging/env_logger_test.cc
         logging/event_logger_test.cc
         memory/arena_test.cc
+        memory/memkind_kmem_allocator_test.cc
         memtable/inlineskiplist_test.cc
         memtable/skiplist_test.cc
         memtable/write_buffer_manager_test.cc

Makefile (4 changes)

@@ -505,6 +505,7 @@ TESTS = \
 	column_family_test \
 	table_properties_collector_test \
 	arena_test \
+	memkind_kmem_allocator_test \
 	block_test \
 	data_block_hash_index_test \
 	cache_test \

@@ -1241,6 +1242,9 @@ db_repl_stress: tools/db_repl_stress.o $(LIBOBJECTS) $(TESTUTIL)
 
 arena_test: memory/arena_test.o $(LIBOBJECTS) $(TESTHARNESS)
 	$(AM_LINK)
 
+memkind_kmem_allocator_test: memory/memkind_kmem_allocator_test.o $(LIBOBJECTS) $(TESTHARNESS)
+	$(AM_LINK)
+
 autovector_test: util/autovector_test.o $(LIBOBJECTS) $(TESTHARNESS)
 	$(AM_LINK)

@@ -28,6 +28,7 @@
 # -DZSTD if the ZSTD library is present
 # -DNUMA if the NUMA library is present
 # -DTBB if the TBB library is present
+# -DMEMKIND if the memkind library is present
 #
 # Using gflags in rocksdb:
 # Our project depends on gflags, which requires users to take some extra steps

@@ -425,7 +426,23 @@ EOF
             COMMON_FLAGS="$COMMON_FLAGS -DROCKSDB_MALLOC_USABLE_SIZE"
         fi
     fi
 
+    if ! test $ROCKSDB_DISABLE_MEMKIND; then
+        # Test whether memkind library is installed
+        $CXX $CFLAGS $COMMON_FLAGS -lmemkind -x c++ - -o /dev/null 2>/dev/null <<EOF
+          #include <memkind.h>
+          int main() {
+            memkind_malloc(MEMKIND_DAX_KMEM, 1024);
+            return 0;
+          }
+EOF
+        if [ "$?" = 0 ]; then
+            COMMON_FLAGS="$COMMON_FLAGS -DMEMKIND"
+            PLATFORM_LDFLAGS="$PLATFORM_LDFLAGS -lmemkind"
+            JAVA_LDFLAGS="$JAVA_LDFLAGS -lmemkind"
+        fi
+    fi
+
     if ! test $ROCKSDB_DISABLE_PTHREAD_MUTEX_ADAPTIVE_NP; then
         # Test whether PTHREAD_MUTEX_ADAPTIVE_NP mutex type is available
         $CXX $CFLAGS -x c++ - -o /dev/null 2>/dev/null <<EOF

@@ -0,0 +1,32 @@
+// Copyright (c) 2011-present, Facebook, Inc. All rights reserved.
+// Copyright (c) 2019 Intel Corporation
+// This source code is licensed under both the GPLv2 (found in the
+// COPYING file in the root directory) and Apache 2.0 License
+// (found in the LICENSE.Apache file in the root directory).
+
+#ifdef MEMKIND
+
+#include "memkind_kmem_allocator.h"
+
+namespace rocksdb {
+
+void* MemkindKmemAllocator::Allocate(size_t size) {
+  void* p = memkind_malloc(MEMKIND_DAX_KMEM, size);
+  if (p == NULL) {
+    throw std::bad_alloc();
+  }
+  return p;
+}
+
+void MemkindKmemAllocator::Deallocate(void* p) {
+  memkind_free(MEMKIND_DAX_KMEM, p);
+}
+
+#ifdef ROCKSDB_MALLOC_USABLE_SIZE
+size_t MemkindKmemAllocator::UsableSize(void* p, size_t /*allocation_size*/) const {
+  return memkind_malloc_usable_size(MEMKIND_DAX_KMEM, p);
+}
+#endif // ROCKSDB_MALLOC_USABLE_SIZE
+
+} // namespace rocksdb
+#endif // MEMKIND
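Memory returned by `Allocate` has to be handed back through the same allocator's `Deallocate`, never through `free` or `delete`. One way to enforce that pairing is a `std::unique_ptr` with a custom deleter. The following is a minimal sketch only: `AllocatorLike`, `MallocAllocator`, `AllocatorDeleter`, `OwnedBuffer` and `AllocateOwned` are hypothetical names written for this illustration (they are not RocksDB or memkind APIs), and `MallocAllocator` uses `malloc` so the snippet compiles without memkind.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdlib>
#include <memory>
#include <new>

// Hypothetical stand-in mirroring the Allocate/Deallocate pair shown above.
class AllocatorLike {
 public:
  virtual ~AllocatorLike() = default;
  virtual void* Allocate(std::size_t size) = 0;
  virtual void Deallocate(void* p) = 0;
};

// Stand-in backend: malloc/free instead of memkind_malloc/memkind_free.
class MallocAllocator : public AllocatorLike {
 public:
  void* Allocate(std::size_t size) override {
    void* p = std::malloc(size);
    if (p == nullptr) throw std::bad_alloc();
    ++live_;
    return p;
  }
  void Deallocate(void* p) override {
    std::free(p);
    --live_;
  }
  int live() const { return live_; }

 private:
  int live_ = 0;  // outstanding allocations, tracked for illustration only
};

// Deleter that remembers which allocator the memory came from.
struct AllocatorDeleter {
  AllocatorLike* allocator;
  void operator()(char* p) const {
    if (allocator != nullptr && p != nullptr) allocator->Deallocate(p);
  }
};

using OwnedBuffer = std::unique_ptr<char[], AllocatorDeleter>;

// Allocates `size` bytes and ties their lifetime to the returned handle.
inline OwnedBuffer AllocateOwned(AllocatorLike& allocator, std::size_t size) {
  char* raw = static_cast<char*>(allocator.Allocate(size));
  return OwnedBuffer(raw, AllocatorDeleter{&allocator});
}
```

With this shape, a buffer obtained from `AllocateOwned(allocator, 64)` is released through `allocator.Deallocate` when the handle goes out of scope, so the allocator must outlive every buffer it produced.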

@@ -0,0 +1,28 @@
+// Copyright (c) 2011-present, Facebook, Inc. All rights reserved.
+// Copyright (c) 2019 Intel Corporation
+// This source code is licensed under both the GPLv2 (found in the
+// COPYING file in the root directory) and Apache 2.0 License
+// (found in the LICENSE.Apache file in the root directory).
+
+#pragma once
+
+#ifdef MEMKIND
+
+#include <memkind.h>
+#include "rocksdb/memory_allocator.h"
+
+namespace rocksdb {
+
+class MemkindKmemAllocator : public MemoryAllocator {
+ public:
+  const char* Name() const override { return "MemkindKmemAllocator"; };
+  void* Allocate(size_t size) override;
+  void Deallocate(void* p) override;
+#ifdef ROCKSDB_MALLOC_USABLE_SIZE
+  size_t UsableSize(void* p, size_t /*allocation_size*/) const override;
+#endif
+};
+
+} // namespace rocksdb
+#endif // MEMKIND
+

@@ -0,0 +1,99 @@
+// Copyright (c) 2011-present, Facebook, Inc. All rights reserved.
+// Copyright (c) 2019 Intel Corporation
+// This source code is licensed under both the GPLv2 (found in the
+// COPYING file in the root directory) and Apache 2.0 License
+// (found in the LICENSE.Apache file in the root directory).
+
+#include <cstdio>
+
+#ifdef MEMKIND
+#include "memkind_kmem_allocator.h"
+#include "test_util/testharness.h"
+#include "rocksdb/cache.h"
+#include "rocksdb/db.h"
+#include "rocksdb/options.h"
+#include "table/block_based/block_based_table_factory.h"
+
+namespace rocksdb {
+TEST(MemkindKmemAllocatorTest, Allocate) {
+  MemkindKmemAllocator allocator;
+  void* p;
+  try {
+    p = allocator.Allocate(1024);
+  } catch (const std::bad_alloc& e) {
+    return;
+  }
+  ASSERT_NE(p, nullptr);
+  size_t size = allocator.UsableSize(p, 1024);
+  ASSERT_GE(size, 1024);
+  allocator.Deallocate(p);
+}
+
+TEST(MemkindKmemAllocatorTest, DatabaseBlockCache) {
+  // Check if a memory node is available for allocation
+  try {
+    MemkindKmemAllocator allocator;
+    allocator.Allocate(1024);
+  } catch (const std::bad_alloc& e) {
+    return; // if no node available, skip the test
+  }
+
+  // Create database with block cache using MemkindKmemAllocator
+  Options options;
+  std::string dbname = test::PerThreadDBPath("memkind_kmem_allocator_test");
+  ASSERT_OK(DestroyDB(dbname, options));
+
+  options.create_if_missing = true;
+  std::shared_ptr<Cache> cache = NewLRUCache(1024 * 1024, 6, false, false,
+                                             std::make_shared<MemkindKmemAllocator>());
+  BlockBasedTableOptions table_options;
+  table_options.block_cache = cache;
+  options.table_factory.reset(NewBlockBasedTableFactory(table_options));
+
+  DB* db = nullptr;
+  Status s = DB::Open(options, dbname, &db);
+  ASSERT_OK(s);
+  ASSERT_NE(db, nullptr);
+  ASSERT_EQ(cache->GetUsage(), 0);
+
+  // Write 2kB (200 values, each 10 bytes)
+  int num_keys = 200;
+  WriteOptions wo;
+  std::string val = "0123456789";
+  for (int i = 0; i < num_keys; i++) {
+    std::string key = std::to_string(i);
+    s = db->Put(wo, Slice(key), Slice(val));
+    ASSERT_OK(s);
+  }
+  ASSERT_OK(db->Flush(FlushOptions())); // Flush all data from memtable so that reads are from block cache
+
+  // Read and check block cache usage
+  ReadOptions ro;
+  std::string result;
+  for (int i = 0; i < num_keys; i++) {
+    std::string key = std::to_string(i);
+    s = db->Get(ro, key, &result);
+    ASSERT_OK(s);
+    ASSERT_EQ(result, val);
+  }
+  ASSERT_GT(cache->GetUsage(), 2000);
+
+  // Close database
+  s = db->Close();
+  ASSERT_OK(s);
+  ASSERT_OK(DestroyDB(dbname, options));
+}
+} // namespace rocksdb
+
+int main(int argc, char** argv) {
+  ::testing::InitGoogleTest(&argc, argv);
+  return RUN_ALL_TESTS();
+}
+
+#else
+
+int main(int /*argc*/, char** /*argv*/) {
+  printf("Skip memkind_kmem_allocator_test as the required library memkind is missing.");
+}
+
+#endif // MEMKIND
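Both tests above probe the allocator first and return early on `std::bad_alloc`, which is what `Allocate` throws when `memkind_malloc` returns `NULL`. The probe in `DatabaseBlockCache` does not hand its 1024-byte block back to `Deallocate`. A minimal sketch of a probe that releases the block is shown below; `ProbeAllocator`, `AllocatorLike`, `WorkingAllocator` and `UnavailableAllocator` are hypothetical names for illustration, and the two stand-in allocators replace memkind so the snippet compiles on any machine.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdlib>
#include <new>

// Hypothetical stand-in for the Allocate/Deallocate interface.
class AllocatorLike {
 public:
  virtual ~AllocatorLike() = default;
  virtual void* Allocate(std::size_t size) = 0;
  virtual void Deallocate(void* p) = 0;
};

// Returns true if `allocator` can serve a small request.
// The probe block is released before returning.
inline bool ProbeAllocator(AllocatorLike& allocator,
                           std::size_t probe_size = 1024) {
  try {
    void* p = allocator.Allocate(probe_size);
    allocator.Deallocate(p);
    return true;
  } catch (const std::bad_alloc&) {
    return false;
  }
}

// Stand-in for a machine where the requested memory kind is usable.
class WorkingAllocator : public AllocatorLike {
 public:
  void* Allocate(std::size_t size) override {
    void* p = std::malloc(size);
    if (p == nullptr) throw std::bad_alloc();
    ++outstanding_;
    return p;
  }
  void Deallocate(void* p) override {
    std::free(p);
    --outstanding_;
  }
  int outstanding() const { return outstanding_; }

 private:
  int outstanding_ = 0;
};

// Stand-in for a machine without a usable memory node: every request fails.
class UnavailableAllocator : public AllocatorLike {
 public:
  void* Allocate(std::size_t) override { throw std::bad_alloc(); }
  void Deallocate(void*) override {}
};
```

A caller could use the result to decide whether to pass the custom allocator to the cache or to fall back to the default one.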

src.mk (2 changes)

@@ -92,6 +92,7 @@ LIB_SOURCES = \
   memory/arena.cc \
   memory/concurrent_arena.cc \
   memory/jemalloc_nodump_allocator.cc \
+  memory/memkind_kmem_allocator.cc \
   memtable/alloc_tracker.cc \
   memtable/hash_linklist_rep.cc \
   memtable/hash_skiplist_rep.cc \

@@ -402,6 +403,7 @@ MAIN_SOURCES = \
   logging/env_logger_test.cc \
   logging/event_logger_test.cc \
   memory/arena_test.cc \
+  memory/memkind_kmem_allocator_test.cc \
   memtable/inlineskiplist_test.cc \
   memtable/memtablerep_bench.cc \
   memtable/skiplist_test.cc \

@@ -73,6 +73,10 @@
 #include "utilities/merge_operators/sortlist.h"
 #include "utilities/persistent_cache/block_cache_tier.h"
 
+#ifdef MEMKIND
+#include "memory/memkind_kmem_allocator.h"
+#endif
+
 #ifdef OS_WIN
 #include <io.h> // open/close
 #endif

@@ -452,6 +456,9 @@ DEFINE_int64(simcache_size, -1,
 DEFINE_bool(cache_index_and_filter_blocks, false,
             "Cache index/filter blocks in block cache.");
 
+DEFINE_bool(use_cache_memkind_kmem_allocator, false,
+            "Use memkind kmem allocator for block cache.");
+
 DEFINE_bool(partition_index_and_filters, false,
             "Partition index and filter blocks.");
 

@@ -2631,9 +2638,22 @@ class Benchmark {
       }
       return cache;
     } else {
-      return NewLRUCache(
-          static_cast<size_t>(capacity), FLAGS_cache_numshardbits,
-          false /*strict_capacity_limit*/, FLAGS_cache_high_pri_pool_ratio);
+      if(FLAGS_use_cache_memkind_kmem_allocator) {
+#ifdef MEMKIND
+        return NewLRUCache(
+            static_cast<size_t>(capacity), FLAGS_cache_numshardbits,
+            false /*strict_capacity_limit*/, FLAGS_cache_high_pri_pool_ratio,
+            std::make_shared<MemkindKmemAllocator>());
+
+#else
+        fprintf(stderr, "Memkind library is not linked with the binary.");
+        exit(1);
+#endif
+      } else {
+        return NewLRUCache(
+            static_cast<size_t>(capacity), FLAGS_cache_numshardbits,
+            false /*strict_capacity_limit*/, FLAGS_cache_high_pri_pool_ratio);
+      }
     }
   }
 
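The hunk above has three outcomes: the flag is off (default allocator), the flag is on and memkind is compiled in (memkind allocator), or the flag is on but the binary was built without `MEMKIND` (error message and `exit(1)`). The same decision can be sketched as a pure function that reports the error to the caller instead of exiting. This is an illustration only: `AllocatorSelection` and `SelectCacheAllocator` are hypothetical names, and the `memkind_compiled_in` parameter stands in for the `#ifdef MEMKIND` check.

```cpp
#include <cassert>
#include <string>

// Hypothetical result type for this sketch (not part of db_bench).
struct AllocatorSelection {
  bool ok;            // false if the request cannot be honoured
  bool use_memkind;   // true if the memkind-backed allocator should be used
  std::string error;  // reason when ok == false, empty otherwise
};

// `memkind_requested` stands in for FLAGS_use_cache_memkind_kmem_allocator,
// `memkind_compiled_in` for the MEMKIND preprocessor definition.
inline AllocatorSelection SelectCacheAllocator(bool memkind_requested,
                                               bool memkind_compiled_in) {
  if (!memkind_requested) {
    return {true, false, ""};
  }
  if (!memkind_compiled_in) {
    return {false, false, "Memkind library is not linked with the binary."};
  }
  return {true, true, ""};
}
```

Returning the outcome as a value keeps the policy testable; the caller still decides whether a failed selection should terminate the process, as db_bench does.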
